Quantum Machine Learning Demos Using Autograd

The following examples use the variational quantum circuit interface in pyvqnet.qnn.vqc to implement quantum machine learning algorithms. This interface represents the evolution of a quantum state under quantum logic gates as a state vector, and computes the gradients of the variational circuit parameters by automatic differentiation.

Note the use of pyvqnet.qnn.vqc.QMachine in the following examples. This class stores the quantum state vector data. Before computing each batch of data, and after each measurement, call pyvqnet.qnn.vqc.QMachine.reset_states to reinitialize the state vector so that its batch dimension matches the batch_size of the input data.
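To make the role of the batch dimension concrete, here is a minimal NumPy sketch of a batched single-qubit state-vector simulator. It is not VQNet's QMachine: reset_states, rx and expval_z below are hypothetical stand-ins that only mirror the roles described above. The state is stored as an array of shape (batch_size, 2**n_qubits), and each sample in the batch gets its own rotation angle. For RX(θ) applied to |0⟩, the Pauli-Z expectation value is cos θ.

```python
import numpy as np

def reset_states(batch_size, n_qubits=1):
    # hypothetical helper: one |0...0> state per sample in the batch
    states = np.zeros((batch_size, 2 ** n_qubits), dtype=np.complex128)
    states[:, 0] = 1.0
    return states

def rx(states, thetas):
    # apply RX(theta_b) to sample b of a batch of single-qubit states
    c = np.cos(thetas / 2)
    s = np.sin(thetas / 2)
    mats = np.empty((len(thetas), 2, 2), dtype=np.complex128)
    mats[:, 0, 0] = c
    mats[:, 0, 1] = -1j * s
    mats[:, 1, 0] = -1j * s
    mats[:, 1, 1] = c
    return np.einsum("bij,bj->bi", mats, states)

def expval_z(states):
    # <Z> = |amp_0|^2 - |amp_1|^2 for each sample
    probs = np.abs(states) ** 2
    return probs[:, 0] - probs[:, 1]

thetas = np.array([0.0, np.pi / 3, np.pi])
states = reset_states(len(thetas))  # shape (3, 2): one state per sample
states = rx(states, thetas)
print(expval_z(states))             # approximately cos(thetas)
```

A batch of a different size would not fit an array allocated for the previous batch, which is why every forward function in the examples below starts with self.qm.reset_states(x.shape[0]).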

pyvqnet.qnn.vqc also provides measurement interfaces such as MeasureAll, Probability, and Samples.

In addition, pyvqnet.qnn.vqc.QModule is the base class that user-defined, automatically differentiable variational circuit models must inherit from. As with a classical neural network model, the subclass defines the __init__ and forward functions.

When backward() is called on the final QTensor of the computation (typically the loss), the gradients of the variational circuit parameters in the QModule are computed by automatic differentiation, and the parameters can then be updated by a gradient-descent-based optimizer.
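As a minimal illustration of this update step, the NumPy sketch below trains one rotation angle so that ⟨Z⟩ = cos θ (the single-qubit RX case) matches a target value. The gradient is derived by hand with the chain rule instead of by automatic differentiation, and plain gradient descent stands in for an optimizer such as Adam; the function names are hypothetical.

```python
import numpy as np

def expval_z(theta):
    # <Z> after RX(theta)|0> is cos(theta)
    return np.cos(theta)

def loss(theta, target):
    # squared error between the circuit output and the target
    return (expval_z(theta) - target) ** 2

def grad(theta, target):
    # chain rule: dL/dtheta = 2 * (cos(theta) - target) * (-sin(theta))
    return 2.0 * (expval_z(theta) - target) * (-np.sin(theta))

theta, target, lr = 0.3, -0.5, 0.5
for _ in range(200):
    theta -= lr * grad(theta, target)  # gradient-descent update

print(theta, expval_z(theta))  # theta approaches 2*pi/3, where cos(theta) = -0.5
```

In the VQNet examples, the hand-written grad function is replaced by loss.backward(), and the update line by optimizer._step().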

Example of fitting a Fourier series on a GPU using variational quantum circuits

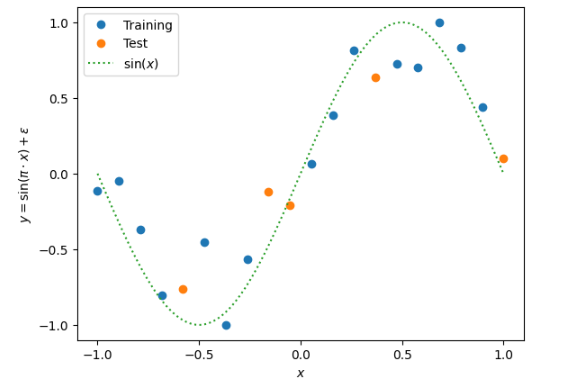

Quantum computers can be used for supervised learning by treating parameterized quantum circuits as models that map data inputs to predictions. While much work has been done on the practical implications of this approach, many important theoretical properties of these models remain unknown. This example studies how the strategy for encoding data into the model affects the expressive power of parameterized quantum circuits as function approximators.

This example relates common quantum machine learning models designed for quantum computers to Fourier series, following the paper The effect of data encoding on the expressive power of variational quantum machine learning models.

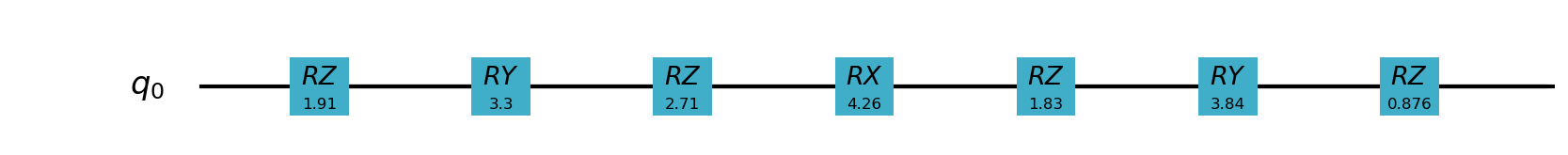

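The quantum model is

\[
f_{\theta}(x) = \langle 0 |\, U^{\dagger}(x,\theta)\, Z\, U(x,\theta)\, | 0 \rangle, \qquad U(x,\theta) = W(\theta^{(2)})\, S(x)\, W(\theta^{(1)}),
\]

where the data-encoding block \(S(x) = R_X(x)\) is implemented by vqc_s, each trainable block \(W(\theta)\) is a sequence of RZ, RY and RZ rotations implemented by vqc_w, and \(Z\) is the Pauli-Z observable measured by MeasureAll.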
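Because the input x enters this model through a single RX rotation, its output should be a degree-1 Fourier series in x. The NumPy sketch below checks this numerically: it simulates the single-qubit circuit W, RX(x), W with random angles, assuming the standard RX, RY and RZ matrix conventions, and fits the outputs with the basis {1, cos x, sin x}. It is an illustration only, not VQNet code, and the helper names are hypothetical.

```python
import numpy as np

def rx(a):
    return np.array([[np.cos(a / 2), -1j * np.sin(a / 2)],
                     [-1j * np.sin(a / 2), np.cos(a / 2)]])

def ry(a):
    return np.array([[np.cos(a / 2), -np.sin(a / 2)],
                     [np.sin(a / 2), np.cos(a / 2)]])

def rz(a):
    return np.diag([np.exp(-1j * a / 2), np.exp(1j * a / 2)])

def w(theta):
    # trainable block: RZ(theta[0]), then RY(theta[1]), then RZ(theta[2])
    return rz(theta[2]) @ ry(theta[1]) @ rz(theta[0])

def model(x, weights):
    # f(x) = <0| U^dagger Z U |0> with U = W(weights[1]) RX(x) W(weights[0])
    psi = w(weights[1]) @ rx(x) @ w(weights[0]) @ np.array([1.0, 0.0])
    z = np.diag([1.0, -1.0])
    return np.real(np.conj(psi) @ z @ psi)

rng = np.random.default_rng(0)
weights = 2 * np.pi * rng.random((2, 3))
xs = np.linspace(-6, 6, 70)
fx = np.array([model(x, weights) for x in xs])

# least-squares fit with the degree-1 Fourier basis {1, cos x, sin x}
basis = np.stack([np.ones_like(xs), np.cos(xs), np.sin(xs)], axis=1)
coef, *_ = np.linalg.lstsq(basis, fx, rcond=None)
residual = np.max(np.abs(basis @ coef - fx))
print(coef, residual)  # residual is at rounding-error level
```

Adding more encoding layers (a larger r) would allow higher frequencies, which is the relationship between data encoding and expressive power that the paper studies.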

Import the necessary libraries and define the variational quantum circuit model using pyvqnet.qnn.vqc:

```python
import numpy as np
from pyvqnet.nn import Module, Parameter
from pyvqnet.nn.loss import MeanSquaredError
from pyvqnet.optim.adam import Adam
from pyvqnet.tensor.tensor import QTensor
from pyvqnet import kfloat32
from pyvqnet.device import DEV_GPU
from pyvqnet.qnn.vqc import QMachine, QModule, rx, rz, ry, MeasureAll

np.random.seed(42)

degree = 1  # degree of the target function
scaling = 1  # scaling of the data
coeffs = [0.15 + 0.15j] * degree  # coefficients of non-zero frequencies
coeff0 = 0.1  # coefficient of zero frequency
r = 1  # number of data-encoding layers
# some random initial weights (the model below creates its own Parameter)
weights = 2 * np.pi * np.random.random(size=(r + 1, 3))


def target_function(x):
    """Generate a truncated Fourier series, where the data gets re-scaled."""
    res = coeff0
    for idx, coeff in enumerate(coeffs):
        exponent = np.complex128(scaling * (idx + 1) * x * 1j)
        conj_coeff = np.conjugate(coeff)
        res += coeff * np.exp(exponent) + conj_coeff * np.exp(-exponent)
    return np.real(res)


x = np.linspace(-6, 6, 70)
target_y = np.array([target_function(xx) for xx in x])


def vqc_s(qm, qubits, x):
    # data-encoding block S(x): one RX rotation by the input value
    rx(qm, qubits[0], x)


def vqc_w(qm, qubits, theta):
    # trainable block W(theta): RZ, RY, RZ rotations
    rz(qm, qubits[0], theta[0])
    ry(qm, qubits[0], theta[1])
    rz(qm, qubits[0], theta[2])


class QModel(QModule):
    def __init__(self, num_qubits):
        super().__init__()
        self.num_qubits = num_qubits
        self.qm = QMachine(num_qubits)
        # measure the Pauli-Z expectation value on every qubit
        pauli_str_list = []
        for position in range(num_qubits):
            pauli_str = {"Z" + str(position): 1.0}
            pauli_str_list.append(pauli_str)
        self.ma = MeasureAll(obs=pauli_str_list)
        # r + 1 = 2 trainable blocks with 3 angles each
        self.weights = Parameter((2, 3))

    def forward(self, x):
        # reinitialize the state vector to the current batch size
        self.qm.reset_states(x.shape[0])
        qubits = range(self.num_qubits)
        vqc_w(self.qm, qubits, self.weights[0])
        vqc_s(self.qm, qubits, x)
        vqc_w(self.qm, qubits, self.weights[1])
        return self.ma(self.qm)


class Model(Module):
    def __init__(self):
        super(Model, self).__init__()
        self.q_fourier_series = QModel(1)

    def forward(self, x):
        return self.q_fourier_series(x)
```
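With degree = 1, coeffs = [0.15 + 0.15j] and coeff0 = 0.1, the target has a simple closed form. Since \(c\,e^{ix} + \bar{c}\,e^{-ix} = 2\,\mathrm{Re}(c\,e^{ix}) = 2(\mathrm{Re}\,c \cos x - \mathrm{Im}\,c \sin x)\), the target is \(0.1 + 0.3\cos x - 0.3\sin x\), a degree-1 series that the single-encoding model above can represent. The standalone NumPy check below re-implements the same series with scaling = 1 and compares it with this formula.

```python
import numpy as np

coeff0 = 0.1
coeffs = [0.15 + 0.15j]  # degree = 1
scaling = 1

def target_function(x):
    # same truncated Fourier series as in the example above
    res = coeff0
    for idx, coeff in enumerate(coeffs):
        exponent = np.complex128(scaling * (idx + 1) * x * 1j)
        res += coeff * np.exp(exponent) + np.conjugate(coeff) * np.exp(-exponent)
    return np.real(res)

x = np.linspace(-6, 6, 70)
y = np.array([target_function(xx) for xx in x])
closed_form = 0.1 + 0.3 * np.cos(x) - 0.3 * np.sin(x)
print(np.max(np.abs(y - closed_form)))  # rounding-error level
```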

The training code below runs on the GPU. The model is moved to the GPU with toGPU, and the input data and labels are created on the GPU by passing device=DEV_GPU when constructing each QTensor.

Apart from this, the code is the same as for training on the CPU.

```python
def run():
    model = Model()
    # move the model parameters to the GPU
    model.toGPU(DEV_GPU)
    optimizer = Adam(model.parameters(), lr=0.5)
    batch_size = 2
    epoch = 5
    loss = MeanSquaredError()
    print("start training..............")
    model.train()
    max_steps = 50
    for i in range(epoch):
        sum_loss = 0
        count = 0
        for step in range(max_steps):
            optimizer.zero_grad()
            # select a random batch of data
            batch_index = np.random.randint(0, len(x), (batch_size,))
            x_batch = x[batch_index].reshape(batch_size, 1)
            y_batch = target_y[batch_index].reshape(batch_size, 1)
            # create the data and labels directly on the GPU
            data = QTensor(x_batch, dtype=kfloat32, device=DEV_GPU)
            label = QTensor(y_batch, dtype=kfloat32, device=DEV_GPU)
            result = model(data)
            loss_b = loss(label, result)
            loss_b.backward()
            optimizer._step()
            sum_loss += loss_b.item()
            count += batch_size
        print(f"epoch:{i}, #### loss:{sum_loss/count} ")


if __name__ == "__main__":
    run()

"""
epoch:0, #### loss:0.03909379681921564
epoch:1, #### loss:0.005479114687623223
epoch:2, #### loss:0.019679916104651057
epoch:3, #### loss:0.00861194647848606
epoch:4, #### loss:0.0035393996626953595
"""
```

HQCNN example of a hybrid quantum-classical neural network

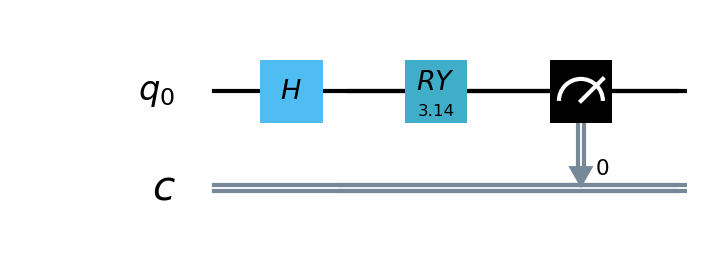

The HQCNN example uses pyvqnet.qnn.vqc to classify images from the MNIST dataset with a hybrid quantum-classical network. The quantum part is a simple 1-qubit circuit that takes the output of a classical neural network layer as input, encodes it with H and RY gates, and outputs the expectation value of the Pauli-Z observable.
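Assuming the standard gate conventions, this 1-qubit layer has a closed form: H maps |0⟩ to |+⟩, and applying RY(x) to |+⟩ gives ⟨Z⟩ = −sin x. The quantum layer therefore acts as a fixed nonlinearity, bounded in [−1, 1], on the scalar produced by the preceding linear layer. The NumPy sketch below checks this by simulating the circuit directly; it is an illustration, not VQNet code.

```python
import numpy as np

H = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)
Z = np.diag([1.0, -1.0])

def ry(a):
    return np.array([[np.cos(a / 2), -np.sin(a / 2)],
                     [np.sin(a / 2), np.cos(a / 2)]])

def hybrid_layer(x):
    # |0> -> H -> RY(x) -> measure <Z>
    psi = ry(x) @ H @ np.array([1.0, 0.0])
    return psi @ Z @ psi  # real amplitudes, so no conjugate is needed

xs = np.linspace(-3, 3, 13)
outs = np.array([hybrid_layer(x) for x in xs])
print(np.allclose(outs, -np.sin(xs)))  # True
```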

Since quantum circuits can be used together with classical neural networks for automatic differentiation, we can use VQNet’s 2D convolution layer Conv2D, pooling layer MaxPool2D, fully connected layer Linear and the quantum circuit just built to build a model.

The Net class inherits from the VQNet automatic differentiation module Module, and the Hybrid class inherits from QModule. Each defines its forward computation in its forward() function, so the whole model can be differentiated automatically.

In this example, the MNIST data is convolved, dimensionally reduced, quantum-encoded, and measured to obtain the final features required for the classification task.

The following is the neural network related code:

```python
import sys
sys.path.insert(0, "../")
import os
import struct
import gzip
from pyvqnet.nn.module import Module
from pyvqnet.nn.linear import Linear
from pyvqnet.nn.conv import Conv2D
from pyvqnet.nn import activation as F
from pyvqnet.nn.pooling import MaxPool2D
from pyvqnet.nn.loss import CategoricalCrossEntropy
from pyvqnet.optim.adam import Adam
from pyvqnet.data.data import data_generator
from pyvqnet.tensor import tensor
from pyvqnet.qnn.vqc import QMachine, QModule, hadamard, ry, MeasureAll
import numpy as np
import matplotlib.pyplot as plt
import matplotlib

try:
    matplotlib.use("TkAgg")
except Exception:
    print("Can not use matplot TkAgg")

try:
    import urllib.request
except ImportError:
    raise ImportError("You should use Python 3.x")


class Hybrid(QModule):
    def __init__(self):
        # calling the QModule constructor is required
        super(Hybrid, self).__init__()
        # measure the Pauli-Z expectation value of qubit 0
        self.measure = MeasureAll(obs={"Z0": 1})
        # use only one qubit to create a QMachine
        self.qm = QMachine(1)

    def forward(self, x):
        # reset_states must be called so the state vector matches the real batch size
        self.qm.reset_states(x.shape[0])
        hadamard(self.qm, [0])
        ry(self.qm, [0], x)
        return self.measure(q_machine=self.qm)


class Net(Module):
    """
    Hybrid quantum-classical neural network module
    """

    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = Conv2D(input_channels=1, output_channels=6, kernel_size=(5, 5),
                            stride=(1, 1), padding="valid")
        self.maxpool1 = MaxPool2D([2, 2], [2, 2], padding="valid")
        self.conv2 = Conv2D(input_channels=6, output_channels=16, kernel_size=(5, 5),
                            stride=(1, 1), padding="valid")
        self.maxpool2 = MaxPool2D([2, 2], [2, 2], padding="valid")
        self.fc1 = Linear(input_channels=256, output_channels=64)
        self.fc2 = Linear(input_channels=64, output_channels=1)
        self.hybrid = Hybrid()
        self.fc3 = Linear(input_channels=1, output_channels=2)

    def forward(self, x):
        x = F.ReLu()(self.conv1(x))  # (N, 6, 24, 24)
        x = self.maxpool1(x)         # (N, 6, 12, 12)
        x = F.ReLu()(self.conv2(x))  # (N, 16, 8, 8)
        x = self.maxpool2(x)         # (N, 16, 4, 4)
        x = tensor.flatten(x, 1)     # (N, 256)
        x = F.ReLu()(self.fc1(x))    # (N, 64)
        x = self.fc2(x)              # (N, 1): one rotation angle per sample
        x = self.hybrid(x)           # (N, 1): <Z> expectation value
        x = self.fc3(x)              # (N, 2): class scores
        return x
```
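The input size of fc1 (256) follows from the layer shapes. With "valid" padding and stride 1, a k × k convolution shrinks each side by k − 1, and each 2 × 2 max-pool with stride 2 halves it. A short plain-Python sketch of this arithmetic (conv_out is a hypothetical helper):

```python
def conv_out(size, kernel, stride=1):
    # output side length of a 'valid' convolution or pooling window
    return (size - kernel) // stride + 1

side = 28                           # MNIST images are 28 x 28
side = conv_out(side, 5)            # conv1: 24
side = conv_out(side, 2, stride=2)  # maxpool1: 12
side = conv_out(side, 5)            # conv2: 8
side = conv_out(side, 2, stride=2)  # maxpool2: 4
flat = 16 * side * side             # conv2 has 16 output channels
print(side, flat)  # 4 256
```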

The following is the data loading and training code:

```python
url_base = 'https://ossci-datasets.s3.amazonaws.com/mnist/'
key_file = {
    "train_img": "train-images-idx3-ubyte.gz",
    "train_label": "train-labels-idx1-ubyte.gz",
    "test_img": "t10k-images-idx3-ubyte.gz",
    "test_label": "t10k-labels-idx1-ubyte.gz"
}


def _download(dataset_dir, file_name):
    """
    Download mnist data if needed, then unzip it.
    """
    os.makedirs(dataset_dir, exist_ok=True)
    file_path = dataset_dir + "/" + file_name
    if not os.path.exists(file_path):
        print("Downloading " + file_name + " ... ")
        urllib.request.urlretrieve(url_base + file_name, file_path)
        print("Done")
    # strip ".gz" and rename "-idx" to ".idx" to match the names load_mnist expects
    file_path_ungz = file_path[:-3].replace("\\", "/").replace("-idx", ".idx")
    if not os.path.exists(file_path_ungz):
        with gzip.GzipFile(file_path) as file, open(file_path_ungz, "wb") as out:
            out.write(file.read())


def download_mnist(dataset_dir):
    for v in key_file.values():
        _download(dataset_dir, v)


def load_mnist(dataset="training_data", digits=np.arange(2), path="./examples"):
    """
    load mnist data
    """
    from array import array as pyarray
    download_mnist(path)
    if dataset == "training_data":
        fname_image = os.path.join(path, "train-images.idx3-ubyte").replace("\\", "/")
        fname_label = os.path.join(path, "train-labels.idx1-ubyte").replace("\\", "/")
    elif dataset == "testing_data":
        fname_image = os.path.join(path, "t10k-images.idx3-ubyte").replace("\\", "/")
        fname_label = os.path.join(path, "t10k-labels.idx1-ubyte").replace("\\", "/")
    else:
        raise ValueError("dataset must be 'training_data' or 'testing_data'")

    # label file: 8-byte header (magic number, count), then one byte per label
    with open(fname_label, "rb") as flbl:
        _, size = struct.unpack(">II", flbl.read(8))
        lbl = pyarray("b", flbl.read())
    # image file: 16-byte header (magic number, count, rows, cols), then pixels
    with open(fname_image, "rb") as fimg:
        _, size, rows, cols = struct.unpack(">IIII", fimg.read(16))
        img = pyarray("B", fimg.read())

    # keep only the requested digits
    ind = [k for k in range(size) if lbl[k] in digits]
    num = len(ind)
    images = np.zeros((num, rows, cols))
    labels = np.zeros((num, 1), dtype=int)
    for i in range(len(ind)):
        images[i] = np.array(img[ind[i] * rows * cols:(ind[i] + 1) * rows * cols]).reshape((rows, cols))
        labels[i] = lbl[ind[i]]
    return images, labels


def data_select(train_num, test_num):
    """
    Select data from mnist dataset.
    """
    x_train, y_train = load_mnist("training_data")
    x_test, y_test = load_mnist("testing_data")
    # keep the first train_num samples of digit 0 and of digit 1
    idx_train = np.append(
        np.where(y_train == 0)[0][:train_num],
        np.where(y_train == 1)[0][:train_num])
    x_train = x_train[idx_train]
    y_train = y_train[idx_train]
    x_train = x_train / 255
    y_train = np.eye(2)[y_train].reshape(-1, 2)  # one-hot labels
    # test set: keep only labels 0 and 1
    idx_test = np.append(
        np.where(y_test == 0)[0][:test_num],
        np.where(y_test == 1)[0][:test_num])
    x_test = x_test[idx_test]
    y_test = y_test[idx_test]
    x_test = x_test / 255
    y_test = np.eye(2)[y_test].reshape(-1, 2)
    return x_train, y_train, x_test, y_test


def run():
    """
    Run mnist train function
    """
    x_train, y_train, x_test, y_test = data_select(100, 50)
    model = Net()
    optimizer = Adam(model.parameters(), lr=0.005)
    loss_func = CategoricalCrossEntropy()
    epochs = 10
    train_loss_list = []
    val_loss_list = []
    train_acc_list = []
    val_acc_list = []
    for epoch in range(1, epochs):  # epochs 1 to 9
        total_loss = []
        model.train()
        batch_size = 3
        correct = 0
        n_train = 0
        for x, y in data_generator(x_train, y_train, batch_size=batch_size, shuffle=True):
            x = x.reshape(-1, 1, 28, 28)
            optimizer.zero_grad()
            output = model(x)
            loss = loss_func(y, output)
            loss_np = np.array(loss.data)
            np_output = np.array(output.data, copy=False)
            mask = (np_output.argmax(1) == y.argmax(1))
            correct += np.sum(np.array(mask))
            n_train += x.shape[0]  # the last batch may be smaller than batch_size
            loss.backward()
            optimizer._step()
            total_loss.append(loss_np)
        train_loss_list.append(np.sum(total_loss) / len(total_loss))
        train_acc_list.append(np.sum(correct) / n_train)
        print("{:.0f} loss is : {:.10f}".format(epoch, train_loss_list[-1]))

        model.eval()
        correct = 0
        n_eval = 0
        total_loss = []  # reset so the validation loss excludes training losses
        for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):
            x = x.reshape(-1, 1, 28, 28)
            output = model(x)
            loss = loss_func(y, output)
            loss_np = np.array(loss.data)
            np_output = np.array(output.data, copy=False)
            mask = (np_output.argmax(1) == y.argmax(1))
            correct += np.sum(np.array(mask))
            n_eval += 1
            total_loss.append(loss_np)
        print(f"Eval Accuracy: {correct / n_eval}")
        val_loss_list.append(np.sum(total_loss) / len(total_loss))
        val_acc_list.append(np.sum(correct) / n_eval)


if __name__ == "__main__":
    run()

"""
1 loss is : 0.6849292357
Eval Accuracy: 0.5
2 loss is : 0.4714432901
Eval Accuracy: 1.0
3 loss is : 0.2898814073
Eval Accuracy: 1.0
4 loss is : 0.1938255936
Eval Accuracy: 1.0
5 loss is : 0.1351640474
Eval Accuracy: 1.0
6 loss is : 0.0998594583
Eval Accuracy: 1.0
7 loss is : 0.0778947517
Eval Accuracy: 1.0
8 loss is : 0.0627411657
Eval Accuracy: 1.0
9 loss is : 0.0519049061
Eval Accuracy: 1.0
"""
```

Quantum data re-uploading algorithm example

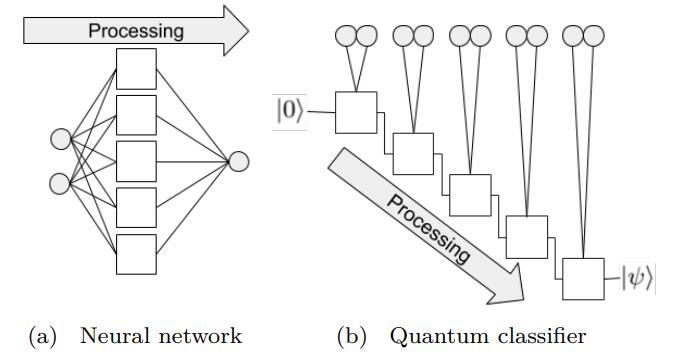

The following uses the interface under pyvqnet.qnn.vqc to build a quantum data re-uploading algorithm example.

In a neural network, each neuron receives information from all neurons in the previous layer (Figure a). In contrast, in a single-qubit quantum classifier, each information-processing unit receives the output of the previous unit together with the original input (Figure b).

Put simply, in a conventional quantum circuit, once the data has been uploaded, the result is obtained directly by applying several unitary transformations \(U(\theta_1,\theta_2,\theta_3)\).

In the quantum data re-uploading (QDRL) task, by contrast, the data is uploaded again before each unitary transformation.
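The re-uploading structure used by the model below (three layers, each applying RZ(x₀), RY(x₁), RZ(x₂) to one qubit followed by a trainable RZ, RY, RZ rotation) can be sketched in NumPy as follows. This is a plain state-vector simulation under standard gate conventions, not the VQNet implementation; the function names are illustrative.

```python
import numpy as np

def ry(a):
    return np.array([[np.cos(a / 2), -np.sin(a / 2)],
                     [np.sin(a / 2), np.cos(a / 2)]], dtype=complex)

def rz(a):
    return np.diag([np.exp(-1j * a / 2), np.exp(1j * a / 2)])

def zyz(a, b, c):
    # RZ(a), then RY(b), then RZ(c), written as a single matrix
    return rz(c) @ ry(b) @ rz(a)

def reupload_probs(x, w, n_layers=3):
    # x: 3 input features, w: 3 * n_layers trainable angles
    psi = np.array([1.0, 0.0], dtype=complex)
    for layer in range(n_layers):
        psi = zyz(*x) @ psi                           # re-upload the data
        psi = zyz(*w[3 * layer:3 * layer + 3]) @ psi  # trainable rotation
    return np.abs(psi) ** 2                           # probabilities of |0> and |1>

rng = np.random.default_rng(1)
p = reupload_probs(np.array([0.3, 0.7, 1.0]), rng.normal(size=9))
print(p, p.sum())  # the two probabilities sum to 1
```

The two output probabilities play the role of the two class scores returned by Probability in the VQNet model.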

QDRL vs. Classical Neural Network Schematic Diagram

Import the library and define the quantum neural network model:

```python
import sys
sys.path.insert(0, "../")
import numpy as np
from pyvqnet.nn.linear import Linear
from pyvqnet.qnn.vqc import QMachine, QModule, rz, ry, Probability
from pyvqnet.nn import Parameter
from pyvqnet.optim import sgd
from pyvqnet.nn.loss import CategoricalCrossEntropy
from pyvqnet.tensor.tensor import QTensor
from pyvqnet.nn.module import Module
import matplotlib.pyplot as plt
import matplotlib
from pyvqnet.data import data_generator as get_minibatch_data

try:
    matplotlib.use("TkAgg")
except Exception:
    print("Can not use matplot TkAgg")

np.random.seed(42)


class vmodel(QModule):
    def __init__(self, nq):
        super(vmodel, self).__init__()
        self.qm = QMachine(1)
        self.nq = nq
        # 3 layers x 3 trainable angles
        self.w = Parameter((9,))
        # output the measurement probabilities of |0> and |1>
        self.ma = Probability(wires=range(nq))

    def forward(self, x):
        self.qm.reset_states(x.shape[0])
        qm = self.qm
        w = self.w
        # each layer re-uploads the 3 input features, then applies a trainable rotation
        for layer in range(3):
            rz(qm, 0, x[:, [0]])
            ry(qm, 0, x[:, [1]])
            rz(qm, 0, x[:, [2]])
            rz(qm, 0, w[3 * layer])
            ry(qm, 0, w[3 * layer + 1])
            rz(qm, 0, w[3 * layer + 2])
        return self.ma(qm)


class Model(Module):
    def __init__(self):
        super(Model, self).__init__()
        self.pqc = vmodel(1)
        self.fc2 = Linear(2, 2)  # defined but not used in forward

    def forward(self, x):
        x = self.pqc(x)
        return x
```

The following is the data loading and training code:

```python
def circle(samples, reps=np.sqrt(1 / 2)):
    # label [1, 0] inside the circle of radius reps, [0, 1] outside
    data_x, data_y = [], []
    for _ in range(samples):
        x = np.random.rand(2)
        y = [0, 1]
        if np.linalg.norm(x) < reps:
            y = [1, 0]
        data_x.append(x)
        data_y.append(y)
    return np.array(data_x), np.array(data_y)


def plot_data(x, y, fig=None, ax=None):
    # y holds integer class labels (0 or 1)
    if fig is None:
        fig, ax = plt.subplots(1, 1, figsize=(5, 5))
    reds = y == 0
    blues = y == 1
    ax.scatter(x[reds, 0], x[reds, 1], c="red", s=20, edgecolor="k")
    ax.scatter(x[blues, 0], x[blues, 1], c="blue", s=20, edgecolor="k")
    ax.set_xlabel("$x_1$")
    ax.set_ylabel("$x_2$")


def get_score(pred, label):
    # number of samples whose predicted class matches the one-hot label
    pred, label = np.array(pred.data), np.array(label.data)
    score = np.sum(np.argmax(pred, axis=1) == np.argmax(label, 1))
    return score


model = Model()
optimizer = sgd.SGD(model.parameters(), lr=1)


def train():
    """
    Main function for train qdrl model
    """
    batch_size = 5
    model.train()
    x_train, y_train = circle(500)
    # append a constant 1 so each sample has 3 features, one per rotation angle
    x_train = np.hstack((x_train, np.ones((x_train.shape[0], 1))))  # 500*3
    epoch = 10
    loss_fun = CategoricalCrossEntropy()
    print("start training...........")
    for i in range(epoch):
        accuracy = 0
        count = 0
        loss = 0
        for data, label in get_minibatch_data(x_train, y_train, batch_size):
            optimizer.zero_grad()
            data, label = QTensor(data), QTensor(label)
            output = model(data)
            batch_loss = loss_fun(label, output)
            batch_loss.backward()
            optimizer._step()
            accuracy += get_score(output, label)
            loss += batch_loss.item()
            count += batch_size
        print(f"epoch:{i}, train_accuracy_for_each_batch:{accuracy/count}")
        print(f"epoch:{i}, train_loss_for_each_batch:{loss/count}")


def test():
    batch_size = 5
    model.eval()
    print("start eval...................")
    x_test, y_test = circle(500)
    test_accuracy = 0
    count = 0
    x_test = np.hstack((x_test, np.ones((x_test.shape[0], 1))))
    for test_data, test_label in get_minibatch_data(x_test, y_test, batch_size):
        test_data, test_label = QTensor(test_data), QTensor(test_label)
        output = model(test_data)
        test_accuracy += get_score(output, test_label)
        count += batch_size
    print(f"test_accuracy:{test_accuracy/count}")


if __name__ == "__main__":
    train()
    test()

"""
start training...........
epoch:0, train_accuracy_for_each_batch:0.828
epoch:0, train_loss_for_each_batch:0.10570884662866592
epoch:1, train_accuracy_for_each_batch:0.866
epoch:1, train_loss_for_each_batch:0.09770179575681687
epoch:2, train_accuracy_for_each_batch:0.878
epoch:2, train_loss_for_each_batch:0.09732778465747834
epoch:3, train_accuracy_for_each_batch:0.86
epoch:3, train_loss_for_each_batch:0.09763735890388489
epoch:4, train_accuracy_for_each_batch:0.864
epoch:4, train_loss_for_each_batch:0.09772944855690002
epoch:5, train_accuracy_for_each_batch:0.848
epoch:5, train_loss_for_each_batch:0.098575089097023
epoch:6, train_accuracy_for_each_batch:0.878
epoch:6, train_loss_for_each_batch:0.09734477716684341
epoch:7, train_accuracy_for_each_batch:0.878
epoch:7, train_loss_for_each_batch:0.09644640237092972
epoch:8, train_accuracy_for_each_batch:0.864
epoch:8, train_loss_for_each_batch:0.09722568172216416
epoch:9, train_accuracy_for_each_batch:0.862
epoch:9, train_loss_for_each_batch:0.09842782151699066
start eval...................
test_accuracy:0.934
"""
```

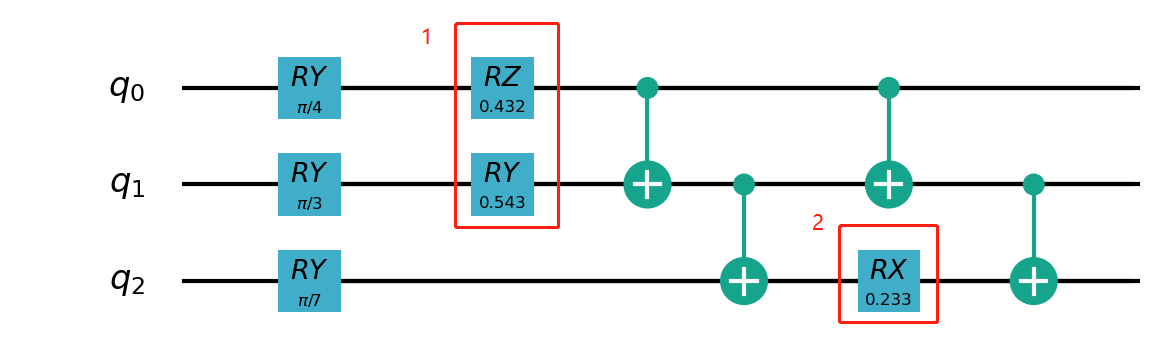
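The circle helper draws points uniformly from the unit square and labels a point [1, 0] when it lies inside the quarter circle of radius √(1/2) centred at the origin. That region has area π(1/2)/4 = π/8 ≈ 0.39, so roughly 39% of the samples belong to that class, and always predicting the other class would score about 61%: a useful baseline when reading the accuracies above. A quick NumPy check (the sample count is arbitrary):

```python
import numpy as np

rng = np.random.default_rng(0)
points = rng.random((100_000, 2))  # uniform in the unit square
inside = np.linalg.norm(points, axis=1) < np.sqrt(1 / 2)
print(inside.mean(), np.pi / 8)    # both close to 0.39
```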

Circuit-centric quantum classifiers algorithm example

This example uses pyvqnet.qnn.vqc to implement the variational quantum circuit from the paper Circuit-centric quantum classifiers for a binary classification task.

The task is to determine the parity of a 4-bit binary string, that is, whether it contains an odd or an even number of 1s. The bit string is encoded into the qubits, and the variational parameters of the circuit are optimized so that the Pauli-Z measurement value of the circuit indicates the parity of the input.
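Reading the training set from the code below five values at a time (four input bits followed by the label), the label equals the parity of the four bits. A short NumPy check on the same data:

```python
import numpy as np

qvc_train_data = [
    0, 1, 0, 0, 1, 0, 1, 0, 1, 0, 0, 1, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 1,
    1, 0, 0, 1, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1,
    1, 1, 1, 0, 1, 1, 1, 1, 1, 0
]
rows = np.array(qvc_train_data).reshape(-1, 5)
bits, labels = rows[:, :-1], rows[:, -1]
parity = bits.sum(axis=1) % 2  # 1 if the number of 1s is odd
print(np.array_equal(parity, labels))  # True
```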

A variational quantum circuit is usually built from a subcircuit: a basic circuit block that is repeated in layers to form a more complex variational circuit.

Our circuit layer consists of rotation gates on every qubit followed by CNOT gates that entangle each qubit with its neighbor, with the last qubit coupled back to the first to close a ring.
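To see what this ring of CNOT gates (get_cnot in the code below) does, the NumPy sketch stores a 4-qubit state as an array with one axis of size 2 per qubit and applies CNOT(0, 1), CNOT(1, 2), CNOT(2, 3) and the closing CNOT(3, 0) to the basis state |1000⟩. It is a standalone illustration with hypothetical helper names, using the convention that axis i is qubit i.

```python
import numpy as np

def apply_cnot(state, control, target):
    # flip the target axis on the slice where the control qubit is 1
    state = state.copy()
    idx = [slice(None)] * state.ndim
    idx[control] = 1
    sub = state[tuple(idx)]
    # removing the control axis shifts later axes down by one
    t = target if target < control else target - 1
    state[tuple(idx)] = np.flip(sub, axis=t).copy()
    return state

def cnot_ring(state, n):
    for i in range(n - 1):
        state = apply_cnot(state, i, i + 1)
    return apply_cnot(state, n - 1, 0)  # close the ring

n = 4
state = np.zeros((2,) * n)
state[1, 0, 0, 0] = 1.0         # basis state |1000>
out = cnot_ring(state, n)
print(np.argwhere(out == 1.0))  # [[0 1 1 1]], i.e. |0111>
```

The 1 on qubit 0 propagates along the chain (|1100⟩, |1110⟩, |1111⟩), and the closing gate then flips qubit 0, so every qubit ends up influencing its neighbor.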

We also need a circuit to encode classical data into quantum states, so that the output measured by the circuit is related to the input.

In this case, we encode each bit of the input into the corresponding qubit, using the bit value as the angle of RZ, RY and RZ rotations on that qubit. For example, the input 1101 is encoded into 4 qubits.
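Because the bit value b ∈ {0, 1} is used directly as a rotation angle in radians, a 1 bit is not mapped to |1⟩ exactly. Assuming standard gate conventions, the RZ gates only change phases, so the probability of measuring 1 on that qubit is sin²(b/2): 0 for a 0 bit and about 0.23 for a 1 bit. A NumPy sketch of the single-qubit encoding:

```python
import numpy as np

def ry(a):
    return np.array([[np.cos(a / 2), -np.sin(a / 2)],
                     [np.sin(a / 2), np.cos(a / 2)]], dtype=complex)

def rz(a):
    return np.diag([np.exp(-1j * a / 2), np.exp(1j * a / 2)])

def encode_bit(b):
    # RZ(b), then RY(b), then RZ(b) applied to |0>
    return rz(b) @ ry(b) @ rz(b) @ np.array([1.0, 0.0], dtype=complex)

for b in (0, 1):
    p1 = np.abs(encode_bit(b)[1]) ** 2
    print(b, p1, np.sin(b / 2) ** 2)  # the last two columns agree
```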

```python
import sys
sys.path.insert(0, "../")
import random
import numpy as np
import pyvqnet
from pyvqnet.optim import sgd
from pyvqnet.dtype import kfloat32, kint64
from pyvqnet.qnn.vqc import QMachine, CNOT, qmeasure, qcircuit, VQC_RotCircuit
from pyvqnet.tensor import QTensor, tensor
from pyvqnet.nn import Parameter

random.seed(1234)


class QModel(pyvqnet.nn.Module):
    def __init__(self, num_wires, dtype):
        super(QModel, self).__init__()
        self._num_wires = num_wires
        self._dtype = dtype
        self.qm = QMachine(num_wires, dtype=dtype)
        # 2 layers x 4 qubits x 3 rotation angles
        self.w = Parameter((2, 4, 3), initializer=pyvqnet.utils.initializer.quantum_uniform)
        self.cnot = CNOT(wires=[0, 1])

    def forward(self, x, *args, **kwargs):
        self.qm.reset_states(x.shape[0])

        def get_cnot(nqubits, qm):
            # entangle neighboring qubits in a ring: 0->1, 1->2, ..., (n-1)->0
            for i in range(len(nqubits) - 1):
                CNOT(wires=[nqubits[i], nqubits[i + 1]])(q_machine=qm)
            CNOT(wires=[nqubits[len(nqubits) - 1], nqubits[0]])(q_machine=qm)

        def build_circuit(weights, xx, nqubits, qm):
            def Rot(weights_j, nqubits, qm):
                VQC_RotCircuit(qm, nqubits, weights_j)

            def basisstate(qm, xx, nqubits):
                # encode bit i of each sample as the angle of RZ, RY, RZ on qubit i
                for i in nqubits:
                    qcircuit.rz(q_machine=qm, wires=i, params=xx[:, [i]])
                    qcircuit.ry(q_machine=qm, wires=i, params=xx[:, [i]])
                    qcircuit.rz(q_machine=qm, wires=i, params=xx[:, [i]])

            basisstate(qm, xx, nqubits)
            # each layer: a rotation on every qubit, then the CNOT ring
            for i in range(weights.shape[0]):
                weights_i = weights[i, :, :]
                for j in range(len(nqubits)):
                    weights_j = weights_i[j]
                    Rot(weights_j, nqubits[j], qm)
                get_cnot(nqubits, qm)

        build_circuit(self.w, x, range(4), self.qm)
        # the model output is the Pauli-Z expectation value of qubit 0
        return qmeasure.MeasureAll(obs={'Z0': 1})(self.qm)
```

The data loading and model training code:

```python
qvc_train_data = [
    0, 1, 0, 0, 1, 0, 1, 0, 1, 0, 0, 1, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 1,
    1, 0, 0, 1, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1,
    1, 1, 1, 0, 1, 1, 1, 1, 1, 0
]
qvc_test_data = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 1, 0, 1, 0, 0, 1, 1, 0]
batch_size = 5


def dataloader(data, label, batch_size, shuffle=True):
    if shuffle:
        for _ in range(len(data) // batch_size):
            random_index = np.random.randint(0, len(data), (batch_size, 1))
            yield data[random_index].reshape(batch_size, -1), label[random_index].reshape(batch_size, -1)
    else:
        for i in range(0, len(data) - batch_size + 1, batch_size):
            yield data[i:i + batch_size], label[i:i + batch_size]


def get_accuary(result, label):
    # NOTE: result and label each have a single column here, so argmax(axis=1)
    # is always 0 and this score counts every sample as correct
    result, label = np.array(result.data), np.array(label.data)
    score = np.sum(np.argmax(result, axis=1) == np.argmax(label, 1))
    return score


def vqc_get_data(dataset_str):
    """
    Transform data to valid form: each row holds 4 input bits and 1 parity label
    """
    if dataset_str == "train":
        datasets = np.array(qvc_train_data)
    else:
        datasets = np.array(qvc_test_data)
    datasets = datasets.reshape([-1, 5])
    data = datasets[:, :-1]
    label = datasets[:, -1].astype(int)
    label = label.reshape(-1, 1)
    return data, label


def vqc_square_loss(labels, predictions):
    # mean squared error over the batch
    loss = (labels - predictions) ** 2
    loss = tensor.mean(loss, axis=0)
    return loss


def run2():
    """
    Main run function
    """
    model = QModel(4, pyvqnet.kcomplex64)
    optimizer = sgd.SGD(model.parameters(), lr=0.5)
    epoch = 25
    print("start training..............")
    model.train()
    datas, labels = vqc_get_data("train")
    for i in range(epoch):
        sum_loss = 0
        count = 0
        accuary = 0
        for data, label in dataloader(datas, labels, batch_size, False):
            optimizer.zero_grad()
            data, label = QTensor(data, dtype=kfloat32), QTensor(label, dtype=kint64)
            result = model(data)
            loss_b = vqc_square_loss(label, result)
            loss_b.backward()
            optimizer._step()
            sum_loss += loss_b.item()
            count += batch_size
            accuary += get_accuary(result, label)
        print(f"epoch:{i}, #### loss:{sum_loss/count} #####accuray:{accuary/count}")


if __name__ == "__main__":
    run2()

"""
epoch:0, #### loss:0.07805998176336289 #####accuray:1.0
epoch:1, #### loss:0.07268960326910019 #####accuray:1.0
epoch:2, #### loss:0.06934810429811478 #####accuray:1.0
epoch:3, #### loss:0.06652230024337769 #####accuray:1.0
epoch:4, #### loss:0.06363258957862854 #####accuray:1.0
epoch:5, #### loss:0.0604777917265892 #####accuray:1.0
epoch:6, #### loss:0.05711844265460968 #####accuray:1.0
epoch:7, #### loss:0.053814482688903806 #####accuray:1.0
epoch:8, #### loss:0.05088095813989639 #####accuray:1.0
epoch:9, #### loss:0.04851257503032684 #####accuray:1.0
epoch:10, #### loss:0.04672074168920517 #####accuray:1.0
epoch:11, #### loss:0.04540069997310638 #####accuray:1.0
epoch:12, #### loss:0.04442296177148819 #####accuray:1.0
epoch:13, #### loss:0.04368099868297577 #####accuray:1.0
epoch:14, #### loss:0.04310029000043869 #####accuray:1.0
epoch:15, #### loss:0.04263183027505875 #####accuray:1.0
epoch:16, #### loss:0.04224379360675812 #####accuray:1.0
epoch:17, #### loss:0.041915199160575865 #####accuray:1.0
epoch:18, #### loss:0.04163179695606232 #####accuray:1.0
epoch:19, #### loss:0.041383542120456696 #####accuray:1.0
epoch:20, #### loss:0.0411631852388382 #####accuray:1.0
epoch:21, #### loss:0.04096531867980957 #####accuray:1.0
epoch:22, #### loss:0.04078584611415863 #####accuray:1.0
epoch:23, #### loss:0.0406215637922287 #####accuray:1.0
epoch:24, #### loss:0.040470016002655027 #####accuray:1.0
"""
```

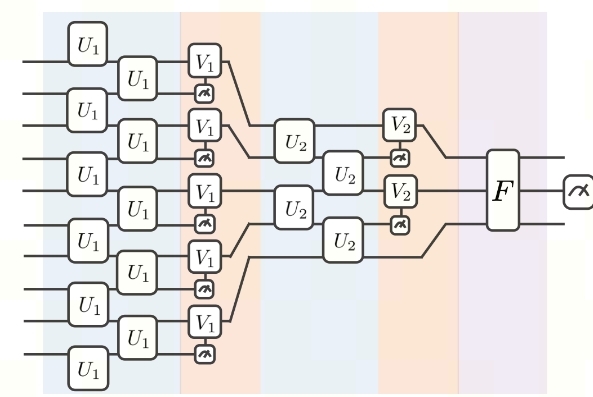

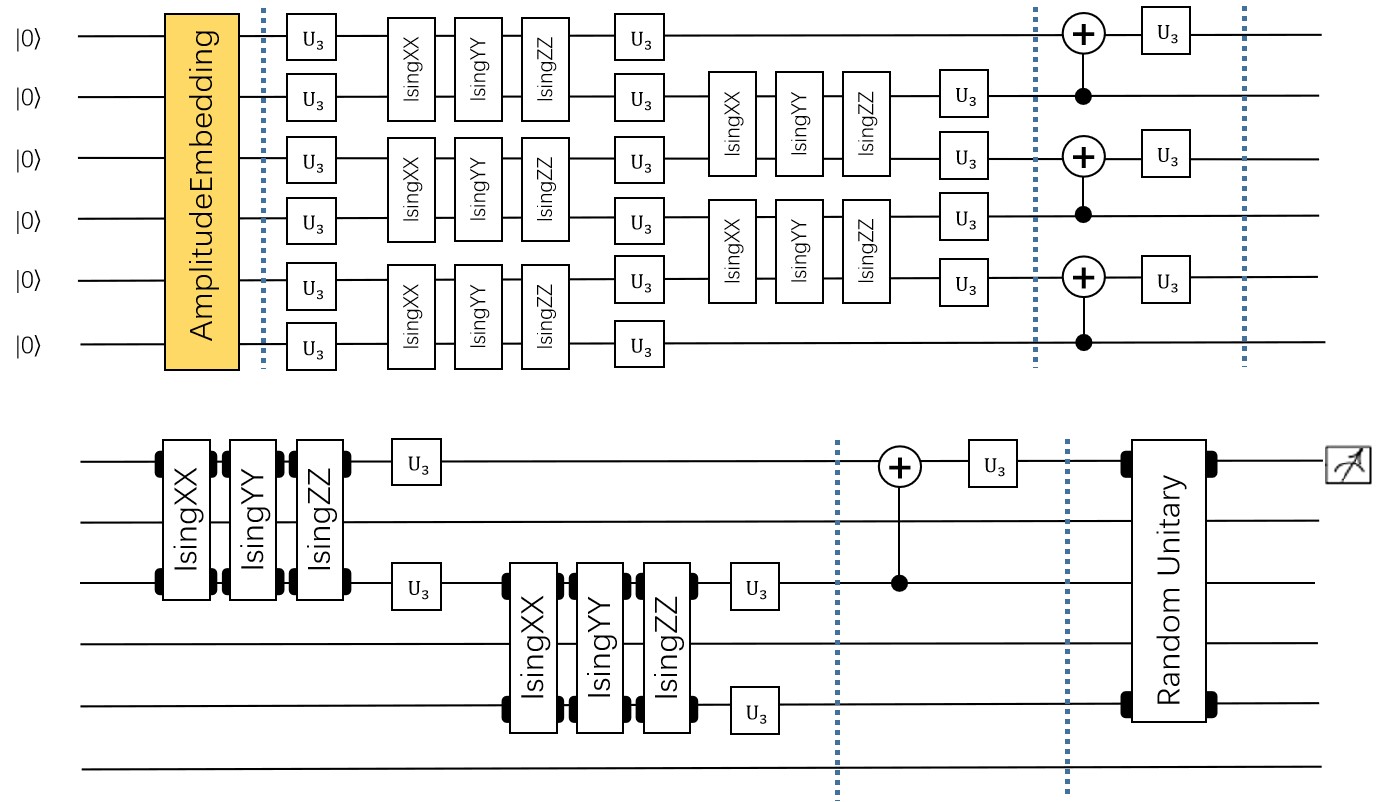

Variational Shadow Quantum Learning for Classification model example

This example uses the variational quantum circuit interface of pyvqnet.qnn.vqc to build a binary classification model. Its classification accuracy is similar to that of a comparable classical neural network, while the quantum circuit uses far fewer parameters.

The example reproduces the model from the paper Variational Shadow Quantum Learning for Classification.

The overall structure of the VSQL model is as follows:

Define the variational quantum circuit model:

```python
import sys
sys.path.insert(0, "../")
import os
import os.path
import struct
import gzip
from pyvqnet.nn.module import Module
from pyvqnet.nn.loss import CategoricalCrossEntropy
from pyvqnet.optim.adam import Adam
from pyvqnet.data.data import data_generator
from pyvqnet.tensor import tensor
from pyvqnet.nn.linear import Linear
import numpy as np
import matplotlib.pyplot as plt
import matplotlib
from pyvqnet.qnn.vqc import rx, ry, cnot, vqc_amplitude_embedding, QMachine, QModule, MeasureAll
from pyvqnet.nn import Parameter

try:
    matplotlib.use("TkAgg")
except Exception:
    print("Can not use matplot TkAgg")

try:
    import urllib.request
except ImportError:
    raise ImportError("You should use Python 3.x")


class VQC_VSQL(QModule):
    def __init__(self, nq):
        super(VQC_VSQL, self).__init__()
        self.qm = QMachine(nq)
        self.nq = nq
        # depth and n_qsc are global settings defined with the data-loading code
        self.w = Parameter(((depth + 1) * 3 * n_qsc,))

    def forward(self, x):
        def get_pauli_str(n_start, n_qsc):
            # Pauli-X observable on each wire of the window [n_start, n_start + n_qsc)
            D = {}
            D['wires'] = [i for i in range(n_start, n_start + n_qsc)]
            D["observables"] = ["X" for i in range(n_start, n_start + n_qsc)]
            D["coefficient"] = [1 for i in range(n_start, n_start + n_qsc)]
            return D

        # reset the states to the batch size
        self.qm.reset_states(x.shape[0])
        weights = self.w.reshape([depth + 1, 3, n_qsc])

        def subcir(qm, weights, qlist, depth, n_qsc, n_start):
            # local circuit on the n_qsc qubits starting at n_start
            for i in range(n_qsc):
                rx(qm, qlist[n_start + i], weights[0, 0, i])
                ry(qm, qlist[n_start + i], weights[0, 1, i])
                rx(qm, qlist[n_start + i], weights[0, 2, i])
            for repeat in range(1, depth + 1):
                for i in range(n_qsc - 1):
                    cnot(qm, [qlist[n_start + i], qlist[n_start + i + 1]])
                cnot(qm, [qlist[n_start + n_qsc - 1], qlist[n_start]])
                for i in range(n_qsc):
                    ry(qm, qlist[n_start + i], weights[repeat, 1, i])

        qm = self.qm
        vqc_amplitude_embedding(x, q_machine=qm)
        f_i = []
        # slide the local circuit over every window of n_qsc neighboring qubits
        for st in range(n - n_qsc + 1):
            psd = get_pauli_str(st, n_qsc)
            subcir(qm, weights, range(self.nq), depth, n_qsc, st)
            ma = MeasureAll(obs=psd)
            f_ij = ma(qm)
            f_i.append(f_ij)
        return tensor.cat(f_i, 1)  # -> (batch, n - n_qsc + 1)


class QModel(Module):
    """
    Model of VSQL
    """

    def __init__(self):
        super().__init__()
        self.vq = VQC_VSQL(n)
        self.fc = Linear(n - n_qsc + 1, 2)

    def forward(self, x):
        x = self.vq(x)
        x = self.fc(x)
        return x
```

Define the data loading and training process code:

url_base = 'https://ossci-datasets.s3.amazonaws.com/mnist/'

key_file = {

"train_img": "train-images-idx3-ubyte.gz",

"train_label": "train-labels-idx1-ubyte.gz",

"test_img": "t10k-images-idx3-ubyte.gz",

"test_label": "t10k-labels-idx1-ubyte.gz"

}

#GLOBAL VAR

n = 10

n_qsc = 2

depth = 1

def _download(dataset_dir, file_name):

"""

Download function for mnist dataset file

"""

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

file_path_ungz = file_path_ungz.replace("-idx", ".idx")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

print("Done")

def download_mnist(dataset_dir):

for v in key_file.values():

_download(dataset_dir, v)

if not os.path.exists("./result"):

os.makedirs("./result")

else:

pass

def load_mnist(dataset="training_data",

digits=np.arange(2),

path="examples"):

"""

load mnist data

"""

from array import array as pyarray

download_mnist(path)

if dataset == "training_data":

fname_image = os.path.join(path, "train-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "train-labels.idx1-ubyte").replace(

"\\", "/")

elif dataset == "testing_data":

fname_image = os.path.join(path, "t10k-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "t10k-labels.idx1-ubyte").replace(

"\\", "/")

else:

raise ValueError("dataset must be 'training_data' or 'testing_data'")

flbl = open(fname_label, "rb")

_, size = struct.unpack(">II", flbl.read(8))

lbl = pyarray("b", flbl.read())

flbl.close()

fimg = open(fname_image, "rb")

_, size, rows, cols = struct.unpack(">IIII", fimg.read(16))

img = pyarray("B", fimg.read())

fimg.close()

ind = [k for k in range(size) if lbl[k] in digits]

num = len(ind)

images = np.zeros((num, rows, cols),dtype=np.float32)

labels = np.zeros((num, 1), dtype=int)

for i in range(len(ind)):

images[i] = np.array(img[ind[i] * rows * cols:(ind[i] + 1) * rows *

cols]).reshape((rows, cols))

labels[i] = lbl[ind[i]]

return images, labels

def show_image():

image, _ = load_mnist()

for img in range(len(image)):

plt.imshow(image[img])

plt.show()

def run_vsql():

"""

VQSL MODEL

"""

digits = [0, 1]

x_train, y_train = load_mnist("training_data", digits)

x_train = x_train / 255

y_train = y_train.reshape(-1, 1)

y_train = np.eye(len(digits))[y_train].reshape(-1, len(digits)).astype(np.int64)

x_test, y_test = load_mnist("testing_data", digits)

x_test = x_test / 255

y_test = y_test.reshape(-1, 1)

y_test = np.eye(len(digits))[y_test].reshape(-1, len(digits)).astype(np.int64)

x_train_list = []

x_test_list = []

for i in range(x_train.shape[0]):

x_train_list.append(

np.pad(x_train[i, :, :].flatten(), (0, 240),

constant_values=(0, 0)))

x_train = np.array(x_train_list)

for i in range(x_test.shape[0]):

x_test_list.append(

np.pad(x_test[i, :, :].flatten(), (0, 240),

constant_values=(0, 0)))

x_test = np.array(x_test_list)

x_train = x_train[:500]

y_train = y_train[:500]

x_test = x_test[:100]

y_test = y_test[:100]

print("model start")

model = QModel()

optimizer = Adam(model.parameters(), lr=0.1)

model.train()

result_file = open("./result/vqslrlt.txt", "w")

for epoch in range(1, 3):

model.train()

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 5

correct = 0

for x, y in data_generator(x_train,

y_train,

batch_size=batch_size,

shuffle=True):

optimizer.zero_grad()

x = x.reshape(-1, 1024)  # -1 infers the batch size, which also covers a smaller final batch

output = model(x)

cceloss = CategoricalCrossEntropy()

loss = cceloss(y, output)

loss.backward()

optimizer._step()

full_loss += loss.item()

n_loss += batch_size

np_output = np.array(output.data, copy=False)

mask = np_output.argmax(1) == y.argmax(1)

correct += sum(mask)

print(f" n_loss {n_loss} Train Accuracy: {correct/n_loss} ")

print(f"Train Accuracy: {correct/n_loss} ")

print(f"Epoch: {epoch}, Loss: {full_loss / n_loss}")

result_file.write(f"{epoch}\t{full_loss / n_loss}\t{correct/n_loss}\t")

# Evaluation

model.eval()

print("eval")

correct = 0

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 1

for x, y in data_generator(x_test,

y_test,

batch_size=batch_size,

shuffle=True):

x = x.reshape(1, 1024)

output = model(x)

cceloss = CategoricalCrossEntropy()

loss = cceloss(y, output)

full_loss += loss.item()

np_output = np.array(output.data, copy=False)

mask = np_output.argmax(1) == y.argmax(1)

correct += sum(mask)

n_eval += 1

n_loss += 1

print(f"Eval Accuracy: {correct/n_eval}")

result_file.write(f"{full_loss / n_loss}\t{correct/n_eval}\n")

result_file.close()

del model

print("\ndone vqsl\n")

if __name__ == "__main__":

run_vsql()

"""

model start

n_loss 5 Train Accuracy: 0.4

n_loss 10 Train Accuracy: 0.4

n_loss 15 Train Accuracy: 0.4

n_loss 20 Train Accuracy: 0.35

n_loss 25 Train Accuracy: 0.44

n_loss 30 Train Accuracy: 0.43333333333333335

n_loss 35 Train Accuracy: 0.4857142857142857

n_loss 40 Train Accuracy: 0.525

n_loss 45 Train Accuracy: 0.5777777777777777

n_loss 50 Train Accuracy: 0.58

n_loss 55 Train Accuracy: 0.5818181818181818

n_loss 60 Train Accuracy: 0.5833333333333334

n_loss 65 Train Accuracy: 0.5692307692307692

n_loss 70 Train Accuracy: 0.5714285714285714

n_loss 75 Train Accuracy: 0.5733333333333334

n_loss 80 Train Accuracy: 0.6

n_loss 85 Train Accuracy: 0.611764705882353

n_loss 90 Train Accuracy: 0.6111111111111112

n_loss 95 Train Accuracy: 0.631578947368421

n_loss 100 Train Accuracy: 0.63

n_loss 105 Train Accuracy: 0.638095238095238

n_loss 110 Train Accuracy: 0.6545454545454545

n_loss 115 Train Accuracy: 0.6434782608695652

n_loss 120 Train Accuracy: 0.65

n_loss 125 Train Accuracy: 0.664

n_loss 130 Train Accuracy: 0.6692307692307692

n_loss 135 Train Accuracy: 0.674074074074074

n_loss 140 Train Accuracy: 0.6857142857142857

n_loss 145 Train Accuracy: 0.6827586206896552

n_loss 150 Train Accuracy: 0.6933333333333334

n_loss 155 Train Accuracy: 0.6967741935483871

n_loss 160 Train Accuracy: 0.7

n_loss 165 Train Accuracy: 0.696969696969697

n_loss 170 Train Accuracy: 0.7058823529411765

n_loss 175 Train Accuracy: 0.7142857142857143

n_loss 180 Train Accuracy: 0.7222222222222222

n_loss 185 Train Accuracy: 0.7297297297297297

n_loss 190 Train Accuracy: 0.7368421052631579

n_loss 195 Train Accuracy: 0.7435897435897436

n_loss 200 Train Accuracy: 0.74

n_loss 205 Train Accuracy: 0.7463414634146341

n_loss 210 Train Accuracy: 0.7476190476190476

n_loss 215 Train Accuracy: 0.7488372093023256

n_loss 220 Train Accuracy: 0.7545454545454545

n_loss 225 Train Accuracy: 0.76

n_loss 230 Train Accuracy: 0.7565217391304347

n_loss 235 Train Accuracy: 0.7617021276595745

n_loss 240 Train Accuracy: 0.7666666666666667

n_loss 245 Train Accuracy: 0.7714285714285715

n_loss 250 Train Accuracy: 0.776

n_loss 255 Train Accuracy: 0.7803921568627451

n_loss 260 Train Accuracy: 0.7846153846153846

n_loss 265 Train Accuracy: 0.7849056603773585

n_loss 270 Train Accuracy: 0.7888888888888889

n_loss 275 Train Accuracy: 0.7927272727272727

n_loss 280 Train Accuracy: 0.7892857142857143

n_loss 285 Train Accuracy: 0.7929824561403509

n_loss 290 Train Accuracy: 0.7965517241379311

n_loss 295 Train Accuracy: 0.8

n_loss 300 Train Accuracy: 0.8

n_loss 305 Train Accuracy: 0.8032786885245902

n_loss 310 Train Accuracy: 0.8064516129032258

n_loss 315 Train Accuracy: 0.8095238095238095

n_loss 320 Train Accuracy: 0.8125

n_loss 325 Train Accuracy: 0.8153846153846154

n_loss 330 Train Accuracy: 0.8181818181818182

n_loss 335 Train Accuracy: 0.8208955223880597

n_loss 340 Train Accuracy: 0.8235294117647058

n_loss 345 Train Accuracy: 0.8260869565217391

n_loss 350 Train Accuracy: 0.8285714285714286

n_loss 355 Train Accuracy: 0.8309859154929577

n_loss 360 Train Accuracy: 0.8277777777777777

n_loss 365 Train Accuracy: 0.8301369863013699

n_loss 370 Train Accuracy: 0.8324324324324325

n_loss 375 Train Accuracy: 0.8346666666666667

n_loss 380 Train Accuracy: 0.8368421052631579

n_loss 385 Train Accuracy: 0.8389610389610389

n_loss 390 Train Accuracy: 0.841025641025641

n_loss 395 Train Accuracy: 0.8430379746835444

n_loss 400 Train Accuracy: 0.845

n_loss 405 Train Accuracy: 0.8469135802469135

n_loss 410 Train Accuracy: 0.848780487804878

n_loss 415 Train Accuracy: 0.8506024096385543

n_loss 420 Train Accuracy: 0.8523809523809524

n_loss 425 Train Accuracy: 0.8541176470588235

n_loss 430 Train Accuracy: 0.8558139534883721

n_loss 435 Train Accuracy: 0.8574712643678161

n_loss 440 Train Accuracy: 0.8590909090909091

n_loss 445 Train Accuracy: 0.8606741573033708

n_loss 450 Train Accuracy: 0.8622222222222222

n_loss 455 Train Accuracy: 0.8637362637362638

n_loss 460 Train Accuracy: 0.8652173913043478

n_loss 465 Train Accuracy: 0.864516129032258

n_loss 470 Train Accuracy: 0.8659574468085106

n_loss 475 Train Accuracy: 0.8673684210526316

n_loss 480 Train Accuracy: 0.8666666666666667

n_loss 485 Train Accuracy: 0.8680412371134021

n_loss 490 Train Accuracy: 0.8673469387755102

n_loss 495 Train Accuracy: 0.8686868686868687

n_loss 500 Train Accuracy: 0.87

Train Accuracy: 0.87

Epoch: 1, Loss: 0.0713323565647006

eval

Eval Accuracy: 0.95

n_loss 5 Train Accuracy: 1.0

n_loss 10 Train Accuracy: 1.0

n_loss 15 Train Accuracy: 1.0

n_loss 20 Train Accuracy: 1.0

n_loss 25 Train Accuracy: 1.0

n_loss 30 Train Accuracy: 0.9333333333333333

n_loss 35 Train Accuracy: 0.9428571428571428

n_loss 40 Train Accuracy: 0.925

n_loss 45 Train Accuracy: 0.9333333333333333

n_loss 50 Train Accuracy: 0.92

n_loss 55 Train Accuracy: 0.9272727272727272

n_loss 60 Train Accuracy: 0.9333333333333333

n_loss 65 Train Accuracy: 0.9230769230769231

n_loss 70 Train Accuracy: 0.9285714285714286

n_loss 75 Train Accuracy: 0.9066666666666666

n_loss 80 Train Accuracy: 0.9

n_loss 85 Train Accuracy: 0.9058823529411765

n_loss 90 Train Accuracy: 0.9111111111111111

n_loss 95 Train Accuracy: 0.9157894736842105

n_loss 100 Train Accuracy: 0.92

n_loss 105 Train Accuracy: 0.9238095238095239

n_loss 110 Train Accuracy: 0.9272727272727272

n_loss 115 Train Accuracy: 0.9304347826086956

n_loss 120 Train Accuracy: 0.9333333333333333

n_loss 125 Train Accuracy: 0.936

n_loss 130 Train Accuracy: 0.9307692307692308

n_loss 135 Train Accuracy: 0.9333333333333333

n_loss 140 Train Accuracy: 0.9285714285714286

n_loss 145 Train Accuracy: 0.9310344827586207

n_loss 150 Train Accuracy: 0.9333333333333333

n_loss 155 Train Accuracy: 0.9354838709677419

n_loss 160 Train Accuracy: 0.9375

n_loss 165 Train Accuracy: 0.9333333333333333

n_loss 170 Train Accuracy: 0.9352941176470588

n_loss 175 Train Accuracy: 0.9371428571428572

n_loss 180 Train Accuracy: 0.9388888888888889

n_loss 185 Train Accuracy: 0.9405405405405406

n_loss 190 Train Accuracy: 0.9421052631578948

n_loss 195 Train Accuracy: 0.9435897435897436

n_loss 200 Train Accuracy: 0.935

n_loss 205 Train Accuracy: 0.9317073170731708

n_loss 210 Train Accuracy: 0.9333333333333333

n_loss 215 Train Accuracy: 0.9348837209302325

n_loss 220 Train Accuracy: 0.9272727272727272

n_loss 225 Train Accuracy: 0.9244444444444444

n_loss 230 Train Accuracy: 0.9217391304347826

n_loss 235 Train Accuracy: 0.9234042553191489

n_loss 240 Train Accuracy: 0.925

n_loss 245 Train Accuracy: 0.926530612244898

n_loss 250 Train Accuracy: 0.928

n_loss 255 Train Accuracy: 0.9294117647058824

n_loss 260 Train Accuracy: 0.926923076923077

n_loss 265 Train Accuracy: 0.9283018867924528

n_loss 270 Train Accuracy: 0.9222222222222223

n_loss 275 Train Accuracy: 0.9236363636363636

n_loss 280 Train Accuracy: 0.925

n_loss 285 Train Accuracy: 0.9263157894736842

n_loss 290 Train Accuracy: 0.9206896551724137

n_loss 295 Train Accuracy: 0.9220338983050848

n_loss 300 Train Accuracy: 0.9233333333333333

n_loss 305 Train Accuracy: 0.9245901639344263

n_loss 310 Train Accuracy: 0.9258064516129032

n_loss 315 Train Accuracy: 0.926984126984127

n_loss 320 Train Accuracy: 0.928125

n_loss 325 Train Accuracy: 0.9292307692307692

n_loss 330 Train Accuracy: 0.9303030303030303

n_loss 335 Train Accuracy: 0.9313432835820895

n_loss 340 Train Accuracy: 0.9323529411764706

n_loss 345 Train Accuracy: 0.9333333333333333

n_loss 350 Train Accuracy: 0.9342857142857143

n_loss 355 Train Accuracy: 0.9352112676056338

n_loss 360 Train Accuracy: 0.9333333333333333

n_loss 365 Train Accuracy: 0.9315068493150684

n_loss 370 Train Accuracy: 0.9324324324324325

n_loss 375 Train Accuracy: 0.9333333333333333

n_loss 380 Train Accuracy: 0.9315789473684211

n_loss 385 Train Accuracy: 0.9324675324675324

n_loss 390 Train Accuracy: 0.9333333333333333

n_loss 395 Train Accuracy: 0.9316455696202531

n_loss 400 Train Accuracy: 0.9325

n_loss 405 Train Accuracy: 0.9333333333333333

n_loss 410 Train Accuracy: 0.9317073170731708

n_loss 415 Train Accuracy: 0.9325301204819277

n_loss 420 Train Accuracy: 0.9333333333333333

n_loss 425 Train Accuracy: 0.9341176470588235

n_loss 430 Train Accuracy: 0.9348837209302325

n_loss 435 Train Accuracy: 0.9356321839080459

n_loss 440 Train Accuracy: 0.9363636363636364

n_loss 445 Train Accuracy: 0.9348314606741573

n_loss 450 Train Accuracy: 0.9355555555555556

n_loss 455 Train Accuracy: 0.9362637362637363

n_loss 460 Train Accuracy: 0.9369565217391305

n_loss 465 Train Accuracy: 0.9376344086021505

n_loss 470 Train Accuracy: 0.9382978723404255

n_loss 475 Train Accuracy: 0.9368421052631579

n_loss 480 Train Accuracy: 0.9375

n_loss 485 Train Accuracy: 0.9381443298969072

n_loss 490 Train Accuracy: 0.936734693877551

n_loss 495 Train Accuracy: 0.9373737373737374

n_loss 500 Train Accuracy: 0.938

Train Accuracy: 0.938

Epoch: 2, Loss: 0.036427834063768386

eval

Eval Accuracy: 0.95

done vqsl

"""
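The VSQL example pads each flattened 28×28 image (784 pixels) with 240 zeros to reach 1024 = 2^10 values. A plausible reason, assuming the `QModel` defined earlier amplitude-encodes each sample, is that amplitude encoding needs a vector whose length is a power of two and whose L2 norm is 1. The stdlib-only sketch below shows that arithmetic; `pad_to_power_of_two` and `l2_normalize` are hypothetical helpers, not pyvqnet functions.

```python
import math

def pad_to_power_of_two(values):
    """Zero-pad a list to the next power of two and return (padded, n_qubits)."""
    n_qubits = max(1, math.ceil(math.log2(len(values))))
    target = 2 ** n_qubits
    return values + [0.0] * (target - len(values)), n_qubits

def l2_normalize(values):
    """Scale a vector to unit L2 norm, as amplitude encoding requires."""
    norm = math.sqrt(sum(v * v for v in values))
    return [v / norm for v in values] if norm > 0 else values

pixels = [1.0] * 784                      # stand-in for a flattened 28x28 image
padded, n_qubits = pad_to_power_of_two(pixels)
amplitudes = l2_normalize(padded)
print(len(padded), n_qubits)              # 1024 10
```

With 1024 amplitudes the encoded state fits exactly on 10 qubits, which is why 240 (= 1024 - 784) zeros are appended rather than an arbitrary amount.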

QMLP Model Example¶

The following code implements a quantum multilayer perceptron (QMLP) architecture featuring error-tolerant input embedding, rich nonlinearities, and enhanced variational circuit simulation with parameterized two-qubit entangling gates, following the paper QMLP: An Error-Tolerant Nonlinear Quantum MLP Architecture using Parameterized Two-Qubit Gates.
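In `build_qmlp_vqc`, the input is encoded twice with RX gates (data re-uploading), and each encoding is followed by a layer of general single-qubit `rot` gates and a ring of `crx` gates. This accounts for the `nq*8` weight vector: each round uses 3 angles per qubit for `rot` plus 1 angle per qubit for the CRX ring. The pure-Python sketch below only reproduces that index bookkeeping (the helper names are hypothetical); it does not simulate the circuit.

```python
def qmlp_weight_slices(nq):
    """Return (name, start, stop) for each block of the flat weight vector:
    two rounds of [Rot on every qubit, CRX ring]."""
    return [
        ("rot_1", 0, 3 * nq),        # 3 Euler angles per qubit
        ("crx_1", 3 * nq, 4 * nq),   # 1 angle per CRX gate in the ring
        ("rot_2", 4 * nq, 7 * nq),
        ("crx_2", 7 * nq, 8 * nq),
    ]

def crx_ring_pairs(nq):
    """(control, target) wires of the CRX ring, including the wrap-around pair."""
    return [(i, (i + 1) % nq) for i in range(nq)]

for name, start, stop in qmlp_weight_slices(4):
    print(name, start, stop)
print(crx_ring_pairs(4))  # [(0, 1), (1, 2), (2, 3), (3, 0)]
```

The slices are contiguous and end at `8 * nq`, matching `Parameter((nq*8,))`.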

First, we use the pyvqnet.qnn.vqc API to define the quantum neural network.

import os

import gzip

import struct

import numpy as np

from pyvqnet.nn.module import Module

from pyvqnet.nn.loss import MeanSquaredError, CrossEntropyLoss

from pyvqnet.optim.adam import Adam

from pyvqnet.qnn.measure import expval

from pyvqnet.nn.pooling import AvgPool2D

from pyvqnet.nn.linear import Linear

from pyvqnet.data.data import data_generator

from pyvqnet.qnn.vqc import QMachine,QModule,rot, crx,rx,MeasureAll

from pyvqnet.nn import Parameter

import matplotlib

from matplotlib import pyplot as plt

try:

matplotlib.use("TkAgg")

except: # pylint:disable=bare-except

print("Can not use matplot TkAgg")

try:

import urllib.request

except ImportError:

raise ImportError("You should use Python 3.x")

def vqc_rot_cir(qm,weights,qubits):

for i in range(len(qubits)):

rot(q_machine=qm,wires=qubits[i], params= weights[3*i:3*i+3])

def vqc_crot_cir(qm,weights,qubits):

for i in range(len(qubits)):

crx(q_machine=qm,wires=[qubits[i],qubits[(i+1)%len(qubits)]], params= weights[i])

class build_qmlp_vqc(QModule):

def __init__(self,nq):

super(build_qmlp_vqc,self).__init__()

self.qm = QMachine(nq)

self.nq =nq

self.w =Parameter((nq*8,))

pauli_str_list =[]

for position in range(nq):

pauli_str = {"Z" + str(position): 1.0}

pauli_str_list.append(pauli_str)

self.ma = MeasureAll(obs=pauli_str_list)

def forward(self,x):

self.qm.reset_states(x.shape[0])

num_qubits = self.nq

for i in range(num_qubits):

rx(self.qm,i,x[:,[i]])  # x[:,[i]] keeps shape (batch, 1); x[:,i] would give shape (batch,), which rx does not accept

vqc_rot_cir(self.qm,self.w[0:num_qubits*3],range(self.nq))

vqc_crot_cir(self.qm,self.w[num_qubits*3:num_qubits*4],range(self.nq))

for i in range(num_qubits):

rx(self.qm,i,x[:,[i]])

vqc_rot_cir(self.qm,self.w[num_qubits*4:num_qubits*7],range(self.nq))

vqc_crot_cir(self.qm,self.w[num_qubits*7:num_qubits*8],range(self.nq))

return self.ma(self.qm)

class QMLPModel(Module):

def __init__(self):

super(QMLPModel, self).__init__()

self.ave_pool2d = AvgPool2D([7, 7], [7, 7], "valid")

self.quantum_circuit = build_qmlp_vqc(4)  # 4 qubits: only the first 4 of the 16 pooled features are encoded

self.linear = Linear(4, 10)

def forward(self, x):

bsz = x.shape[0]

x = self.ave_pool2d(x)

input_data = x.reshape([bsz, 16])

quantum_result = self.quantum_circuit(input_data)

result = self.linear(quantum_result)

return result
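`QMLPModel` shrinks each 28×28 image with a 7×7 average pool of stride 7 and "valid" padding, giving a 4×4 grid that is reshaped to 16 features. Because `build_qmlp_vqc(4)` applies RX encodings only to `x[:,[0]]` through `x[:,[3]]`, the circuit sees just the top row of the pooled grid, assuming a row-major reshape. The stdlib-only sketch below reproduces the pooling arithmetic; `avg_pool_valid` is a hypothetical helper, not the pyvqnet `AvgPool2D` API.

```python
def avg_pool_valid(image, k):
    """Non-overlapping k x k average pooling with 'valid' padding (stride = k)."""
    out_rows, out_cols = len(image) // k, len(image[0]) // k
    pooled = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            block = [image[r * k + i][c * k + j] for i in range(k) for j in range(k)]
            row.append(sum(block) / (k * k))
        pooled.append(row)
    return pooled

# Synthetic 28x28 "image" whose pixel value is its row index / 27.
image = [[r / 27 for _ in range(28)] for r in range(28)]
pooled = avg_pool_valid(image, 7)
flat = [v for row in pooled for v in row]      # row-major flatten, like reshape([bsz, 16])
print(len(pooled), len(pooled[0]), len(flat))  # 4 4 16
print(flat[:4])                                # the four features the 4-qubit circuit encodes
```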

The following code loads the MNIST data and runs the training loop:

url_base = 'https://ossci-datasets.s3.amazonaws.com/mnist/'

key_file = {

"train_img": "train-images-idx3-ubyte.gz",

"train_label": "train-labels-idx1-ubyte.gz",

"test_img": "t10k-images-idx3-ubyte.gz",

"test_label": "t10k-labels-idx1-ubyte.gz"

}

def _download(dataset_dir, file_name):

"""

Download mnist data if needed.

"""

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

file_path_ungz = file_path_ungz.replace("-idx", ".idx")  # match the file names load_mnist opens

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

file_path_ungz = file_path_ungz.replace("-idx", ".idx")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

print("Done")

def download_mnist(dataset_dir):

os.makedirs(dataset_dir, exist_ok=True)  # urlretrieve cannot write into a missing folder

for v in key_file.values():

_download(dataset_dir, v)

def load_mnist(dataset="training_data", digits=np.arange(2), path="examples"):

"""

load mnist data

"""

from array import array as pyarray

download_mnist(path)

if dataset == "training_data":

fname_image = os.path.join(path, "train-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "train-labels.idx1-ubyte").replace(

"\\", "/")

elif dataset == "testing_data":

fname_image = os.path.join(path, "t10k-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "t10k-labels.idx1-ubyte").replace(

"\\", "/")

else:

raise ValueError("dataset must be 'training_data' or 'testing_data'")

flbl = open(fname_label, "rb")

_, size = struct.unpack(">II", flbl.read(8))

lbl = pyarray("b", flbl.read())

flbl.close()

fimg = open(fname_image, "rb")

_, size, rows, cols = struct.unpack(">IIII", fimg.read(16))

img = pyarray("B", fimg.read())

fimg.close()

ind = [k for k in range(size) if lbl[k] in digits]

num = len(ind)

images = np.zeros((num, rows, cols))

labels = np.zeros((num, 1), dtype=int)

for i in range(len(ind)):

images[i] = np.array(img[ind[i] * rows * cols:(ind[i] + 1) * rows *

cols]).reshape((rows, cols))

labels[i] = lbl[ind[i]]

return images, labels

def data_select(train_num, test_num):

"""

Select data from mnist dataset.

"""

x_train, y_train = load_mnist("training_data")

x_test, y_test = load_mnist("testing_data")

idx_train = np.append(

np.where(y_train == 0)[0][:train_num],

np.where(y_train == 1)[0][:train_num])

x_train = x_train[idx_train]

y_train = y_train[idx_train]

x_train = x_train / 255

y_train = np.eye(2)[y_train].reshape(-1, 2)

# Keep only test samples with labels 0 and 1

idx_test = np.append(

np.where(y_test == 0)[0][:test_num],

np.where(y_test == 1)[0][:test_num])

x_test = x_test[idx_test]

y_test = y_test[idx_test]

x_test = x_test / 255

y_test = np.eye(2)[y_test].reshape(-1, 2)

return x_train, y_train, x_test, y_test

def vqnet_test_QMLPModel():

train_size = 1000

eval_size = 100

x_train, y_train = load_mnist("training_data", digits=np.arange(10))

x_test, y_test = load_mnist("testing_data", digits=np.arange(10))

x_train = x_train[:train_size]

y_train = y_train[:train_size]

x_test = x_test[:eval_size]

y_test = y_test[:eval_size]

x_train = x_train / 255

x_test = x_test / 255

y_train = np.eye(10)[y_train].reshape(-1, 10)

y_test = np.eye(10)[y_test].reshape(-1, 10)

model = QMLPModel()

optimizer = Adam(model.parameters(), lr=0.005)

loss_func = CrossEntropyLoss()

loss_list = []

epochs = 30

for epoch in range(1, epochs):

total_loss = []

correct = 0

n_train = 0

for x, y in data_generator(x_train,

y_train,

batch_size=16,

shuffle=True):

x = x.reshape(-1, 1, 28, 28)

optimizer.zero_grad()

# Forward pass

output = model(x)

# Calculating loss

loss = loss_func(y, output)

loss_np = np.array(loss.data)

print("loss: ", loss_np)

np_output = np.array(output.data, copy=False)

temp_out = np_output.argmax(axis=1)

temp_output = np.zeros((temp_out.size, 10))

temp_output[np.arange(temp_out.size), temp_out] = 1

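# Element-wise comparison of one-hot vectors: counts matching entries, not correctly classified samples

temp_mask = (temp_output == y)

correct += np.sum(np.array(temp_mask))

n_train += 160  # assumes a full batch: 16 samples x 10 one-hot entries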

# Backward pass

loss.backward()

# Optimize the weights

optimizer._step()

total_loss.append(loss_np)

print("##########################")

print(f"Train Accuracy: {correct / n_train}")

loss_list.append(np.sum(total_loss) / len(total_loss))

# train_acc_list.append(correct / n_train)

print("epoch: ", epoch)

# print(100. * (epoch + 1) / epochs)

print("{:.0f} loss is : {:.10f}".format(epoch, loss_list[-1]))

if __name__ == "__main__":

vqnet_test_QMLPModel()

"""

##########################

Train Accuracy: 0.8111111111111111

epoch: 1

1 loss is : 1.5855706836

##########################

Train Accuracy: 0.8128968253968254

epoch: 2

2 loss is : 0.5768806215

##########################

Train Accuracy: 0.8128968253968254

epoch: 3

3 loss is : 0.3712821839

##########################

Train Accuracy: 0.8128968253968254

epoch: 4

4 loss is : 0.3419296628

##########################

Train Accuracy: 0.8128968253968254

epoch: 5

5 loss is : 0.3328191666

##########################

Train Accuracy: 0.8128968253968254

epoch: 6

6 loss is : 0.3280464354

##########################

Train Accuracy: 0.8128968253968254

epoch: 7

7 loss is : 0.3252888937

##########################

Train Accuracy: 0.8128968253968254

epoch: 8

8 loss is : 0.3235242934

##########################

Train Accuracy: 0.8128968253968254

epoch: 9

9 loss is : 0.3226686205

##########################

Train Accuracy: 0.8128968253968254

epoch: 10

10 loss is : 0.3220652020

"""
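In the QMLP training loop, `(temp_output == y)` compares predicted and true one-hot vectors element by element, and `n_train` grows by 160 (16 samples × 10 classes) per batch. The printed "Train Accuracy" is therefore the fraction of matching one-hot entries, which is at least 0.8 for 10 classes even when every prediction is wrong. Working backwards from the log, 1000 samples give 63 batches, so n_train = 10080, and 0.8128968253968254 × 10080 = 8194 matching entries; since a correct sample contributes 10 matches and a wrong one 8, 8 × 1000 + 2c = 8194 gives c = 97 correct predictions (about 9.7%). The stdlib sketch below contrasts the two metrics; the helper names are hypothetical.

```python
def one_hot(index, n_classes):
    return [1 if i == index else 0 for i in range(n_classes)]

def elementwise_match_rate(pred_indices, true_indices, n_classes):
    """What the QMLP loop measures: the fraction of matching one-hot entries."""
    matches = total = 0
    for p, t in zip(pred_indices, true_indices):
        pv, tv = one_hot(p, n_classes), one_hot(t, n_classes)
        matches += sum(a == b for a, b in zip(pv, tv))
        total += n_classes
    return matches / total

def classification_accuracy(pred_indices, true_indices):
    """Fraction of samples whose predicted class equals the label."""
    correct = sum(p == t for p, t in zip(pred_indices, true_indices))
    return correct / len(true_indices)

preds = [0, 0, 0, 0]   # every prediction is class 0 ...
labels = [1, 2, 3, 4]  # ... and every label is something else
print(elementwise_match_rate(preds, labels, 10))  # 0.8
print(classification_accuracy(preds, labels))     # 0.0
```

Comparing `np_output.argmax(axis=1)` with `y.argmax(axis=1)`, as the VSQL example does, yields the per-sample accuracy instead.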

Example of implementing a reinforcement learning algorithm using a quantum classical hybrid neural network model¶

Load the necessary libraries and define the global variables:

import numpy as np

import random

import gym

import time

from matplotlib import animation

from pyvqnet.nn import Module,Parameter

from pyvqnet.nn.loss import MeanSquaredError

from pyvqnet.optim.adam import Adam

from pyvqnet.tensor import tensor,QTensor

from pyvqnet import kfloat32

from pyvqnet.qnn.vqc import u3,cnot,rx,ry,rz,\

QMachine,QModule,MeasureAll

import matplotlib

from matplotlib import pyplot as plt

try:

matplotlib.use("TkAgg")

except: # pylint:disable=bare-except

print("Can not use matplot TkAgg")

def display_frames_as_gif(frames, c_index):

patch = plt.imshow(frames[0])

plt.axis('off')

def animate(i):

patch.set_data(frames[i])

anim = animation.FuncAnimation(plt.gcf(), animate, frames=len(frames), interval=5)

name_result = "./result_"+str(c_index)+".gif"

anim.save(name_result, writer='pillow', fps=10)

CIRCUIT_SIZE = 4

MAX_ITERATIONS = 50

MAX_STEPS = 250

BATCHSIZE = 5

TARGET_MAX = 20

GAMMA = 0.99

STATE_T = 0

ACTION = 1

REWARD = 2

STATE_NT = 3

DONE = 4

n_qubits= 4

n_layers=2

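# Written for the pre-0.26 gym API: reset() returns only the state, step() returns 4 values and render() takes mode=

env = gym.make("FrozenLake-v1", is_slippery = False, map_name = '4x4')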

state = env.reset()

targ_counter = 0

sampled_vs = []

memory = {}

param = QTensor(0.01 * np.random.randn(n_layers, n_qubits, 3))

bias = QTensor([[0.0, 0.0, 0.0, 0.0]])
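Both the QMLP and the reinforcement learning circuits are read out with `MeasureAll` over the observables Z0, Z1, ..., which yields one expectation value per qubit. For a pure state, ⟨Z_k⟩ = P(qubit k is 0) − P(qubit k is 1). The stdlib sketch below computes this from a state vector; `expval_z` is a hypothetical helper, and the assumption that qubit 0 is the most significant bit of the basis index is a convention choice, not something this page states about pyvqnet.

```python
import math

def expval_z(state, n_qubits):
    """Return [<Z_0>, ..., <Z_{n-1}>] for a state vector of 2**n_qubits amplitudes.

    Assumes qubit 0 is the most significant bit of the basis-state index.
    """
    results = []
    for k in range(n_qubits):
        shift = n_qubits - 1 - k
        total = 0.0
        for index, amp in enumerate(state):
            prob = abs(amp) ** 2
            total += prob if (index >> shift) & 1 == 0 else -prob
        results.append(total)
    return results

# Basis state |0101> on 4 qubits.
basis = [0j] * 16
basis[5] = 1 + 0j
print(expval_z(basis, 4))  # [1.0, -1.0, 1.0, -1.0]

# Equal superposition on a single qubit: <Z> is (numerically) 0.
plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]
print(expval_z(plus, 1))
```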

The following code defines the quantum neural network:

def layer_circuit(qm,qubits, weights):

# Entanglement block

cnot(qm, [qubits[0], qubits[1]])

cnot(qm, [qubits[1], qubits[2]])

cnot(qm, [qubits[2], qubits[3]])

# u3 gate

for i in range(len(qubits)):

u3(qm,qubits[i],weights[i])

def encoder(encodings):

encodings = int(encodings.to_numpy()[0])

return [i for i, b in enumerate(f'{encodings:0{CIRCUIT_SIZE}b}') if b == '1']

class QDRL_MODULE(QModule):

def __init__(self,num_qubits,n_layers=2):

super().__init__()

self.num_qubits = num_qubits

self.qm = QMachine(num_qubits)

self.weights = Parameter((n_layers,num_qubits,3))

pauli_str_list = []

for position in range(self.num_qubits):

pauli_str = {"Z" + str(position): 1.0}

pauli_str_list.append(pauli_str)

self.ma = MeasureAll(obs=pauli_str_list)

def forward(self,x):

qubits = range(self.num_qubits)

all_ma = []

# x is batch data

for i in range(x.shape[0]):

# reset to a fresh initial state vector for each sample

self.qm.reset_states(1)

if x[i]:

wires = encoder(x[i])

for wire in wires:

rx(self.qm,qubits[wire], np.pi)

rz(self.qm,qubits[wire], np.pi)

for w in range(self.weights.shape[0]):

layer_circuit(self.qm,qubits, self.weights[w])

all_ma.append(self.ma(self.qm))

return tensor.cat(all_ma)

class DRLModel(Module):

def __init__(self):

super(DRLModel, self).__init__()

self.quantum_circuit = QDRL_MODULE(n_qubits,n_layers)

def forward(self, x):

quantum_result = self.quantum_circuit(x)

return quantum_result
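`QDRL_MODULE` basis-encodes the FrozenLake state. The 4×4 map has 16 states (0 to 15), which fit in `CIRCUIT_SIZE = 4` bits, and `encoder` returns the positions of the 1-bits in the zero-padded binary string, so the most significant bit maps to qubit 0. Each selected qubit then receives RX(π) followed by RZ(π), which takes |0⟩ to |1⟩ up to a global phase under the standard gate conventions. The sketch below reproduces only the index logic and, unlike the example, takes a plain `int` instead of a `QTensor`.

```python
CIRCUIT_SIZE = 4  # same value as the global in the example

def encoder(state_index):
    """Return the qubit indices to flip to |1> for a FrozenLake state index."""
    bits = f"{state_index:0{CIRCUIT_SIZE}b}"
    return [i for i, b in enumerate(bits) if b == "1"]

for s in (0, 1, 5, 15):
    print(s, f"{s:04b}", encoder(s))
# 0 0000 []
# 1 0001 [3]
# 5 0101 [1, 3]
# 15 1111 [0, 1, 2, 3]
```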

Training code:

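# Target copies: the global `param` is never read by DRLModel; only `bias_targ` enters the TD targets

param_targ = param.copy().reshape([1, -1]).to_numpy()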

bias_targ = bias.copy()

loss_func = MeanSquaredError()

model = DRLModel()

opt = Adam(model.parameters(), lr=5)

for i in range(MAX_ITERATIONS):

start = time.time()

state_t = env.reset()

a_init = env.action_space.sample()

total_reward = 0

done = False

frames = []

for t in range(MAX_STEPS):

frames.append(env.render(mode='rgb_array'))

time.sleep(0.1)

input_x = QTensor([[state_t]],dtype=kfloat32)

acts = model(input_x) + bias

# print(f'type of acts: {type(acts)}')

act_t = tensor.QTensor.argmax(acts)

# print(f'act_t: {act_t} type of act_t: {type(act_t)}')

act_t_np = int(act_t.to_numpy())

print(f'Episode: {i}, Steps: {t}, act: {act_t_np}')

state_nt, reward, done, info = env.step(action=act_t_np)

targ_counter += 1

input_state_nt = QTensor([[state_nt]],dtype=kfloat32)

act_nt = QTensor.argmax(model(input_state_nt)+bias)

act_nt_np = int(act_nt.to_numpy())

memory[i, t] = (state_t, act_t, reward, state_nt, done)

if len(memory) >= BATCHSIZE:

# print('Optimizing...')

sampled_vs = [memory[k] for k in random.sample(list(memory), BATCHSIZE)]

target_temp = []

for s in sampled_vs:

if s[DONE]:

target_temp.append(QTensor(s[REWARD]).reshape([1, -1]))

else:

input_s = QTensor([[s[STATE_NT]]],dtype=kfloat32)

out_temp = s[REWARD] + GAMMA * tensor.max(model(input_s) + bias_targ)

out_temp = out_temp.reshape([1, -1])

target_temp.append(out_temp)

target_out = []

for b in sampled_vs:

input_b = QTensor([[b[STATE_T]]], requires_grad=True,dtype=kfloat32)

out_result = model(input_b) + bias

index = int(b[ACTION].to_numpy())

out_result_temp = out_result[0][index].reshape([1, -1])

target_out.append(out_result_temp)

opt.zero_grad()

target_label = tensor.concatenate(target_temp, 1)

output = tensor.concatenate(target_out, 1)

loss = loss_func(target_label, output)

loss.backward()

opt.step()

# update parameters in target circuit

if targ_counter == TARGET_MAX:

param_targ = param.copy().reshape([1, -1]).to_numpy()

bias_targ = bias.copy()

targ_counter = 0

state_t, act_t_np = state_nt, act_nt_np

if done:

print("reward", reward)

if reward == 1.0:

frames.append(env.render(mode='rgb_array'))

display_frames_as_gif(frames, i)

exit()

break

end = time.time()

"""

# Episode: 0, Steps: 0, act: 0

# Episode: 0, Steps: 1, act: 0

# Episode: 0, Steps: 2, act: 0

# Episode: 0, Steps: 3, act: 0

# Episode: 0, Steps: 4, act: 0

# Episode: 0, Steps: 5, act: 3

# Episode: 0, Steps: 6, act: 0

# Episode: 0, Steps: 7, act: 0

# Episode: 0, Steps: 8, act: 1

# Episode: 0, Steps: 9, act: 3

# Episode: 0, Steps: 10, act: 0

# Episode: 0, Steps: 11, act: 1

# Episode: 0, Steps: 12, act: 2

# reward 0.0

# Episode: 1, Steps: 0, act: 3

# Episode: 1, Steps: 1, act: 1

# Episode: 1, Steps: 2, act: 0

# Episode: 1, Steps: 3, act: 0

# Episode: 1, Steps: 4, act: 2

# reward 0.0

# Episode: 2, Steps: 0, act: 0

# Episode: 2, Steps: 1, act: 2

# Episode: 2, Steps: 2, act: 2

# Episode: 2, Steps: 3, act: 1

# Episode: 2, Steps: 4, act: 3

# Episode: 2, Steps: 5, act: 1

# Episode: 2, Steps: 6, act: 1

# Episode: 2, Steps: 7, act: 3

# Episode: 2, Steps: 8, act: 2

# reward 0.0

# Episode: 3, Steps: 0, act: 0

# Episode: 3, Steps: 1, act: 0

# Episode: 3, Steps: 2, act: 0

# Episode: 3, Steps: 3, act: 1

# Episode: 3, Steps: 4, act: 1

# Episode: 3, Steps: 5, act: 2

# Episode: 3, Steps: 6, act: 3

# reward 0.0

# Episode: 4, Steps: 0, act: 0

# Episode: 4, Steps: 1, act: 0

# Episode: 4, Steps: 2, act: 1

# Episode: 4, Steps: 3, act: 0

# Episode: 4, Steps: 4, act: 2

# reward 0.0

# Episode: 5, Steps: 0, act: 3

# Episode: 5, Steps: 1, act: 1

# Episode: 5, Steps: 2, act: 1

# Episode: 5, Steps: 3, act: 0

# Episode: 5, Steps: 4, act: 1

# reward 0.0

# Episode: 6, Steps: 0, act: 2

# Episode: 6, Steps: 1, act: 3

# Episode: 6, Steps: 2, act: 3

# Episode: 6, Steps: 3, act: 0

# Episode: 6, Steps: 4, act: 0

# Episode: 6, Steps: 5, act: 1

# Episode: 6, Steps: 6, act: 1

# Episode: 6, Steps: 7, act: 2

# Episode: 6, Steps: 8, act: 3

# reward 0.0

# Episode: 7, Steps: 0, act: 2

# Episode: 7, Steps: 1, act: 1

# reward 0.0

# Episode: 8, Steps: 0, act: 0

# Episode: 8, Steps: 1, act: 2

# Episode: 8, Steps: 2, act: 1

# reward 0.0

# Episode: 9, Steps: 0, act: 0

# Episode: 9, Steps: 1, act: 0

# Episode: 9, Steps: 2, act: 0

# Episode: 9, Steps: 3, act: 0

# Episode: 9, Steps: 4, act: 3

# Episode: 9, Steps: 5, act: 2

# Episode: 9, Steps: 6, act: 3

# Episode: 9, Steps: 7, act: 0

# Episode: 9, Steps: 8, act: 0

# Episode: 9, Steps: 9, act: 1

# Episode: 9, Steps: 10, act: 0

# Episode: 9, Steps: 11, act: 1

# Episode: 9, Steps: 12, act: 3

# Episode: 9, Steps: 13, act: 0

# Episode: 9, Steps: 14, act: 0

# Episode: 9, Steps: 15, act: 0

# Episode: 9, Steps: 16, act: 2

# reward 0.0

# Episode: 10, Steps: 0, act: 0

# Episode: 10, Steps: 1, act: 0

# Episode: 10, Steps: 2, act: 0

# Episode: 10, Steps: 3, act: 1

# Episode: 10, Steps: 4, act: 2

# reward 0.0

# Episode: 11, Steps: 0, act: 0

# Episode: 11, Steps: 1, act: 0

# Episode: 11, Steps: 2, act: 1

# Episode: 11, Steps: 3, act: 0

# Episode: 11, Steps: 4, act: 0

# Episode: 11, Steps: 5, act: 2

# reward 0.0

# Episode: 12, Steps: 0, act: 0

# Episode: 12, Steps: 1, act: 0

# Episode: 12, Steps: 2, act: 3

# Episode: 12, Steps: 3, act: 0

# Episode: 12, Steps: 4, act: 0

# Episode: 12, Steps: 5, act: 0

# Episode: 12, Steps: 6, act: 3

# Episode: 12, Steps: 7, act: 3

# Episode: 12, Steps: 8, act: 0

# Episode: 12, Steps: 9, act: 0

# Episode: 12, Steps: 10, act: 3

# Episode: 12, Steps: 11, act: 0

# Episode: 12, Steps: 12, act: 1

# Episode: 12, Steps: 13, act: 1

# Episode: 12, Steps: 14, act: 0

# Episode: 12, Steps: 15, act: 0

# Episode: 12, Steps: 16, act: 2

# Episode: 12, Steps: 17, act: 1

# Episode: 12, Steps: 18, act: 1

# Episode: 12, Steps: 19, act: 3

# Episode: 12, Steps: 20, act: 0

# Episode: 12, Steps: 21, act: 0

# Episode: 12, Steps: 22, act: 2

# Episode: 12, Steps: 23, act: 3

# reward 0.0

# Episode: 13, Steps: 0, act: 3

# Episode: 13, Steps: 1, act: 0

# Episode: 13, Steps: 2, act: 0

# Episode: 13, Steps: 3, act: 1

# Episode: 13, Steps: 4, act: 3

# Episode: 13, Steps: 5, act: 0

# Episode: 13, Steps: 6, act: 2

# Episode: 13, Steps: 7, act: 3

# Episode: 13, Steps: 8, act: 0

# Episode: 13, Steps: 9, act: 1

# Episode: 13, Steps: 10, act: 3

# Episode: 13, Steps: 11, act: 1

# Episode: 13, Steps: 12, act: 1

# Episode: 13, Steps: 13, act: 2

# Episode: 13, Steps: 14, act: 0

# Episode: 13, Steps: 15, act: 2

# Episode: 13, Steps: 16, act: 0

# Episode: 13, Steps: 17, act: 0

# Episode: 13, Steps: 18, act: 1

# reward 0.0

# Episode: 14, Steps: 0, act: 1

# Episode: 14, Steps: 1, act: 2

# reward 0.0

# Episode: 15, Steps: 0, act: 3

# Episode: 15, Steps: 1, act: 2

# Episode: 15, Steps: 2, act: 1

# reward 0.0

# Episode: 16, Steps: 0, act: 1

# Episode: 16, Steps: 1, act: 1

# Episode: 16, Steps: 2, act: 1

# reward 0.0

# Episode: 17, Steps: 0, act: 2

# Episode: 17, Steps: 1, act: 2

# Episode: 17, Steps: 2, act: 3

# Episode: 17, Steps: 3, act: 1

# Episode: 17, Steps: 4, act: 0

# reward 0.0

# Episode: 18, Steps: 0, act: 3

# Episode: 18, Steps: 1, act: 2

# Episode: 18, Steps: 2, act: 3

# Episode: 18, Steps: 3, act: 1

# reward 0.0

# Episode: 19, Steps: 0, act: 0

# Episode: 19, Steps: 1, act: 3

# Episode: 19, Steps: 2, act: 2

# Episode: 19, Steps: 3, act: 1

# reward 0.0

# Episode: 20, Steps: 0, act: 3

# Episode: 20, Steps: 1, act: 0

# Episode: 20, Steps: 2, act: 1
# Episode: 20, Steps: 3, act: 2
# reward 0.0
# Episode: 21, Steps: 0, act: 0
# Episode: 21, Steps: 1, act: 0
# Episode: 21, Steps: 2, act: 0
# Episode: 21, Steps: 3, act: 3
# Episode: 21, Steps: 4, act: 0
# Episode: 21, Steps: 5, act: 0
# Episode: 21, Steps: 6, act: 0
# Episode: 21, Steps: 7, act: 1
# Episode: 21, Steps: 8, act: 0
# Episode: 21, Steps: 9, act: 0
# Episode: 21, Steps: 10, act: 1
# Episode: 21, Steps: 11, act: 3
# Episode: 21, Steps: 12, act: 3
# Episode: 21, Steps: 13, act: 1
# Episode: 21, Steps: 14, act: 1
# Episode: 21, Steps: 15, act: 0
# Episode: 21, Steps: 16, act: 3
# Episode: 21, Steps: 17, act: 1
# Episode: 21, Steps: 18, act: 3
# Episode: 21, Steps: 19, act: 0
# Episode: 21, Steps: 20, act: 3
# Episode: 21, Steps: 21, act: 2
# Episode: 21, Steps: 22, act: 3
# Episode: 21, Steps: 23, act: 0
# Episode: 21, Steps: 24, act: 3
# Episode: 21, Steps: 25, act: 3
# Episode: 21, Steps: 26, act: 0
# Episode: 21, Steps: 27, act: 0
# Episode: 21, Steps: 28, act: 3
# Episode: 21, Steps: 29, act: 0
# Episode: 21, Steps: 30, act: 0
# Episode: 21, Steps: 31, act: 3
# Episode: 21, Steps: 32, act: 1
# Episode: 21, Steps: 33, act: 0
# Episode: 21, Steps: 34, act: 2
# reward 0.0
# Episode: 22, Steps: 0, act: 3
# Episode: 22, Steps: 1, act: 0
# Episode: 22, Steps: 2, act: 3
# Episode: 22, Steps: 3, act: 3
# Episode: 22, Steps: 4, act: 3
# Episode: 22, Steps: 5, act: 1
# Episode: 22, Steps: 6, act: 2
# reward 0.0
# Episode: 23, Steps: 0, act: 0
# Episode: 23, Steps: 1, act: 0
# Episode: 23, Steps: 2, act: 0
# Episode: 23, Steps: 3, act: 2
# Episode: 23, Steps: 4, act: 3
# Episode: 23, Steps: 5, act: 2
# Episode: 23, Steps: 6, act: 0
# Episode: 23, Steps: 7, act: 0
# Episode: 23, Steps: 8, act: 0
# Episode: 23, Steps: 9, act: 1
# Episode: 23, Steps: 10, act: 3
# Episode: 23, Steps: 11, act: 0
# Episode: 23, Steps: 12, act: 2
# Episode: 23, Steps: 13, act: 1
# reward 0.0
# Episode: 24, Steps: 0, act: 0
# Episode: 24, Steps: 1, act: 3
# Episode: 24, Steps: 2, act: 1
# Episode: 24, Steps: 3, act: 1
# Episode: 24, Steps: 4, act: 0
# Episode: 24, Steps: 5, act: 2
# Episode: 24, Steps: 6, act: 2
# Episode: 24, Steps: 7, act: 1
# Episode: 24, Steps: 8, act: 1
# Episode: 24, Steps: 9, act: 1
# Episode: 24, Steps: 10, act: 2
# reward 1.0
"""

Example of quantum-classical transfer learning

Transfer learning is a machine learning method that can be applied to image classifiers built on hybrid classical-quantum networks. The following code example is implemented with VQNet's pyvqnet.qnn.vqc interface.

Transfer learning is a well-established artificial neural network training technique based on the general intuition that if a pre-trained network is good at solving a given problem, then, with just some additional training, it can also be used to solve a different but related problem.

Below, we first train a classical convolutional neural network (CNN) as a classification model, then freeze some of its layer parameters and add a variational quantum circuit, forming a hybrid quantum-classical neural network that is trained with transfer learning.
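The freeze-then-retrain idea can be illustrated without pyvqnet. The minimal NumPy sketch below is only an illustration under stated assumptions: the data are random toy samples, the hypothetical matrix `W_frozen` stands in for the pre-trained CNN layers, and a logistic-regression head stands in for the variational quantum circuit. Only the head's parameters receive gradient updates; the frozen extractor is used purely in the forward pass.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy two-class data (hypothetical): 200 samples, 8 features
X = rng.normal(size=(200, 8))
y = (X[:, 0] + X[:, 1] > 0).astype(float)

# Hypothetical "pre-trained" feature extractor; its weights stay frozen
W_frozen = rng.normal(size=(8, 4))
W_before = W_frozen.copy()

def extract_features(X):
    return np.tanh(X @ W_frozen)

# Trainable head (a stand-in for the variational quantum circuit)
w = np.zeros(4)
b = 0.0

def loss_and_grads(w, b):
    """Binary cross-entropy of the head and its gradients w.r.t. w and b only."""
    f = extract_features(X)          # frozen: no gradient is computed for W_frozen
    p = 1.0 / (1.0 + np.exp(-(f @ w + b)))
    eps = 1e-12
    loss = -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
    err = p - y                      # dLoss/dlogit for sigmoid + cross-entropy
    return loss, f.T @ err / len(y), err.mean()

initial_loss, _, _ = loss_and_grads(w, b)
lr = 0.5
for _ in range(200):
    _, grad_w, grad_b = loss_and_grads(w, b)
    w = w - lr * grad_w              # only the head is updated
    b = b - lr * grad_b
final_loss, _, _ = loss_and_grads(w, b)
print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

Because `W_frozen` never changes, the extracted features stay fixed and only the small head adapts to the new task, which is what keeps this kind of retraining cheap.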

import os
import os.path
import gzip
import struct
import numpy as np
import sys
sys.path.insert(0, "../")
from pyvqnet.nn.module import Module
from pyvqnet.nn.linear import Linear
from pyvqnet.nn.conv import Conv2D
from pyvqnet.utils.storage import load_parameters, save_parameters
from pyvqnet.nn import activation as F
from pyvqnet.nn.pooling import MaxPool2D
from pyvqnet.nn.loss import SoftmaxCrossEntropy
from pyvqnet.optim.sgd import SGD
from pyvqnet.optim.adam import Adam
from pyvqnet.data.data import data_generator
from pyvqnet.tensor import tensor
from pyvqnet.tensor.tensor import QTensor
from pyvqnet.qnn.vqc import hadamard, QMachine, QModule, ry, cnot, MeasureAll
from pyvqnet.nn import Parameter
import matplotlib.pyplot as plt
import matplotlib

try:
    matplotlib.use("TkAgg")
except Exception:
    print("Cannot use the matplotlib TkAgg backend")

try:
    import urllib.request
except ImportError:
    raise ImportError("You should use Python 3.x")

train_size = 50
eval_size = 2
EPOCHES = 3
n_qubits = 4  # Number of qubits
q_depth = 6  # Depth of the quantum circuit (number of variational layers)

def q_h_vqc(qm, qubits):
    # Apply a Hadamard gate to every qubit
    nq = len(qubits)
    for idx in range(nq):
        hadamard(qm, qubits[idx])

def q_ry_embed_vqc(qm, param, qubits):
    # Encode the input features as RY angles;
    # param[:, [idx]] keeps the shape (batch, 1) that ry expects
    nq = len(qubits)
    for idx in range(nq):
        ry(qm, qubits[idx], param[:, [idx]])

def q_ry_param_vqc(qm, param, qubits):
    # Trainable RY rotation on every qubit
    nq = len(qubits)
    for idx in range(nq):
        ry(qm, qubits[idx], param[idx])

def q_entangling_vqc(qm, qubits):
    nqubits = len(qubits)
    for i in range(0, nqubits - 1, 2):  # Loop over even indices: i=0,2,...N-2
        cnot(qm, [qubits[i], qubits[i + 1]])
    for i in range(1, nqubits - 1, 2):  # Loop over odd indices: i=1,3,...N-3
        cnot(qm, [qubits[i], qubits[i + 1]])

def vqc_quantum_net(qm, q_input_features, q_weights_flat, qubits):
    # One row of trainable angles per variational layer
    q_weights = q_weights_flat.reshape([q_depth, n_qubits])
    q_h_vqc(qm, qubits)
    q_ry_embed_vqc(qm, q_input_features, qubits)
    for k in range(q_depth):
        q_entangling_vqc(qm, qubits)
        q_ry_param_vqc(qm, q_weights[k], qubits)

class QNet(QModule):
    def __init__(self, nq):
        super(QNet, self).__init__()
        self.qm = QMachine(nq)
        self.nq = nq
        self.w = Parameter((q_depth * n_qubits,))
        # Measure the Pauli-Z expectation value on every qubit
        pauli_str_list = []
        for position in range(nq):
            pauli_str = {"Z" + str(position): 1.0}
            pauli_str_list.append(pauli_str)
        self.ma = MeasureAll(obs=pauli_str_list)

    def forward(self, x):
        # You have to expand the states to the input batch size!
        self.qm.reset_states(x.shape[0])
        vqc_quantum_net(self.qm, x, self.w, range(self.nq))
        return self.ma(self.qm)
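To see what `vqc_quantum_net` computes for a single sample, here is a minimal, independent NumPy state-vector sketch of the same gate sequence: Hadamard on every qubit, RY embedding of the inputs, then `q_depth` layers of even/odd CNOTs followed by trainable RY rotations, and finally the Pauli-Z expectation value of each qubit. It is not pyvqnet code: every helper name here (`apply_1q`, `apply_cnot`, `entangling_pairs`, `expval_z`, `quantum_net_numpy`) is hypothetical, and it assumes qubit `i` maps to tensor axis `i`, which may differ from pyvqnet's internal ordering.

```python
import numpy as np

H = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2.0)

def ry_matrix(theta):
    """RY(theta) = exp(-i * theta * Y / 2); its matrix is real-valued."""
    c, s = np.cos(theta / 2.0), np.sin(theta / 2.0)
    return np.array([[c, -s], [s, c]])

def apply_1q(state, gate, wire):
    """Apply a 2x2 gate to axis `wire` of a state tensor of shape (2,)*n."""
    state = np.tensordot(gate, state, axes=([1], [wire]))
    return np.moveaxis(state, 0, wire)

def apply_cnot(state, control, target):
    """Flip the target qubit on the slice where the control qubit is |1>."""
    state = state.copy()
    idx = [slice(None)] * state.ndim
    idx[control] = 1
    # Integer indexing removes the control axis, so later axes shift down by one
    t = target - 1 if target > control else target
    state[tuple(idx)] = np.flip(state[tuple(idx)], axis=t).copy()
    return state

def entangling_pairs(n_qubits):
    """CNOT pairs of one entangling layer: even pairs first, then odd pairs."""
    even = [(i, i + 1) for i in range(0, n_qubits - 1, 2)]
    odd = [(i, i + 1) for i in range(1, n_qubits - 1, 2)]
    return even + odd

def expval_z(state, wire):
    """<Z> on one qubit: P(qubit = 0) - P(qubit = 1)."""
    probs = np.abs(state) ** 2
    other = tuple(a for a in range(state.ndim) if a != wire)
    marginal = probs.sum(axis=other)
    return marginal[0] - marginal[1]

def quantum_net_numpy(x, weights_flat, n_qubits, q_depth):
    """Single-sample version of H -> RY(x) -> [CNOTs -> RY(w)] * q_depth -> <Z_i>."""
    weights = np.asarray(weights_flat, dtype=float).reshape(q_depth, n_qubits)
    state = np.zeros((2,) * n_qubits)
    state[(0,) * n_qubits] = 1.0  # start in |0...0>
    for q in range(n_qubits):
        state = apply_1q(state, H, q)
    for q in range(n_qubits):
        state = apply_1q(state, ry_matrix(x[q]), q)
    for k in range(q_depth):
        for c, t in entangling_pairs(n_qubits):
            state = apply_cnot(state, c, t)
        for q in range(n_qubits):
            state = apply_1q(state, ry_matrix(weights[k, q]), q)
    return np.array([expval_z(state, q) for q in range(n_qubits)])

x = np.array([0.1, 0.2, 0.3, 0.4])
print(quantum_net_numpy(x, np.zeros(0), n_qubits=4, q_depth=0))  # equals -np.sin(x)
```

With `q_depth=0` each qubit only sees H followed by RY(x_i), so its expectation value is -sin(x_i), a convenient sanity check. For four qubits, `entangling_pairs(4)` gives the brick-wall pattern (0, 1), (2, 3), (1, 2) produced by the two loops in `q_entangling_vqc`. In `QNet`, `MeasureAll` with the observables `Z0` to `Z3` should correspond to these four per-qubit values, evaluated for every sample in the batch.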

The following code downloads and loads the MNIST data:

url_base = 'https://ossci-datasets.s3.amazonaws.com/mnist/'
key_file = {
    "train_img": "train-images-idx3-ubyte.gz",
    "train_label": "train-labels-idx1-ubyte.gz",
    "test_img": "t10k-images-idx3-ubyte.gz",
    "test_label": "t10k-labels-idx1-ubyte.gz"
}

def _download(dataset_dir, file_name):
    """
    Download one gzipped MNIST file if it is missing, then decompress it.
    """
    file_path = dataset_dir + "/" + file_name
    if not os.path.exists(file_path):
        print("Downloading " + file_name + " ... ")
        urllib.request.urlretrieve(url_base + file_name, file_path)
        print("Done")
    with gzip.GzipFile(file_path) as file:
        # "train-images-idx3-ubyte.gz" -> "train-images.idx3-ubyte",
        # which is the file name that load_mnist() opens
        file_path_ungz = file_path[:-3].replace("\\", "/")
        file_path_ungz = file_path_ungz.replace("-idx", ".idx")
        if not os.path.exists(file_path_ungz):
            with open(file_path_ungz, "wb") as out:
                out.write(file.read())

def download_mnist(dataset_dir):
    # Make sure the target directory exists before downloading into it
    os.makedirs(dataset_dir, exist_ok=True)
    for v in key_file.values():
        _download(dataset_dir, v)
    if not os.path.exists("./result"):
        os.makedirs("./result")

def load_mnist(dataset="training_data",
               digits=np.arange(2),
               path="examples"):
    """
    Load MNIST data
    """
    from array import array as pyarray
    download_mnist(path)
    if dataset == "training_data":
        fname_image = os.path.join(path, "train-images.idx3-ubyte").replace("\\", "/")
        fname_label = os.path.join(path, "train-labels.idx1-ubyte").replace("\\", "/")
    elif dataset == "testing_data":
        fname_image = os.path.join(path, "t10k-images.idx3-ubyte").replace("\\", "/")
        fname_label = os.path.join(path, "t10k-labels.idx1-ubyte").replace("\\", "/")
    else:
        raise ValueError("dataset must be 'training_data' or 'testing_data'")
    # Label file header: magic number and item count, both big-endian uint32
    with open(fname_label, "rb") as flbl:
        _, size = struct.unpack(">II", flbl.read(8))
        lbl = pyarray("b", flbl.read())
    fimg = open(fname_image, "rb")