Quantum Machine Learning Using Qpanda2¶

We use VQNet and pyQPanda2 to implement multiple quantum machine learning examples.

警告

The quantum computing part of the following interface uses pyqpanda2 https://pyqpanda-toturial.readthedocs.io/zh/latest/.

Due to the compatibility issues between pyqpanda2 and pyqpanda3, you need to install pyqpnda2 yourself, pip install pyqpanda

Application of Parameterized Quantum Circuit in Classification Task¶

1. QVC demo¶

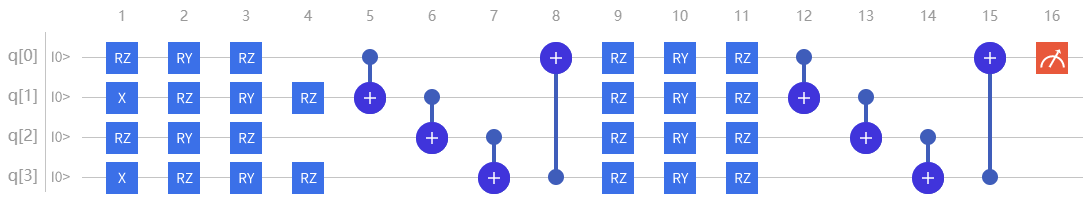

This example uses VQNet to implement the algorithm in the thesis: Circuit-centric quantum classifiers . This example is used to determine whether a binary number is odd or even. By encoding the binary number onto the qubit and optimizing the variable parameters in the circuit, the z-direction observation of the circuit can indicate whether the input is odd or even.

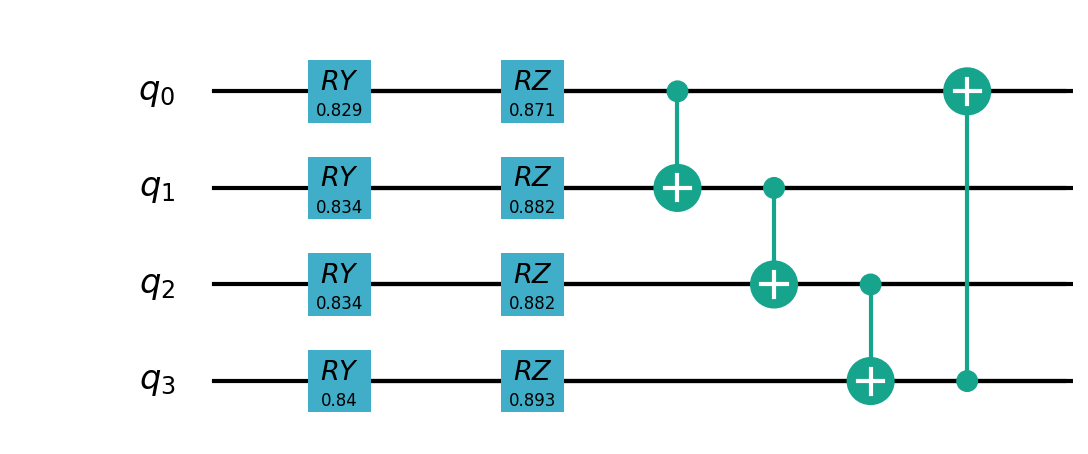

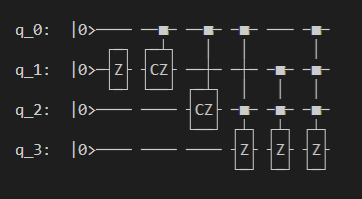

Quantum circuit¶

The variable component sub-circuit usually defines a sub-circuit, which is a basic circuit architecture, and complex variational circuits can be constructed by repeating layers.

Our circuit layer consists of multiple rotating quantum logic gates and CNOT quantum logic gates that entangle each qubit with its neighboring qubits.

We also need a circuit to encode classical data into a quantum state, so that the output of the circuit measurement is related to the input.

In this example, we encode the binary input onto the qubits in the corresponding order. For example, the input data 1101 is encoded into 4 qubits.

import pyqpanda as pq

def qvc_circuits(input,weights,qlist,clist,machine):

def get_cnot(nqubits):

cir = pq.QCircuit()

for i in range(len(nqubits)-1):

cir.insert(pq.CNOT(nqubits[i],nqubits[i+1]))

cir.insert(pq.CNOT(nqubits[len(nqubits)-1],nqubits[0]))

return cir

def build_circult(weights, xx, nqubits):

def Rot(weights_j, qubits):

circult = pq.QCircuit()

circult.insert(pq.RZ(qubits, weights_j[0]))

circult.insert(pq.RY(qubits, weights_j[1]))

circult.insert(pq.RZ(qubits, weights_j[2]))

return circult

def basisstate():

circult = pq.QCircuit()

for i in range(len(nqubits)):

if xx[i] == 1:

circult.insert(pq.X(nqubits[i]))

return circult

circult = pq.QCircuit()

circult.insert(basisstate())

for i in range(weights.shape[0]):

weights_i = weights[i,:,:]

for j in range(len(nqubits)):

weights_j = weights_i[j]

circult.insert(Rot(weights_j,nqubits[j]))

cnots = get_cnot(nqubits)

circult.insert(cnots)

circult.insert(pq.Z(nqubits[0]))

prog = pq.QProg()

prog.insert(circult)

return prog

weights = weights.reshape([2,4,3])

prog = build_circult(weights,input,qlist)

prob = machine.prob_run_dict(prog, qlist[0], -1)

prob = list(prob.values())

return prob

Model building¶

We have defined variable quantum circuits qvc_circuits .

We hope to use it in our VQNet’s automatic differentiation framework,

to take advantage of VQNet’s optimization fucntions for model training.

We define a Model class, which inherits from the abstract class Module.

The model uses the QuantumLayer class, which is a quantum computing layer that can be automatically differentiated.

qvc_circuits is the quantum circuit we want to run,

24 is the number of all quantum circuit parameters that need to be trained,

“cpu” means that pyQPanda2’s full amplitude simulator is used here,

and 4 means that 4 qubits need to be applied for.

In the forward() function, the user defines the logic of the model to run forward.

from pyvqnet.nn.module import Module

from pyvqnet.optim.sgd import SGD

from pyvqnet.nn.loss import CategoricalCrossEntropy

from pyvqnet.tensor.tensor import QTensor

from pyvqnet.data import data_generator as dataloader

import pyqpanda as pq

from pyvqnet.qnn.quantumlayer import QuantumLayer

from pyqpanda import *

class Model(Module):

def __init__(self):

super(Model, self).__init__()

self.qvc = QuantumLayer(qvc_circuits,24,"cpu",4)

def forward(self, x):

return self.qvc(x)

Model training and testing¶

We use pre-generated random binary numbers and their odd and even labels. The data as follows.

import numpy as np

import os

qvc_train_data = [0,1,0,0,1,

0, 1, 0, 1, 0,

0, 1, 1, 0, 0,

0, 1, 1, 1, 1,

1, 0, 0, 0, 1,

1, 0, 0, 1, 0,

1, 0, 1, 0, 0,

1, 0, 1, 1, 1,

1, 1, 0, 0, 0,

1, 1, 0, 1, 1,

1, 1, 1, 0, 1,

1, 1, 1, 1, 0]

qvc_test_data= [0, 0, 0, 0, 0,

0, 0, 0, 1, 1,

0, 0, 1, 0, 1,

0, 0, 1, 1, 0]

def get_data(dataset_str):

if dataset_str == "train":

datasets = np.array(qvc_train_data)

else:

datasets = np.array(qvc_test_data)

datasets = datasets.reshape([-1,5])

data = datasets[:,:-1]

label = datasets[:,-1].astype(int)

label = np.eye(2)[label].reshape(-1,2)

return data, label

Model forwarding, loss function calculation, reverse calculation, optimizer calculation can perform like the general neural network training mode,until the number of iterations reaches the preset value. The training data used is generated above, and the test data is qvc_test_data and train data is qvc_train_data.

def get_accuary(result,label):

result,label = np.array(result.data), np.array(label.data)

score = np.sum(np.argmax(result,axis=1)==np.argmax(label,1))

return score

model = Model()

optimizer = SGD(model.parameters(),lr =0.1)

batch_size = 3

epoch = 20

loss = CategoricalCrossEntropy()

model.train()

datas,labels = get_data("train")

for i in range(epoch):

count=0

sum_loss = 0

accuary = 0

t = 0

for data,label in dataloader(datas,labels,batch_size,False):

optimizer.zero_grad()

data,label = QTensor(data), QTensor(label)

result = model(data)

loss_b = loss(label,result)

loss_b.backward()

optimizer._step()

sum_loss += loss_b.item()

count+=batch_size

accuary += get_accuary(result,label)

t = t + 1

print(f"epoch:{i}, #### loss:{sum_loss/count} #####accuray:{accuary/count}")

model.eval()

count = 0

test_data,test_label = get_data("test")

test_batch_size = 1

accuary = 0

sum_loss = 0

for testd,testl in dataloader(test_data,test_label,test_batch_size):

testd = QTensor(testd)

test_result = model(testd)

test_loss = loss(testl,test_result)

sum_loss += test_loss

count+=test_batch_size

accuary += get_accuary(test_result,testl)

print(f"test:--------------->loss:{sum_loss/count} #####accuray:{accuary/count}")

epoch:0, #### loss:0.20194714764753977 #####accuray:0.6666666666666666

epoch:1, #### loss:0.19724808633327484 #####accuray:0.8333333333333334

epoch:2, #### loss:0.19266503552595773 #####accuray:1.0

epoch:3, #### loss:0.18812804917494455 #####accuray:1.0

epoch:4, #### loss:0.1835678368806839 #####accuray:1.0

epoch:5, #### loss:0.1789149840672811 #####accuray:1.0

epoch:6, #### loss:0.17410411685705185 #####accuray:1.0

epoch:7, #### loss:0.16908332953850427 #####accuray:1.0

epoch:8, #### loss:0.16382796317338943 #####accuray:1.0

epoch:9, #### loss:0.15835540741682053 #####accuray:1.0

epoch:10, #### loss:0.15273457020521164 #####accuray:1.0

epoch:11, #### loss:0.14708336691061655 #####accuray:1.0

epoch:12, #### loss:0.14155150949954987 #####accuray:1.0

epoch:13, #### loss:0.1362930883963903 #####accuray:1.0

epoch:14, #### loss:0.1314386005202929 #####accuray:1.0

epoch:15, #### loss:0.12707658857107162 #####accuray:1.0

epoch:16, #### loss:0.123248390853405 #####accuray:1.0

epoch:17, #### loss:0.11995399743318558 #####accuray:1.0

epoch:18, #### loss:0.1171633576353391 #####accuray:1.0

epoch:19, #### loss:0.11482855677604675 #####accuray:1.0

[0.3412148654]

test:--------------->loss:QTensor(0.3412148654, requires_grad=True) #####accuray:1.0

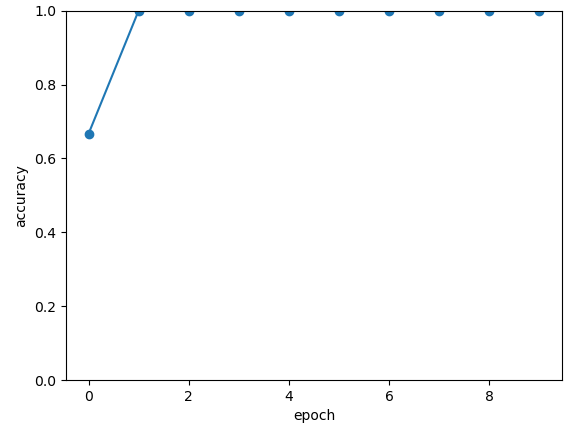

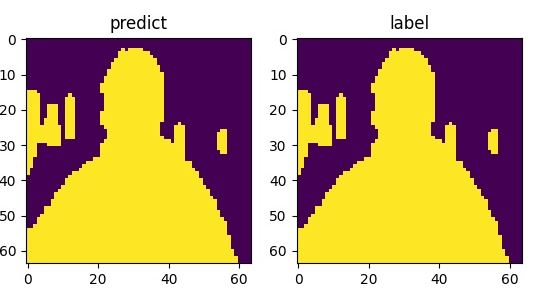

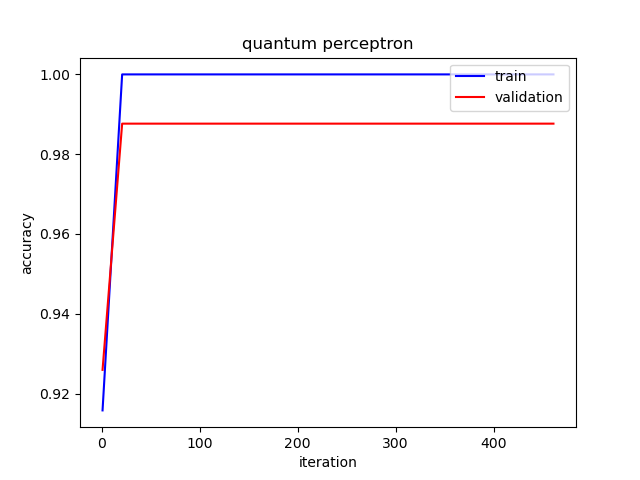

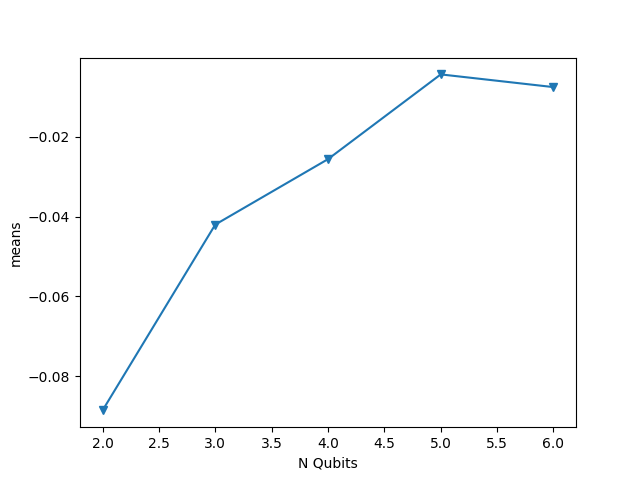

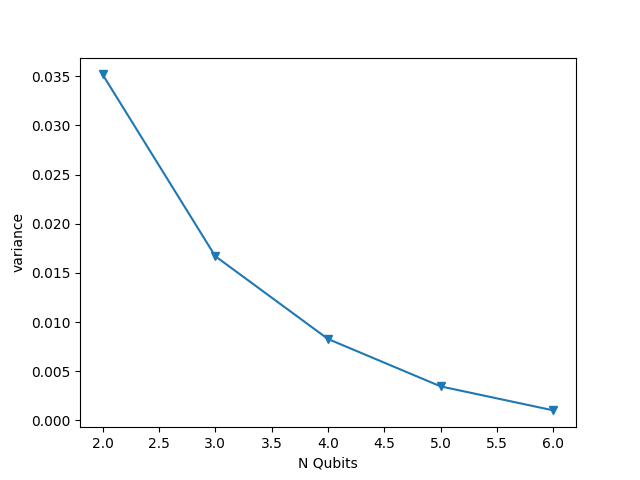

The following picture illustrates the curve of model’s accuracy:

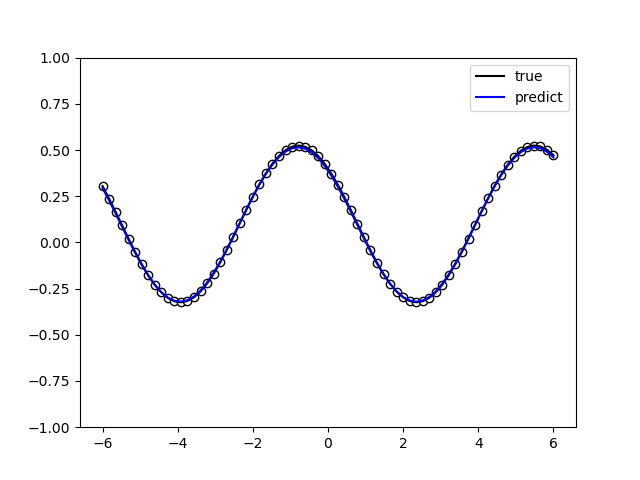

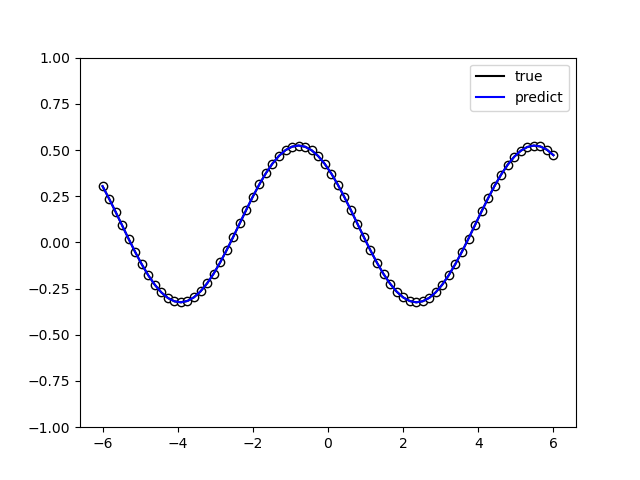

2. data re-uploading algorithm¶

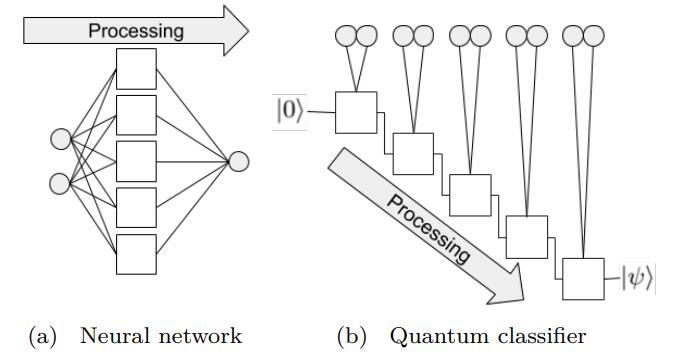

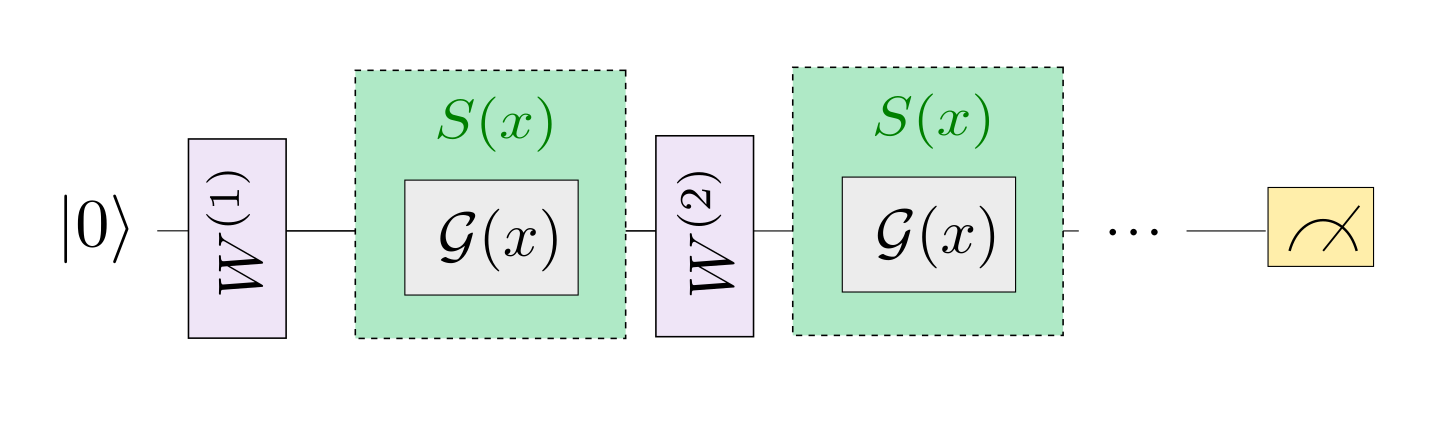

In a neural network, each neuron receives information from all neurons in the upper layer (Figure a). In contrast, the single-bit quantum classifier accepts the previous information processing unit and input (Figure b). For traditional quantum circuits, when the data is uploaded, the result can be obtained directly through several unitary transformations \(U(\theta_1,\theta_2,\theta_3)\).However, in the Quantum Data Re upLoading (QDRL) task, the data needs to be re-uploaded before every unitary transformation.

Comparison of QDRL and classic neural network schematics

import sys

sys.path.insert(0, "../")

import numpy as np

from pyvqnet.nn.linear import Linear

from pyvqnet.qnn.qdrl.vqnet_model import vmodel

from pyvqnet.optim import sgd

from pyvqnet.nn.loss import CategoricalCrossEntropy

from pyvqnet.tensor.tensor import QTensor

from pyvqnet.nn.module import Module

import matplotlib.pyplot as plt

import matplotlib

from pyvqnet.data import data_generator as get_minibatch_data

try:

matplotlib.use("TkAgg")

except:

print("Can not use matplot TkAgg")

pass

np.random.seed(42)

num_layers = 3

params = np.random.uniform(size=(num_layers, 3))

class Model(Module):

def __init__(self):

super(Model, self).__init__()

self.pqc = vmodel(params.shape)

self.fc2 = Linear(2, 2)

def forward(self, x):

x = self.pqc(x)

return x

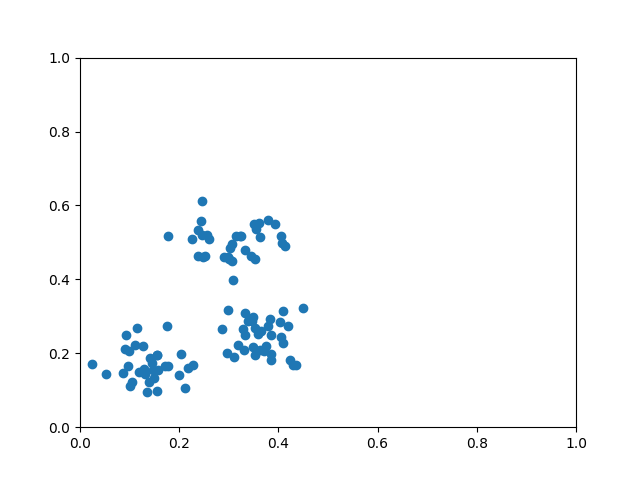

def circle(samples: int, reps=np.sqrt(1 / 2)):

data_x, data_y = [], []

for _ in range(samples):

x = np.random.rand(2)

y = [0, 1]

if np.linalg.norm(x) < reps:

y = [1, 0]

data_x.append(x)

data_y.append(y)

return np.array(data_x), np.array(data_y)

def plot_data(x, y, fig=None, ax=None):

if fig is None:

fig, ax = plt.subplots(1, 1, figsize=(5, 5))

reds = y == 0

blues = y == 1

ax.scatter(x[reds, 0], x[reds, 1], c="red", s=20, edgecolor="k")

ax.scatter(x[blues, 0], x[blues, 1], c="blue", s=20, edgecolor="k")

ax.set_xlabel("$x_1$")

ax.set_ylabel("$x_2$")

def get_score(pred, label):

pred, label = np.array(pred.data), np.array(label.data)

score = np.sum(np.argmax(pred, axis=1) == np.argmax(label, 1))

return score

model = Model()

optimizer = sgd.SGD(model.parameters(), lr=1)

def train():

"""

Main function for train qdrl model

"""

batch_size = 5

model.train()

x_train, y_train = circle(500)

x_train = np.hstack((x_train, np.ones((x_train.shape[0], 1)))) # 500*3

epoch = 10

print("start training...........")

for i in range(epoch):

accuracy = 0

count = 0

loss = 0

for data, label in get_minibatch_data(x_train, y_train, batch_size):

optimizer.zero_grad()

data, label = QTensor(data), QTensor(label)

output = model(data)

loss_fun = CategoricalCrossEntropy()

losss = loss_fun(label, output)

losss.backward()

optimizer._step()

accuracy += get_score(output, label)

loss += losss.item()

count += batch_size

print(f"epoch:{i}, train_accuracy_for_each_batch:{accuracy/count}")

print(f"epoch:{i}, train_loss_for_each_batch:{loss/count}")

def test():

batch_size = 5

model.eval()

print("start eval...................")

x_test, y_test = circle(500)

test_accuracy = 0

count = 0

x_test = np.hstack((x_test, np.ones((x_test.shape[0], 1))))

for test_data, test_label in get_minibatch_data(x_test, y_test,

batch_size):

test_data, test_label = QTensor(test_data), QTensor(test_label)

output = model(test_data)

test_accuracy += get_score(output, test_label)

count += batch_size

print(f"test_accuracy:{test_accuracy/count}")

if __name__ == "__main__":

train()

test()

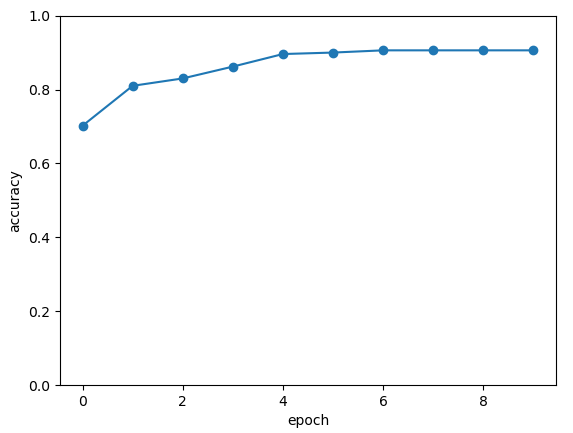

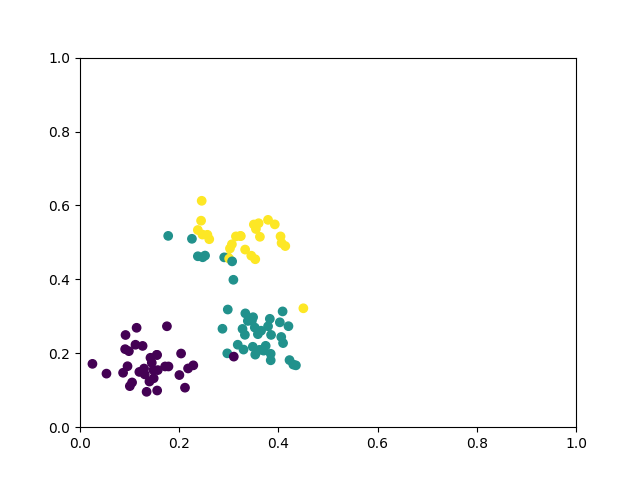

The following picture illustrates the curve of model’s accuracy:

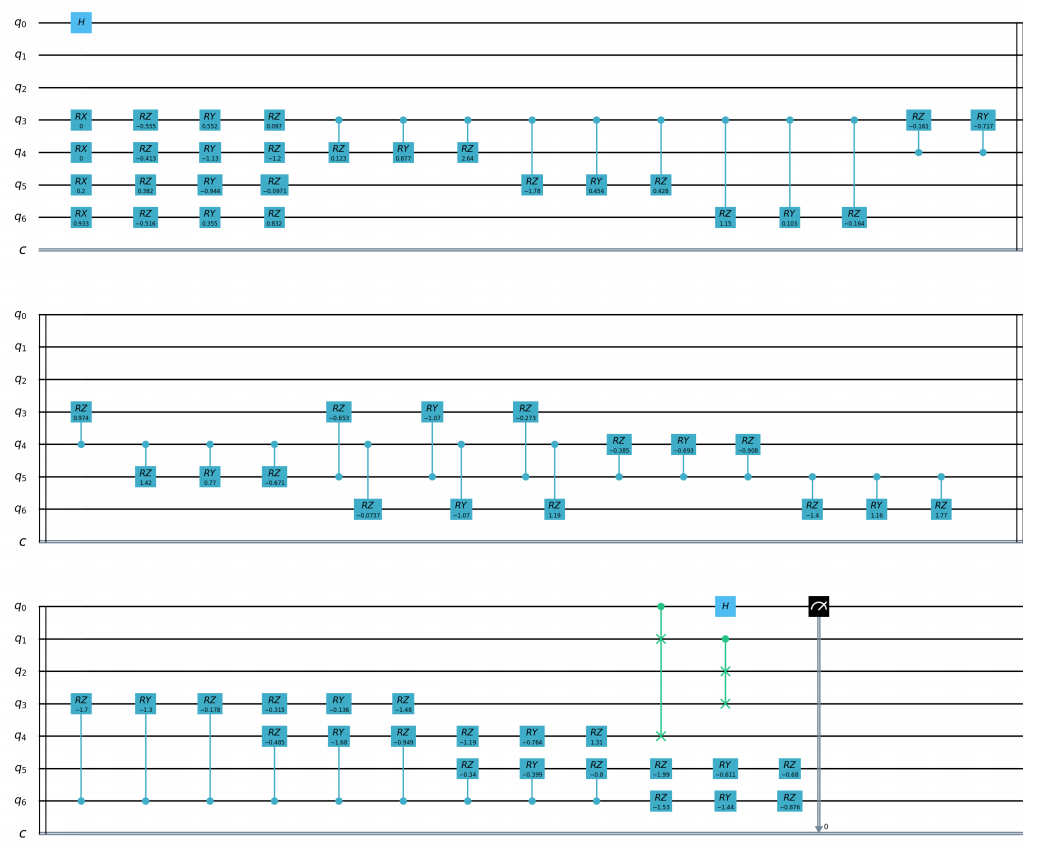

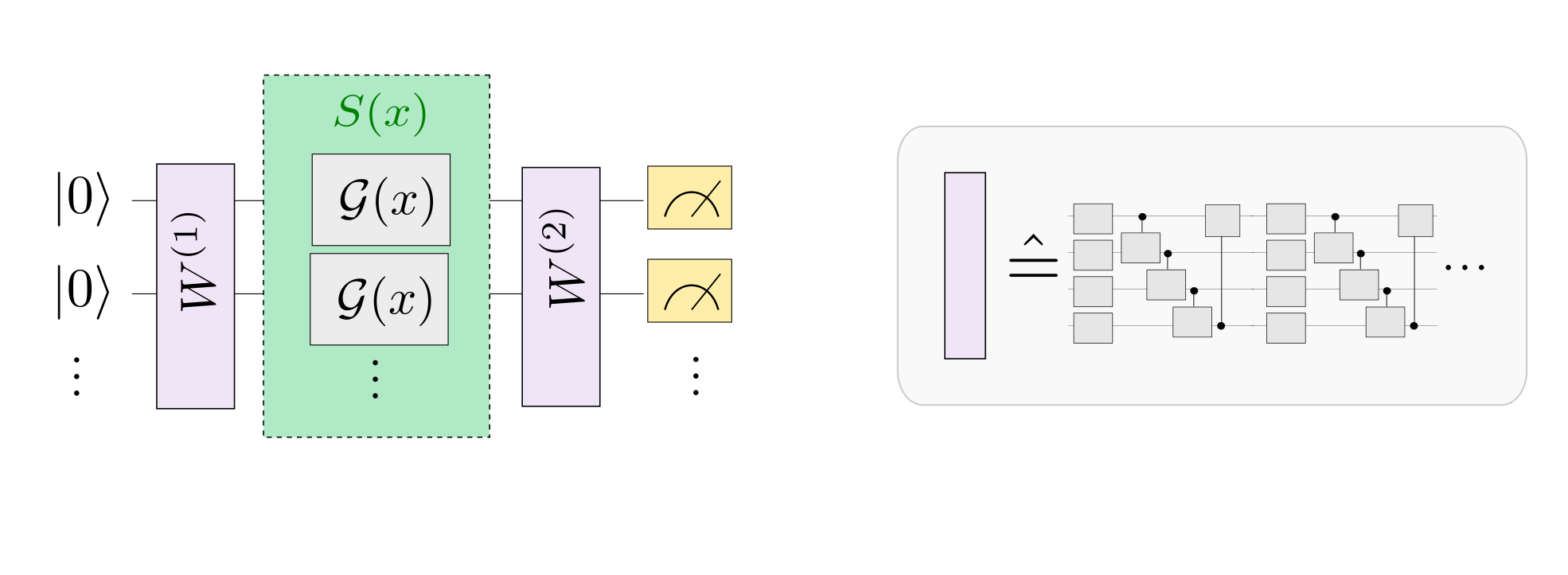

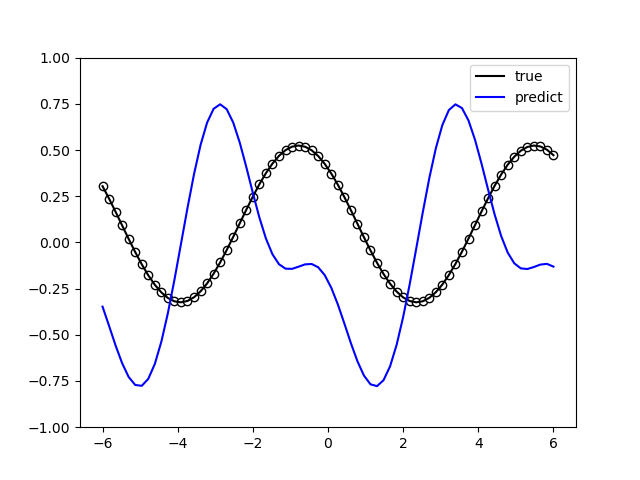

3. VSQL: Variational Shadow Quantum Learning for Classification Model¶

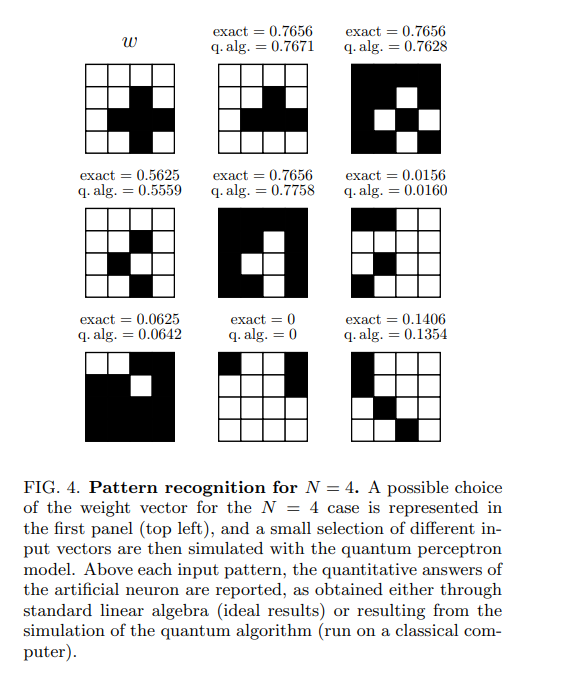

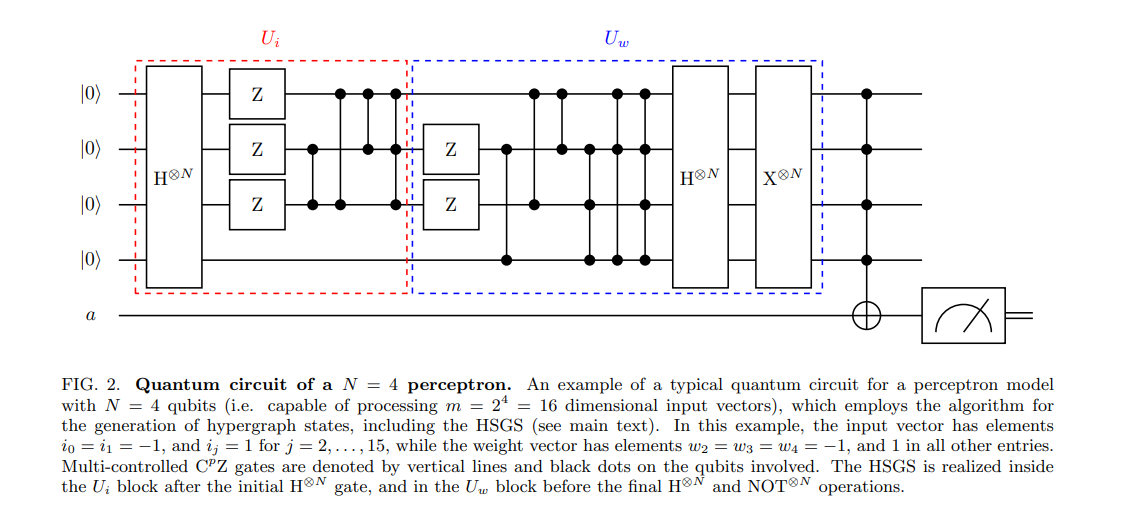

Using variable quantum circuits to construct a two-class classification model, comparing the classification accuracy with a neural network with similar parameter accuracy, the accuracy of the two is similar. The quantity of parameters of quantum circuits is much smaller than that of classical neural networks. The algorithm is based on the paper: Variational Shadow Quantum Learning for Classification Model to reproduce.

Following figure shows the architecture of VSQL algorithm:

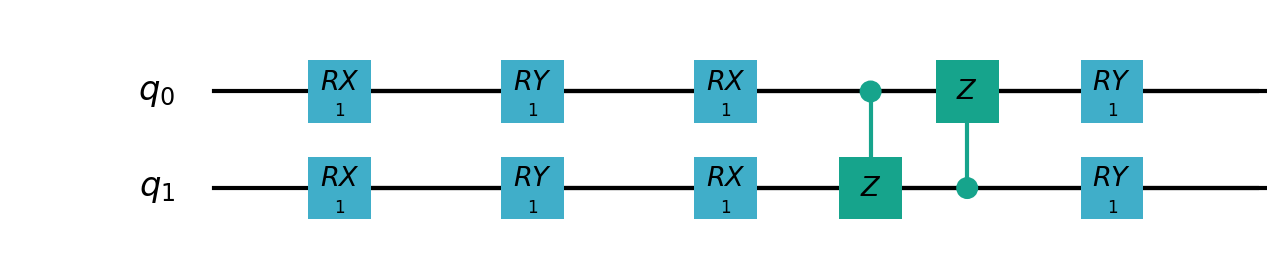

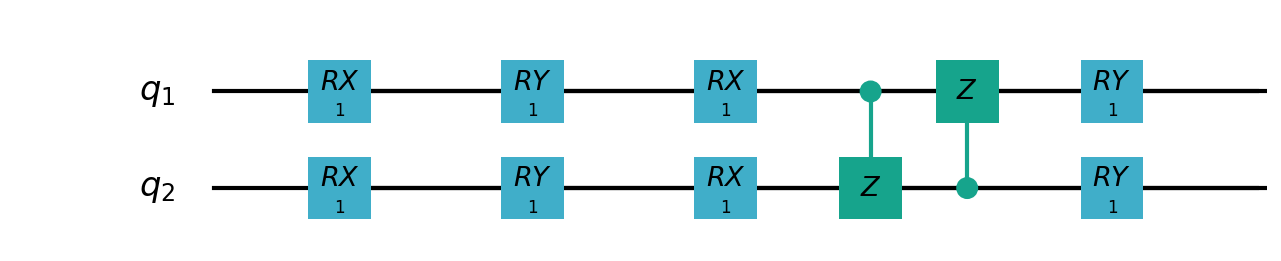

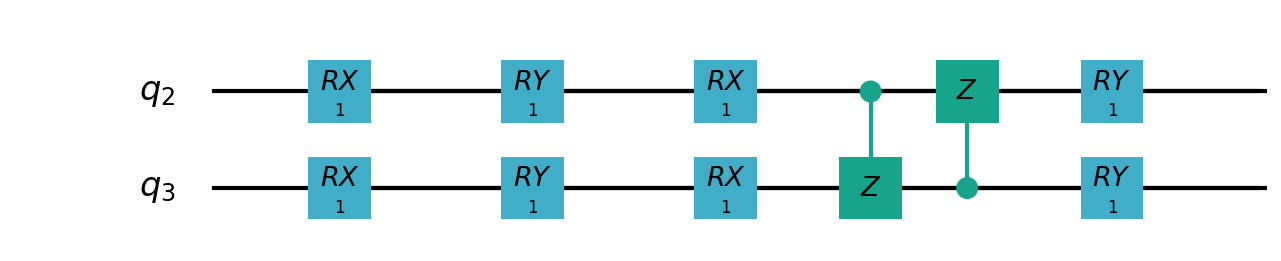

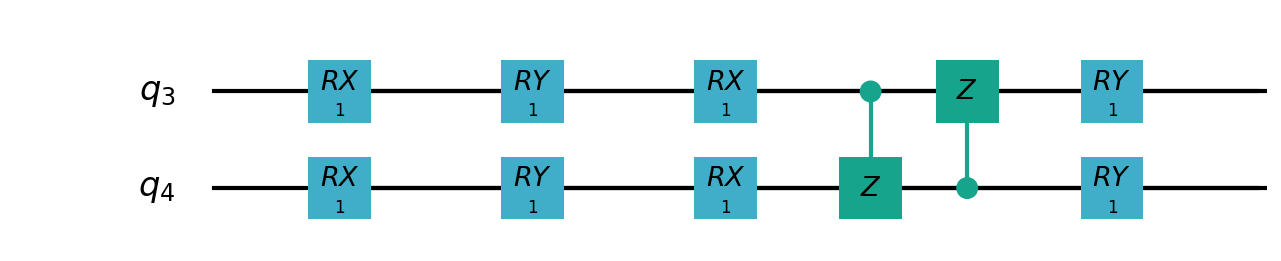

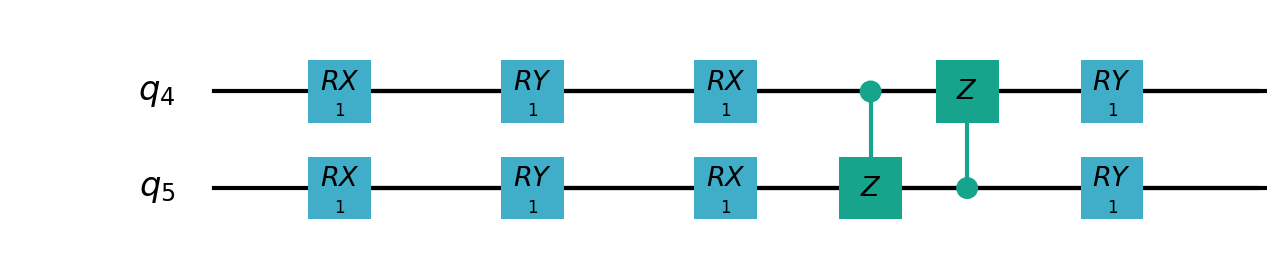

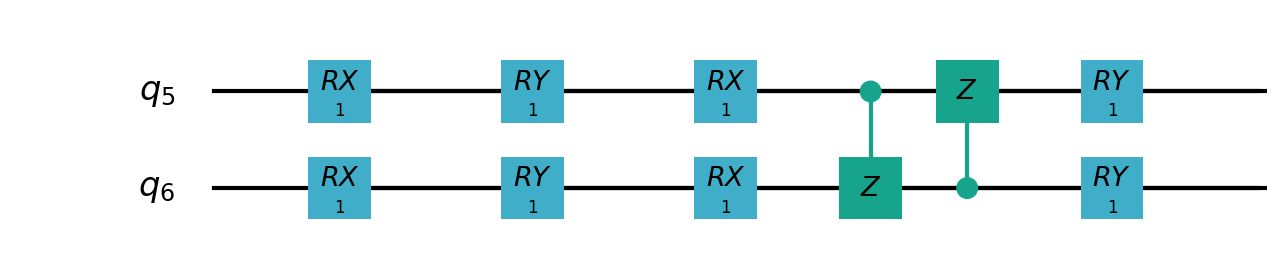

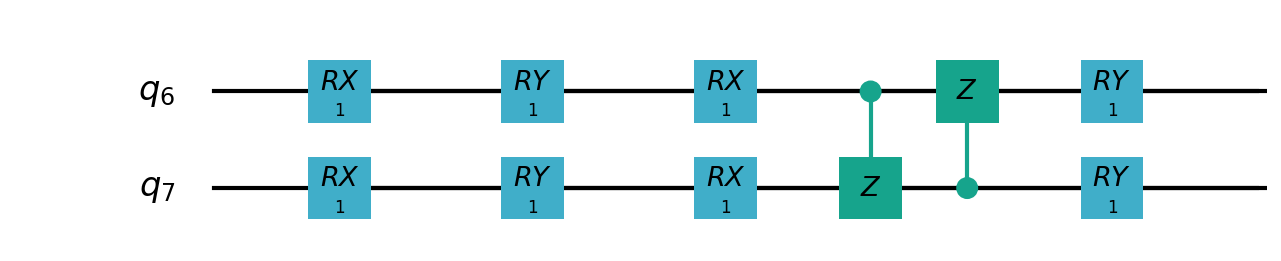

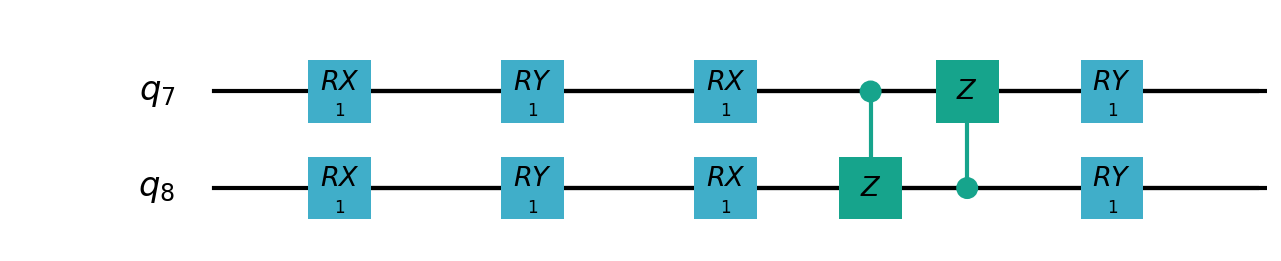

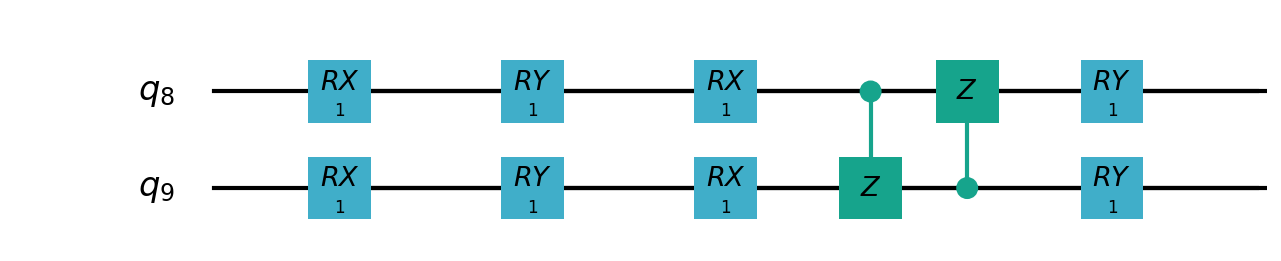

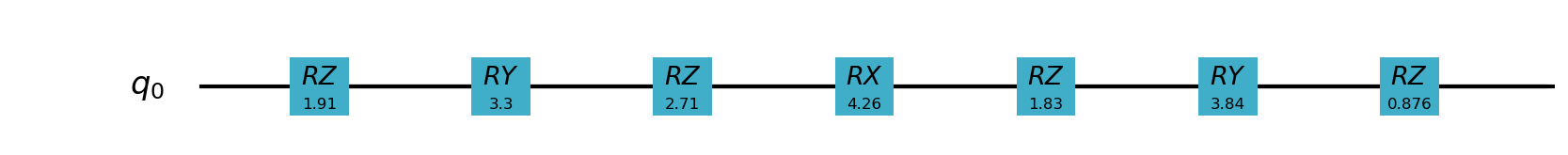

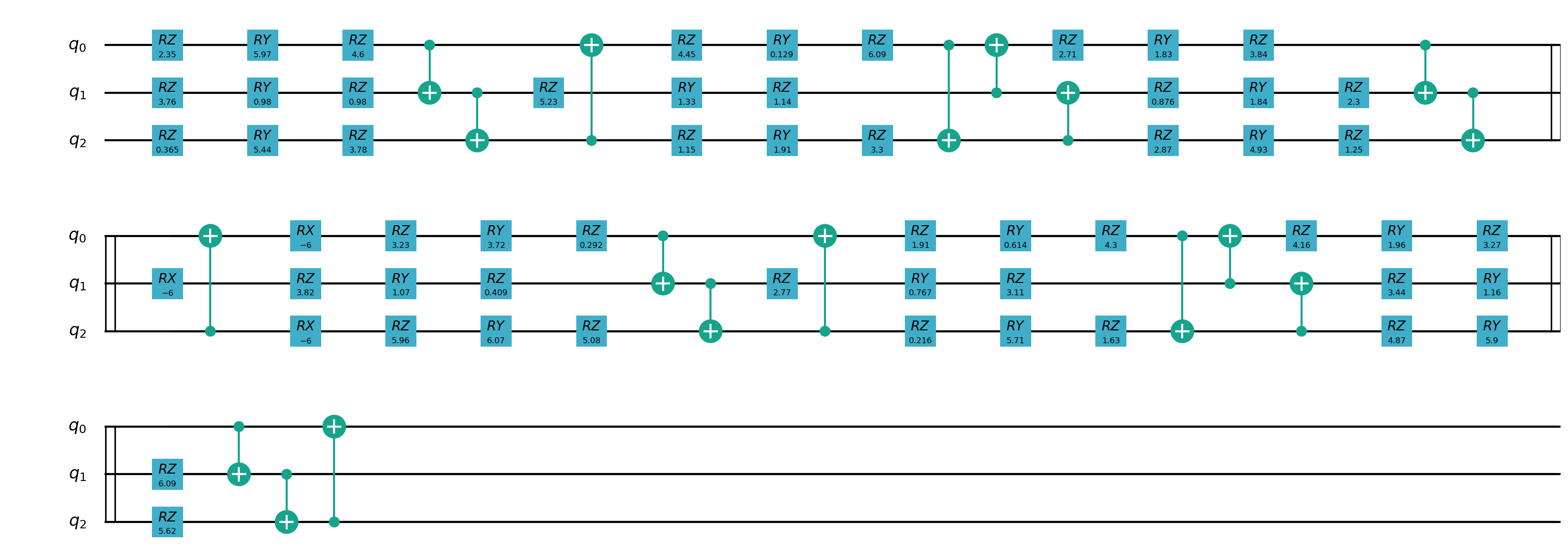

Following figures show the local quantum circuits structure on each qubits:

import sys

sys.path.insert(0, "../")

import os

import os.path

import struct

import gzip

from pyvqnet.nn.module import Module

from pyvqnet.nn.loss import CategoricalCrossEntropy

from pyvqnet.optim.adam import Adam

from pyvqnet.data.data import data_generator

from pyvqnet.tensor import tensor

from pyvqnet.qnn.measure import expval

from pyvqnet.qnn.quantumlayer import QuantumLayer

from pyvqnet.qnn.template import AmplitudeEmbeddingCircuit

from pyvqnet.nn.linear import Linear

import numpy as np

import pyqpanda as pq

import matplotlib.pyplot as plt

import matplotlib

try:

matplotlib.use("TkAgg")

except:

print("Can not use matplot TkAgg")

pass

try:

import urllib.request

except ImportError:

raise ImportError("You should use Python 3.x")

url_base = 'https://ossci-datasets.s3.amazonaws.com/mnist/'

key_file = {

"train_img": "train-images-idx3-ubyte.gz",

"train_label": "train-labels-idx1-ubyte.gz",

"test_img": "t10k-images-idx3-ubyte.gz",

"test_label": "t10k-labels-idx1-ubyte.gz"

}

def _download(dataset_dir, file_name):

"""

Download function for mnist dataset file

"""

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

file_path_ungz = file_path_ungz.replace("-idx", ".idx")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

print("Done")

def download_mnist(dataset_dir):

for v in key_file.values():

_download(dataset_dir, v)

if not os.path.exists("./result"):

os.makedirs("./result")

else:

pass

def circuits_of_vsql(x, weights, qlist, clist, machine):

"""

VSQL model of quantum circuits

"""

weights = weights.reshape([depth + 1, 3, n_qsc])

def subcir(weights, qlist, depth, n_qsc, n_start):

cir = pq.QCircuit()

for i in range(n_qsc):

cir.insert(pq.RX(qlist[n_start + i], weights[0][0][i]))

cir.insert(pq.RY(qlist[n_start + i], weights[0][1][i]))

cir.insert(pq.RX(qlist[n_start + i], weights[0][2][i]))

for repeat in range(1, depth + 1):

for i in range(n_qsc - 1):

cir.insert(pq.CNOT(qlist[n_start + i], qlist[n_start + i + 1]))

cir.insert(pq.CNOT(qlist[n_start + n_qsc - 1], qlist[n_start]))

for i in range(n_qsc):

cir.insert(pq.RY(qlist[n_start + i], weights[repeat][1][i]))

return cir

def get_pauli_str(n_start, n_qsc):

pauli_str = ",".join("X" + str(i)

for i in range(n_start, n_start + n_qsc))

return {pauli_str: 1.0}

f_i = []

origin_in = AmplitudeEmbeddingCircuit(x, qlist)

for st in range(n - n_qsc + 1):

psd = get_pauli_str(st, n_qsc)

cir = pq.QCircuit()

cir.insert(origin_in)

cir.insert(subcir(weights, qlist, depth, n_qsc, st))

prog = pq.QProg()

prog.insert(cir)

f_ij = expval(machine, prog, psd, qlist)

f_i.append(f_ij)

f_i = np.array(f_i)

return f_i

#GLOBAL VAR

n = 10

n_qsc = 2

depth = 1

class QModel(Module):

"""

Model of VSQL

"""

def __init__(self):

super().__init__()

self.vq = QuantumLayer(circuits_of_vsql, (depth + 1) * 3 * n_qsc,

"cpu", 10)

self.fc = Linear(n - n_qsc + 1, 2)

def forward(self, x):

x = self.vq(x)

x = self.fc(x)

return x

class Model(Module):

def __init__(self):

super().__init__()

self.fc1 = Linear(input_channels=28 * 28, output_channels=2)

def forward(self, x):

x = tensor.flatten(x, 1)

x = self.fc1(x)

return x

def load_mnist(dataset="training_data", digits=np.arange(2), path="./"):

"""

load mnist data

"""

from array import array as pyarray

download_mnist(path)

if dataset == "training_data":

fname_image = os.path.join(path, "train-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "train-labels.idx1-ubyte").replace(

"\\", "/")

elif dataset == "testing_data":

fname_image = os.path.join(path, "t10k-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "t10k-labels.idx1-ubyte").replace(

"\\", "/")

else:

raise ValueError("dataset must be 'training_data' or 'testing_data'")

flbl = open(fname_label, "rb")

_, size = struct.unpack(">II", flbl.read(8))

lbl = pyarray("b", flbl.read())

flbl.close()

fimg = open(fname_image, "rb")

_, size, rows, cols = struct.unpack(">IIII", fimg.read(16))

img = pyarray("B", fimg.read())

fimg.close()

ind = [k for k in range(size) if lbl[k] in digits]

num = len(ind)

images = np.zeros((num, rows, cols), dtype=np.float32)

labels = np.zeros((num, 1), dtype=int)

for i in range(len(ind)):

images[i] = np.array(img[ind[i] * rows * cols:(ind[i] + 1) * rows *

cols]).reshape((rows, cols))

labels[i] = lbl[ind[i]]

return images, labels

def run_vsql():

"""

VQSL MODEL

"""

digits = [0, 1]

x_train, y_train = load_mnist("training_data", digits)

x_train = x_train / 255

y_train = y_train.reshape(-1, 1)

y_train = np.eye(len(digits))[y_train].reshape(-1, len(digits)).astype(

np.int64)

x_test, y_test = load_mnist("testing_data", digits)

x_test = x_test / 255

y_test = y_test.reshape(-1, 1)

y_test = np.eye(len(digits))[y_test].reshape(-1,

len(digits)).astype(np.int64)

x_train_list = []

x_test_list = []

for i in range(x_train.shape[0]):

x_train_list.append(

np.pad(x_train[i, :, :].flatten(), (0, 240),

constant_values=(0, 0)))

x_train = np.array(x_train_list)

for i in range(x_test.shape[0]):

x_test_list.append(

np.pad(x_test[i, :, :].flatten(), (0, 240),

constant_values=(0, 0)))

x_test = np.array(x_test_list)

x_train = x_train[:500]

y_train = y_train[:500]

x_test = x_test[:100]

y_test = y_test[:100]

print("model start")

model = QModel()

optimizer = Adam(model.parameters(), lr=0.1)

model.train()

result_file = open("./result/vqslrlt.txt", "w")

for epoch in range(1, 3):

model.train()

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 1

correct = 0

for x, y in data_generator(x_train,

y_train,

batch_size=batch_size,

shuffle=True):

optimizer.zero_grad()

try:

x = x.reshape(batch_size, 1024)

except:

x = x.reshape(-1, 1024)

output = model(x)

cceloss = CategoricalCrossEntropy()

loss = cceloss(y, output)

loss.backward()

optimizer._step()

full_loss += loss.item()

n_loss += batch_size

np_output = np.array(output.data, copy=False)

mask = np_output.argmax(1) == y.argmax(1)

correct += sum(mask)

print(f" n_loss {n_loss} Train Accuracy: {correct/n_loss} ")

print(f"Train Accuracy: {correct/n_loss} ")

print(f"Epoch: {epoch}, Loss: {full_loss / n_loss}")

result_file.write(f"{epoch}\t{full_loss / n_loss}\t{correct/n_loss}\t")

# Evaluation

model.eval()

print("eval")

correct = 0

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 1

for x, y in data_generator(x_test,

y_test,

batch_size=batch_size,

shuffle=True):

x = x.reshape(1, 1024)

output = model(x)

cceloss = CategoricalCrossEntropy()

loss = cceloss(y, output)

full_loss += loss.item()

np_output = np.array(output.data, copy=False)

mask = np_output.argmax(1) == y.argmax(1)

correct += sum(mask)

n_eval += 1

n_loss += 1

print(f"Eval Accuracy: {correct/n_eval}")

result_file.write(f"{full_loss / n_loss}\t{correct/n_eval}\n")

result_file.close()

del model

print("\ndone vqsl\n")

if __name__ == "__main__":

run_vsql()

The following shows the curve of model’s accuracy and loss:

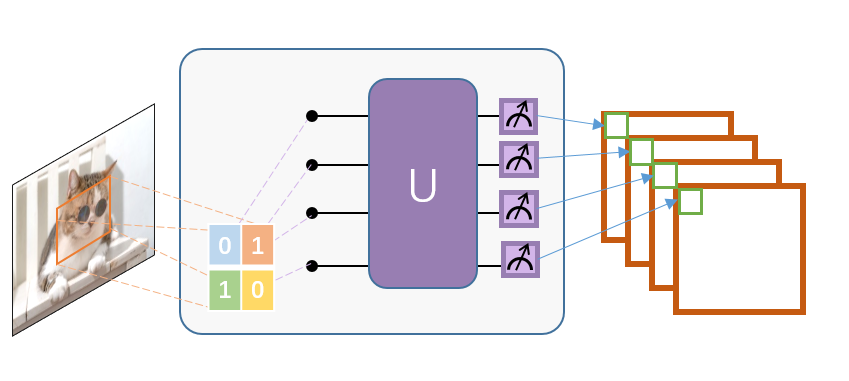

4.Quanvolution for image classification¶

In this example, we implement a Quantum Convolutional Neural Network, a type originally introduced in the paper Quanvolutional Neural Networks: Powering Image Recognition with Quantum Circuits method.

Similar to classic convolution, Quanvolution has the following steps:

A small region of the input image, in our case a 2×2 square of classical data, is embedded into the quantum circuit.

In this example, this is achieved by applying parameterized rotating logic gates to qubits initialized in the ground state. The convolutional kernel here generates variational circuits from stochastic circuits proposed in ref.

Finally, the quantum system is measured to obtain a list of classical expected values.

Similar to a classic convolutional layer, each expected value is mapped to a different channel of a single output pixel.

Repeating the same process over different regions, the complete input image can be scanned, producing an output object that will be constructed as a multi-channel image.

In order to perform classification tasks, this example uses the classic fully connected layer Linear to perform classification tasks after Quanvolution obtains the measurement values.

The main difference from classical convolution is that Quanvolution can generate highly complex kernels whose computation is at least in principle classically intractable.

Mnist dataset definition

import os

import os.path

import struct

import gzip

import sys

sys.path.insert(0, "../")

from pyvqnet.nn.module import Module

from pyvqnet.nn.loss import NLL_Loss

from pyvqnet.optim.adam import Adam

from pyvqnet.data.data import data_generator

from pyvqnet.tensor import tensor

from pyvqnet.qnn.measure import expval

from pyvqnet.nn.linear import Linear

import numpy as np

from pyvqnet.qnn.qcnn import Quanvolution

import matplotlib.pyplot as plt

import matplotlib

try:

matplotlib.use("TkAgg")

except:

print("Can not use matplot TkAgg")

pass

try:

import urllib.request

except ImportError:

raise ImportError("You should use Python 3.x")

url_base = 'https://ossci-datasets.s3.amazonaws.com/mnist/'

key_file = {

"train_img": "train-images-idx3-ubyte.gz",

"train_label": "train-labels-idx1-ubyte.gz",

"test_img": "t10k-images-idx3-ubyte.gz",

"test_label": "t10k-labels-idx1-ubyte.gz"

}

def _download(dataset_dir, file_name):

"""

Download function for mnist dataset file

"""

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as file:

file_path_ungz = file_path[:-3].replace("\\", "/")

file_path_ungz = file_path_ungz.replace("-idx", ".idx")

if not os.path.exists(file_path_ungz):

open(file_path_ungz, "wb").write(file.read())

print("Done")

def download_mnist(dataset_dir):

for v in key_file.values():

_download(dataset_dir, v)

if not os.path.exists("./result"):

os.makedirs("./result")

else:

pass

def load_mnist(dataset="training_data", digits=np.arange(10), path="./"):

"""

load mnist data

"""

from array import array as pyarray

download_mnist(path)

if dataset == "training_data":

fname_image = os.path.join(path, "train-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "train-labels.idx1-ubyte").replace(

"\\", "/")

elif dataset == "testing_data":

fname_image = os.path.join(path, "t10k-images.idx3-ubyte").replace(

"\\", "/")

fname_label = os.path.join(path, "t10k-labels.idx1-ubyte").replace(

"\\", "/")

else:

raise ValueError("dataset must be 'training_data' or 'testing_data'")

flbl = open(fname_label, "rb")

_, size = struct.unpack(">II", flbl.read(8))

lbl = pyarray("b", flbl.read())

flbl.close()

fimg = open(fname_image, "rb")

_, size, rows, cols = struct.unpack(">IIII", fimg.read(16))

img = pyarray("B", fimg.read())

fimg.close()

ind = [k for k in range(size) if lbl[k] in digits]

num = len(ind)

images = np.zeros((num, rows, cols))

labels = np.zeros((num, 1), dtype=int)

for i in range(len(ind)):

images[i] = np.array(img[ind[i] * rows * cols:(ind[i] + 1) * rows *

cols]).reshape((rows, cols))

labels[i] = lbl[ind[i]]

return images, labels

Module definition and process function’s definition

class QModel(Module):

def __init__(self):

super().__init__()

self.vq = Quanvolution([4, 2], (2, 2))

self.fc = Linear(4 * 14 * 14, 10)

def forward(self, x):

x = self.vq(x)

x = tensor.flatten(x, 1)

x = self.fc(x)

x = tensor.log_softmax(x)

return x

def run_quanvolution():

digit = 10

x_train, y_train = load_mnist("training_data", digits=np.arange(digit))

x_train = x_train / 255

y_train = y_train.flatten()

x_test, y_test = load_mnist("testing_data", digits=np.arange(digit))

x_test = x_test / 255

y_test = y_test.flatten()

x_train = x_train[:500]

y_train = y_train[:500]

x_test = x_test[:100]

y_test = y_test[:100]

print("model start")

model = QModel()

optimizer = Adam(model.parameters(), lr=5e-3)

model.train()

result_file = open("quanvolution.txt", "w")

cceloss = NLL_Loss()

N_EPOCH = 15

for epoch in range(1, N_EPOCH):

model.train()

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 10

correct = 0

for x, y in data_generator(x_train,

y_train,

batch_size=batch_size,

shuffle=True):

optimizer.zero_grad()

try:

x = x.reshape(batch_size, 1, 28, 28)

except:

x = x.reshape(-1, 1, 28, 28)

output = model(x)

loss = cceloss(y, output)

print(f"loss {loss}")

loss.backward()

optimizer._step()

full_loss += loss.item()

n_loss += batch_size

np_output = np.array(output.data, copy=False)

mask = np_output.argmax(1) == y

correct += sum(mask)

print(f"correct {correct}")

print(f"Train Accuracy: {correct/n_loss}%")

print(f"Epoch: {epoch}, Loss: {full_loss / n_loss}")

result_file.write(f"{epoch}\t{full_loss / n_loss}\t{correct/n_loss}\t")

# Evaluation

model.eval()

print("eval")

correct = 0

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 1

for x, y in data_generator(x_test,

y_test,

batch_size=batch_size,

shuffle=True):

x = x.reshape(-1, 1, 28, 28)

output = model(x)

loss = cceloss(y, output)

full_loss += loss.item()

np_output = np.array(output.data, copy=False)

mask = np_output.argmax(1) == y

correct += sum(mask)

n_eval += 1

n_loss += 1

print(f"Eval Accuracy: {correct/n_eval}")

result_file.write(f"{full_loss / n_loss}\t{correct/n_eval}\n")

result_file.close()

del model

print("\ndone\n")

if __name__ == "__main__":

run_quanvolution()

Training set, verification set loss, training set, verification set classification accuracy with Epoch transformation.

# epoch train_loss train_accuracy eval_loss eval_accuracy

# 1 0.2488900272846222 0.232 1.7297331787645818 0.39

# 2 0.12281704187393189 0.646 1.201728610806167 0.61

# 3 0.08001763761043548 0.772 0.8947569639235735 0.73

# 4 0.06211201059818268 0.83 0.777864265316166 0.74

# 5 0.052190632969141004 0.858 0.7291000287979841 0.76

# 6 0.04542196464538574 0.87 0.6764470228599384 0.8

# 7 0.04029472427070141 0.896 0.6153804161818698 0.79

# 8 0.03600500610470772 0.902 0.5644993982824963 0.81

# 9 0.03230033944547176 0.916 0.528938240573043 0.81

# 10 0.02912954458594322 0.93 0.5058713140769396 0.83

# 11 0.026443827204406262 0.936 0.49064547760412097 0.83

# 12 0.024144304402172564 0.942 0.4800815625616815 0.82

# 13 0.022141477409750223 0.952 0.4724775951183983 0.83

# 14 0.020372112181037665 0.956 0.46692863543197743 0.83

Quantum AutoEncoder Demo¶

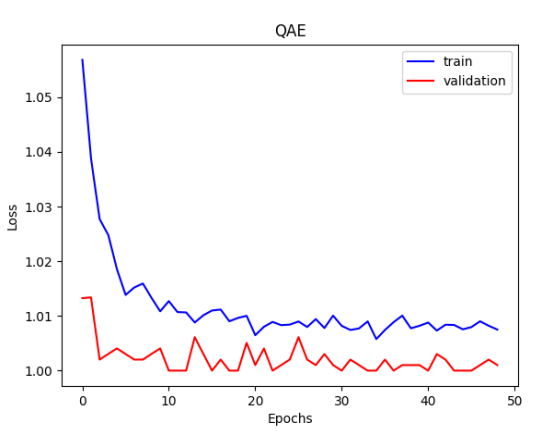

1.Quantum AutoEncoder¶

The classic autoencoder is a neural network that can learn high-efficiency low-dimensional representations of data in a high-dimensional space. The task of the autoencoder is to map x to a low-dimensional point y given an input x, so that x can be recovered from y. The structure of the underlying autoencoder network can be selected to represent the data in a smaller dimension, thereby effectively compressing the input. Inspired by this idea, the model of quantum autoencoder is used to perform similar tasks on quantum data. Quantum autoencoders are trained to compress specific data sets of quantum states, and classical compression algorithms cannot be used. The parameters of the quantum autoencoder are trained using classical optimization algorithms. We show an example of a simple programmable circuit, which can be trained as an efficient autoencoder. We apply our model in the context of quantum simulation to compress the Hubbard model and the ground state of the Hamiltonian. This algorithm is based on Quantum autoencoders for efficient compression of quantum data .

QAE quantum circuits:

import os

import sys

sys.path.insert(0,'../')

import numpy as np

from pyvqnet.nn.module import Module

from pyvqnet.nn.loss import fidelityLoss

from pyvqnet.optim.adam import Adam

from pyvqnet.data.data import data_generator

from pyvqnet.qnn.qae.qae import QAElayer

import matplotlib.pyplot as plt

import matplotlib

try:

matplotlib.use('TkAgg')

except:

pass

try:

import urllib.request

except ImportError:

raise ImportError('You should use Python 3.x')

import os.path

import gzip

url_base = 'https://ossci-datasets.s3.amazonaws.com/mnist/'

key_file = {

'train_img':'train-images-idx3-ubyte.gz',

'train_label':'train-labels-idx1-ubyte.gz',

'test_img':'t10k-images-idx3-ubyte.gz',

'test_label':'t10k-labels-idx1-ubyte.gz'

}

def _download(dataset_dir,file_name):

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as f:

file_path_ungz = file_path[:-3].replace('\\', '/')

if not os.path.exists(file_path_ungz):

open(file_path_ungz,"wb").write(f.read())

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as f:

file_path_ungz = file_path[:-3].replace('\\', '/')

file_path_ungz = file_path_ungz.replace('-idx', '.idx')

if not os.path.exists(file_path_ungz):

open(file_path_ungz,"wb").write(f.read())

print("Done")

def download_mnist(dataset_dir):

for v in key_file.values():

_download(dataset_dir,v)

class Model(Module):

def __init__(self, trash_num: int = 2, total_num: int = 7):

super().__init__()

self.pqc = QAElayer(trash_num, total_num)

def forward(self, x):

x = self.pqc(x)

return x

def load_mnist(dataset="training_data", digits=np.arange(2), path="./"):

import os, struct

from array import array as pyarray

download_mnist(path)

if dataset == "training_data":

fname_image = os.path.join(path, 'train-images.idx3-ubyte').replace('\\', '/')

fname_label = os.path.join(path, 'train-labels.idx1-ubyte').replace('\\', '/')

elif dataset == "testing_data":

fname_image = os.path.join(path, 't10k-images.idx3-ubyte').replace('\\', '/')

fname_label = os.path.join(path, 't10k-labels.idx1-ubyte').replace('\\', '/')

else:

raise ValueError("dataset must be 'training_data' or 'testing_data'")

flbl = open(fname_label, 'rb')

magic_nr, size = struct.unpack(">II", flbl.read(8))

lbl = pyarray("b", flbl.read())

flbl.close()

fimg = open(fname_image, 'rb')

magic_nr, size, rows, cols = struct.unpack(">IIII", fimg.read(16))

img = pyarray("B", fimg.read())

fimg.close()

ind = [k for k in range(size) if lbl[k] in digits]

N = len(ind)

images = np.zeros((N, rows, cols))

labels = np.zeros((N, 1), dtype=int)

for i in range(len(ind)):

images[i] = np.array(img[ind[i] * rows * cols: (ind[i] + 1) * rows * cols]).reshape((rows, cols))

labels[i] = lbl[ind[i]]

return images, labels

def run2():

##load dataset

x_train, y_train = load_mnist("training_data")

x_train = x_train / 255

x_test, y_test = load_mnist("testing_data")

x_test = x_test / 255

x_train = x_train.reshape([-1, 1, 28, 28])

x_test = x_test.reshape([-1, 1, 28, 28])

x_train = x_train[:100, :, :, :]

x_train = np.resize(x_train, [x_train.shape[0], 1, 2, 2])

x_test = x_test[:10, :, :, :]

x_test = np.resize(x_test, [x_test.shape[0], 1, 2, 2])

encode_qubits = 4

latent_qubits = 2

trash_qubits = encode_qubits - latent_qubits

total_qubits = 1 + trash_qubits + encode_qubits

print("model start")

model = Model(trash_qubits, total_qubits)

optimizer = Adam(model.parameters(), lr=0.005)

model.train()

F1 = open("rlt.txt", "w")

loss_list = []

loss_list_test = []

fidelity_train = []

fidelity_val = []

for epoch in range(1, 10):

running_fidelity_train = 0

running_fidelity_val = 0

print(f"epoch {epoch}")

model.train()

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 1

correct = 0

iter = 0

if epoch %5 ==1:

optimizer.lr = optimizer.lr *0.5

for x, y in data_generator(x_train, y_train, batch_size=batch_size, shuffle=True): #shuffle batch rather than data

x = x.reshape((-1, encode_qubits))

x = np.concatenate((np.zeros([batch_size, 1 + trash_qubits]), x), 1)

optimizer.zero_grad()

output = model(x)

iter += 1

np_out = np.array(output.data)

floss = fidelityLoss()

loss = floss(output)

loss_data = np.array(loss.data)

loss.backward()

running_fidelity_train += np_out[0]

optimizer._step()

full_loss += loss_data[0]

n_loss += batch_size

np_output = np.array(output.data, copy=False)

mask = np_output.argmax(1) == y.argmax(1)

correct += sum(mask)

loss_output = full_loss / n_loss

print(f"Epoch: {epoch}, Loss: {loss_output}")

loss_list.append(loss_output)

# Evaluation

model.eval()

correct = 0

full_loss = 0

n_loss = 0

n_eval = 0

batch_size = 1

for x, y in data_generator(x_test, y_test, batch_size=batch_size, shuffle=True):

x = x.reshape((-1, encode_qubits))

x = np.concatenate((np.zeros([batch_size, 1 + trash_qubits]),x),1)

output = model(x)

floss = fidelityLoss()

loss = floss(output)

loss_data = np.array(loss.data)

full_loss += loss_data[0]

running_fidelity_val += np.array(output.data)[0]

n_eval += 1

n_loss += 1

loss_output = full_loss / n_loss

print(f"Epoch: {epoch}, Loss: {loss_output}")

loss_list_test.append(loss_output)

fidelity_train.append(running_fidelity_train / 64)

fidelity_val.append(running_fidelity_val / 64)

figure_path = os.path.join(os.getcwd(), 'QAE-rate1.png')

plt.plot(loss_list, color="blue", label="train")

plt.plot(loss_list_test, color="red", label="validation")

plt.title('QAE')

plt.xlabel("Epochs")

plt.ylabel("Loss")

plt.legend(loc="upper right")

plt.savefig(figure_path)

plt.show()

F1.write(f"done\n")

F1.close()

del model

if __name__ == '__main__':

run2()

The QAE error value obtained by running the above code, the loss is 1/fidelity, tending to 1 means the fidelity is close to 1.

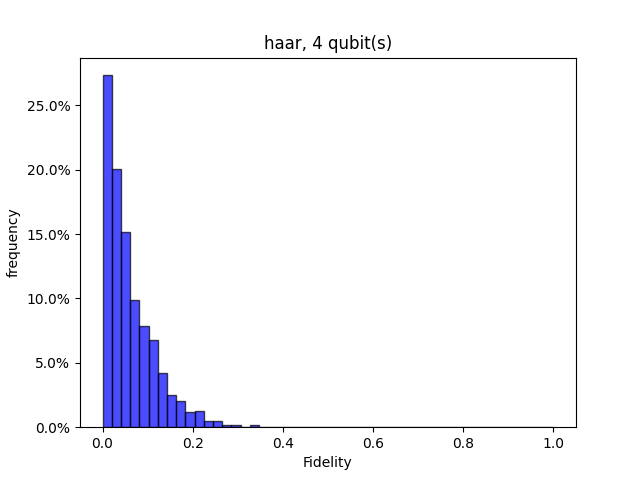

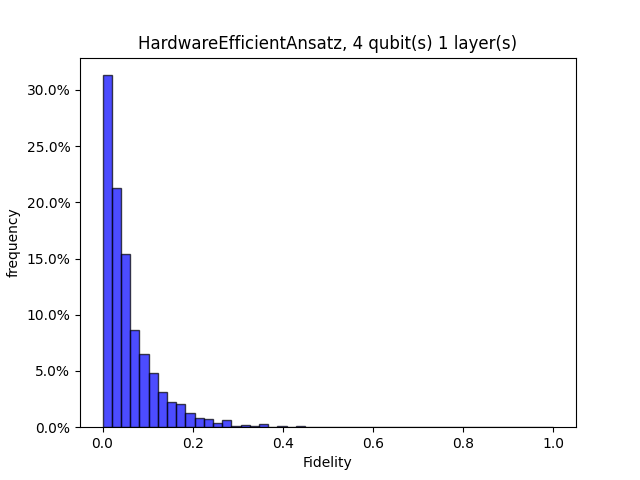

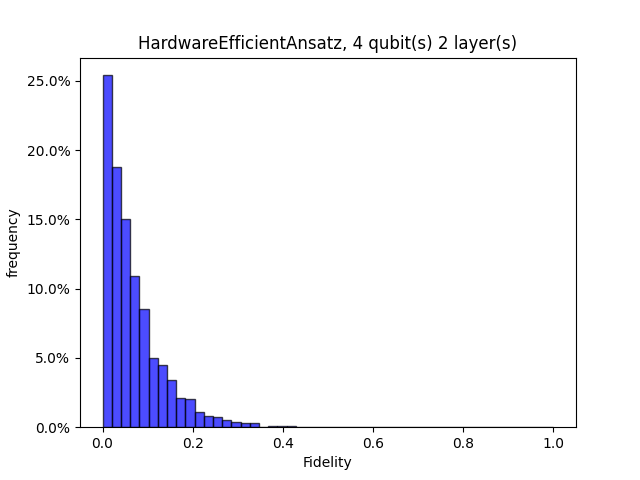

Quantum Circuits Structure Learning Demo¶

1.Quantum circuits structure learning¶

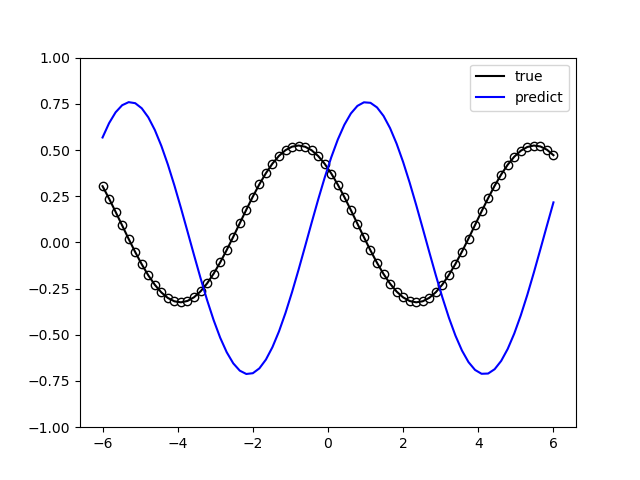

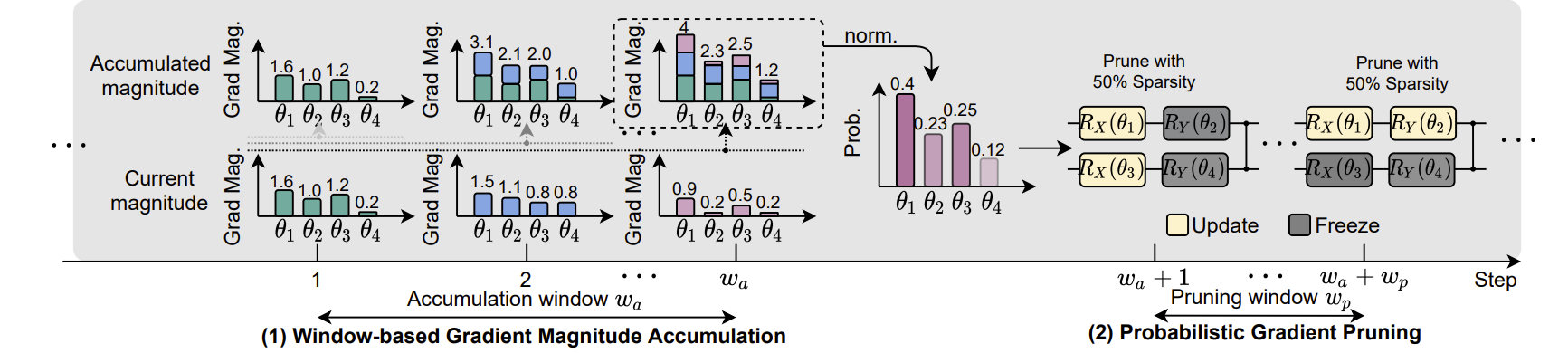

In the quantum circuit structure, the most frequently used quantum gates with parameters are RZ , RY , and RX gates, but which gate to use under what circumstances is a question worth studying. One method is random selection, but in this case It is very likely that the best results will not be achieved. The core goal of Quantum circuit structure learning task is to find the optimal combination of quantum gates with parameters. The approach here is that this set of optimal quantum logic gates should make the loss function to be the minimum.

"""

Quantum Circuits Strcture Learning Demo

"""

import sys

sys.path.insert(0,"../")

import copy

import pyqpanda as pq

from pyvqnet.tensor.tensor import QTensor

from pyvqnet.qnn.measure import expval

import numpy as np

import matplotlib.pyplot as plt

import matplotlib

try:

matplotlib.use("TkAgg")

except:

print("Can not use matplot TkAgg")

pass

machine = pq.CPUQVM()

machine.init_qvm()

nqbits = machine.qAlloc_many(2)

def gen(param, generators, qbits, circuit):

if generators == "X":

circuit.insert(pq.RX(qbits, param))

elif generators == "Y":

circuit.insert(pq.RY(qbits, param))

else:

circuit.insert(pq.RZ(qbits, param))

def circuits(params, generators, circuit):

gen(params[0], generators[0], nqbits[0], circuit)

gen(params[1], generators[1], nqbits[1], circuit)

circuit.insert(pq.CNOT(nqbits[0], nqbits[1]))

prog = pq.QProg()

prog.insert(circuit)

return prog

def ansatz1(params: QTensor, generators):

circuit = pq.QCircuit()

params = params.to_numpy()

prog = circuits(params, generators, circuit)

return expval(machine, prog, {"Z0": 1},

nqbits), expval(machine, prog, {"Y1": 1}, nqbits)

def ansatz2(params: QTensor, generators):

circuit = pq.QCircuit()

params = params.to_numpy()

prog = circuits(params, generators, circuit)

return expval(machine, prog, {"X0": 1}, nqbits)

def loss(params, generators):

z, y = ansatz1(params, generators)

x = ansatz2(params, generators)

return 0.5 * y + 0.8 * z - 0.2 * x

def rotosolve(d, params, generators, cost, M_0):

"""

rotosolve algorithm implementation

"""

params[d] = np.pi / 2.0

m0_plus = cost(QTensor(params), generators)

params[d] = -np.pi / 2.0

m0_minus = cost(QTensor(params), generators)

a = np.arctan2(2.0 * M_0 - m0_plus - m0_minus,

m0_plus - m0_minus) # returns value in (-pi,pi]

params[d] = -np.pi / 2.0 - a

if params[d] <= -np.pi:

params[d] += 2 * np.pi

return cost(QTensor(params), generators)

def optimal_theta_and_gen_helper(index, params, generators):

"""

find optimal varaibles

"""

params[index] = 0.

m0 = loss(QTensor(params), generators) #init value

for kind in ["X", "Y", "Z"]:

generators[index] = kind

params_cost = rotosolve(index, params, generators, loss, m0)

if kind == "X" or params_cost <= params_opt_cost:

params_opt_d = params[index]

params_opt_cost = params_cost

generators_opt_d = kind

return params_opt_d, generators_opt_d

def rotoselect_cycle(params: np, generators):

for index in range(params.shape[0]):

params[index], generators[index] = optimal_theta_and_gen_helper(

index, params, generators)

return params, generators

params = QTensor(np.array([0.3, 0.25]))

params = params.to_numpy()

generator = ["X", "Y"]

generators = copy.deepcopy(generator)

epoch = 20

state_save = []

for i in range(epoch):

state_save.append(loss(QTensor(params), generators))

params, generators = rotoselect_cycle(params, generators)

print("Optimal generators are: {}".format(generators))

print("Optimal params are: {}".format(params))

steps = np.arange(0, epoch)

plt.plot(steps, state_save, "o-")

plt.title("rotoselect")

plt.xlabel("cycles")

plt.ylabel("cost")

plt.yticks(np.arange(-1.25, 0.80, 0.25))

plt.tight_layout()

plt.show()

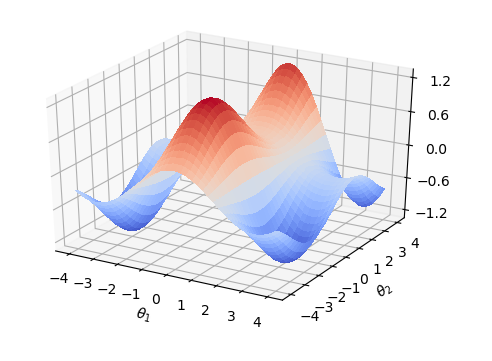

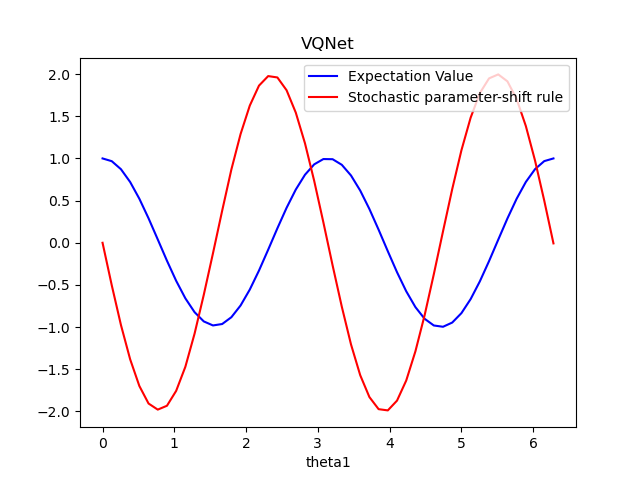

The quantum circuit structure obtained by running the above code contains \(RX\), one \(RY\)

And with the parameters in the quantum gate \(\theta_1\), \(\theta_2\) change,Loss function has different values.

Hybird Quantum Classic Nerual Network Demo¶

1.Hybrid Quantum Classic Neural Network Model¶

Machine learning (ML) has become a successful interdisciplinary field that aims to extract generalizable information from data mathematically. Quantum machine learning seeks to use the principles of quantum mechanics to enhance machine learning, and vice versa. Whether your goal is to enhance classical ML algorithms by outsourcing difficult calculations to quantum computers, or use classical ML architectures to optimize quantum algorithms-both fall into the category of quantum machine learning (QML). In this chapter, we will explore how to partially quantify classical neural networks to create hybrid quantum classical neural networks. Quantum circuits are composed of quantum logic gates, and the quantum calculations implemented by these logic gates are proved to be differentiable by the paper Quantum Circuit Learning. Therefore, researchers try to put quantum circuits and classical neural network modules together for training on hybrid quantum classical machine learning tasks. We will write a simple example to implement a neural network model training task using VQNet. The purpose of this example is to demonstrate the simplicity of VQNet and encourage ML practitioners to explore the possibilities of quantum computing.

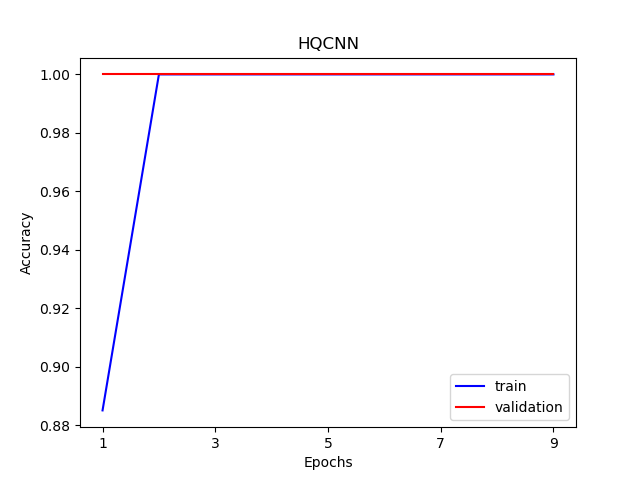

Data Preparation¶

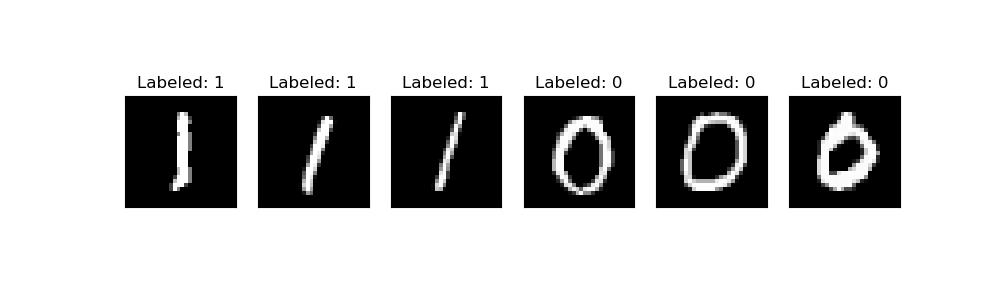

We will use MNIST datasets, the most basic neural network handwritten digit database as the classification data. We first load MNIST and filter data samples containing 0 and 1. These samples are divided into training data training_data and testing data testing_data, each of which has a dimension of 1*784.

import time

import os

import struct

import gzip

from pyvqnet.nn.module import Module

from pyvqnet.nn.linear import Linear

from pyvqnet.nn.conv import Conv2D

from pyvqnet.nn import activation as F

from pyvqnet.nn.pooling import MaxPool2D

from pyvqnet.nn.loss import CategoricalCrossEntropy

from pyvqnet.optim.adam import Adam

from pyvqnet.data.data import data_generator

from pyvqnet.tensor import tensor

from pyvqnet.tensor import QTensor

import pyqpanda as pq

import numpy as np

import matplotlib.pyplot as plt

import matplotlib

try:

matplotlib.use("TkAgg")

except:

print("Can not use matplot TkAgg")

pass

try:

import urllib.request

except ImportError:

raise ImportError("You should use Python 3.x")

url_base = 'https://ossci-datasets.s3.amazonaws.com/mnist/'

key_file = {

'train_img':'train-images-idx3-ubyte.gz',

'train_label':'train-labels-idx1-ubyte.gz',

'test_img':'t10k-images-idx3-ubyte.gz',

'test_label':'t10k-labels-idx1-ubyte.gz'

}

def _download(dataset_dir,file_name):

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as f:

file_path_ungz = file_path[:-3].replace('\\', '/')

if not os.path.exists(file_path_ungz):

open(file_path_ungz,"wb").write(f.read())

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as f:

file_path_ungz = file_path[:-3].replace('\\', '/')

file_path_ungz = file_path_ungz.replace('-idx', '.idx')

if not os.path.exists(file_path_ungz):

open(file_path_ungz,"wb").write(f.read())

print("Done")

def download_mnist(dataset_dir):

for v in key_file.values():

_download(dataset_dir,v)

def load_mnist(dataset="training_data", digits=np.arange(2), path="./"):

import os, struct

from array import array as pyarray

download_mnist(path)

if dataset == "training_data":

fname_image = os.path.join(path, 'train-images.idx3-ubyte').replace('\\', '/')

fname_label = os.path.join(path, 'train-labels.idx1-ubyte').replace('\\', '/')

elif dataset == "testing_data":

fname_image = os.path.join(path, 't10k-images.idx3-ubyte').replace('\\', '/')

fname_label = os.path.join(path, 't10k-labels.idx1-ubyte').replace('\\', '/')

else:

raise ValueError("dataset must be 'training_data' or 'testing_data'")

flbl = open(fname_label, 'rb')

magic_nr, size = struct.unpack(">II", flbl.read(8))

lbl = pyarray("b", flbl.read())

flbl.close()

fimg = open(fname_image, 'rb')

magic_nr, size, rows, cols = struct.unpack(">IIII", fimg.read(16))

img = pyarray("B", fimg.read())

fimg.close()

ind = [k for k in range(size) if lbl[k] in digits]

N = len(ind)

images = np.zeros((N, rows, cols))

labels = np.zeros((N, 1), dtype=int)

for i in range(len(ind)):

images[i] = np.array(img[ind[i] * rows * cols: (ind[i] + 1) * rows * cols]).reshape((rows, cols))

labels[i] = lbl[ind[i]]

return images, labels

def data_select(train_num, test_num):

x_train, y_train = load_mnist("training_data")

x_test, y_test = load_mnist("testing_data")

# Train Leaving only labels 0 and 1

idx_train = np.append(np.where(y_train == 0)[0][:train_num],

np.where(y_train == 1)[0][:train_num])

x_train = x_train[idx_train]

y_train = y_train[idx_train]

x_train = x_train / 255

y_train = np.eye(2)[y_train].reshape(-1, 2)

# Test Leaving only labels 0 and 1

idx_test = np.append(np.where(y_test == 0)[0][:test_num],

np.where(y_test == 1)[0][:test_num])

x_test = x_test[idx_test]

y_test = y_test[idx_test]

x_test = x_test / 255

y_test = np.eye(2)[y_test].reshape(-1, 2)

return x_train, y_train, x_test, y_test

n_samples_show = 6

x_train, y_train, x_test, y_test = data_select(100, 50)

fig, axes = plt.subplots(nrows=1, ncols=n_samples_show, figsize=(10, 3))

for img ,targets in zip(x_test,y_test):

if n_samples_show <= 3:

break

if targets[0] == 1:

axes[n_samples_show - 1].set_title("Labeled: 0")

axes[n_samples_show - 1].imshow(img.squeeze(), cmap='gray')

axes[n_samples_show - 1].set_xticks([])

axes[n_samples_show - 1].set_yticks([])

n_samples_show -= 1

for img ,targets in zip(x_test,y_test):

if n_samples_show <= 0:

break

if targets[0] == 0:

axes[n_samples_show - 1].set_title("Labeled: 1")

axes[n_samples_show - 1].imshow(img.squeeze(), cmap='gray')

axes[n_samples_show - 1].set_xticks([])

axes[n_samples_show - 1].set_yticks([])

n_samples_show -= 1

plt.show()

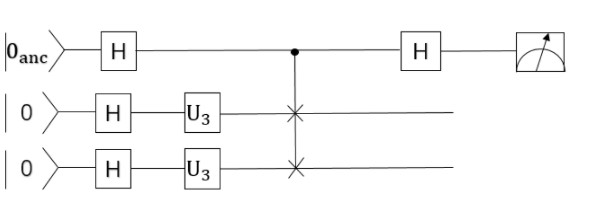

Construct Quantum Circuits¶

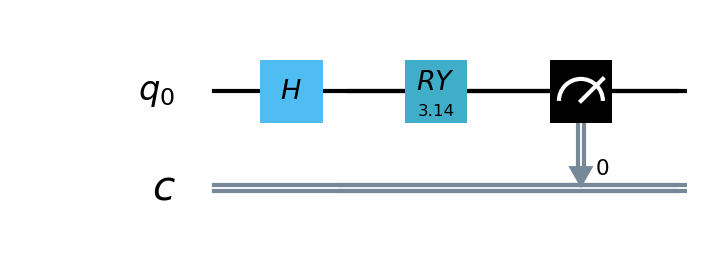

In this example, we use the pyQPanda2 , A simple quantum circuit of 1 qubit is defined. The circuit takes the output of the classical neural network layer as input,encodes quantum data through H , RY quantum logic gates, and calculates the expected value of Hamiltonian in the z direction as output.

from pyqpanda import *

import pyqpanda as pq

import numpy as np

def circuit(weights):

num_qubits = 1

#Use pyQPanda2 to create a simulator

machine = pq.CPUQVM()

machine.init_qvm()

#Use pyQPanda2 to alloc qubits

qubits = machine.qAlloc_many(num_qubits)

#Use pyQPanda2 to alloc classic bits

cbits = machine.cAlloc_many(num_qubits)

#Construct circuits

circuit = pq.QCircuit()

circuit.insert(pq.H(qubits[0]))

circuit.insert(pq.RY(qubits[0], weights[0]))

#Construct quantum program

prog = pq.QProg()

prog.insert(circuit)

#Defines measurement

prog << measure_all(qubits, cbits)

#run quantum with quantum measurements

result = machine.run_with_configuration(prog, cbits, 100)

counts = np.array(list(result.values()))

states = np.array(list(result.keys())).astype(float)

probabilities = counts / 100

expectation = np.sum(states * probabilities)

return expectation

Create Hybird Model¶

Since quantum circuits can perform automatic differentiation calculations together with classical neural networks,

Therefore, we can use VQNet’s convolutional layer Conv2D , pooling layer MaxPool2D , fully connected layer Linear and

the quantum circuit to build model just now.

The definition of the Net and Hybrid classes inherit from the VQNet automatic differentiation module Module

and the definition of the forward calculation is defined in forward function forward(),

An automatic differentiation Model of convolution, quantum encoding, and measurement of the MNIST data is constructed to obtain the final features required for the classification task.

#Quantum computing layer front pass and the definition of gradient calculation function, which need to be inherited from the abstract class Module

from pyvqnet.native.backprop_utils import AutoGradNode

class Hybrid(Module):

""" Hybrid quantum - Quantum layer definition """

def __init__(self, shift):

super(Hybrid, self).__init__()

self.shift = shift

def forward(self, input):

self.input = input

expectation_z = circuit(np.array(input.data))

result = [[expectation_z]]

requires_grad = input.requires_grad

def _backward(g, input):

""" Backward pass computation """

input_list = np.array(input.data)

shift_right = input_list + np.ones(input_list.shape) * self.shift

shift_left = input_list - np.ones(input_list.shape) * self.shift

gradients = []

for i in range(len(input_list)):

expectation_right = circuit(shift_right[i])

expectation_left = circuit(shift_left[i])

gradient = expectation_right - expectation_left

gradients.append(gradient)

gradients = np.array([gradients]).T

return gradients * np.array(g)

nodes = []

if input.requires_grad:

nodes.append(AutoGradNode(tensor=input, df=lambda g: _backward(g, input)))

return QTensor(data=result, requires_grad=requires_grad, nodes=nodes)

#Model definition

class Net(Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = Conv2D(input_channels=1, output_channels=6, kernel_size=(5, 5), stride=(1, 1), padding="valid")

self.maxpool1 = MaxPool2D([2, 2], [2, 2], padding="valid")

self.conv2 = Conv2D(input_channels=6, output_channels=16, kernel_size=(5, 5), stride=(1, 1), padding="valid")

self.maxpool2 = MaxPool2D([2, 2], [2, 2], padding="valid")

self.fc1 = Linear(input_channels=256, output_channels=64)

self.fc2 = Linear(input_channels=64, output_channels=1)

self.hybrid = Hybrid(np.pi / 2)

self.fc3 = Linear(input_channels=1, output_channels=2)

def forward(self, x):

x = F.ReLu()(self.conv1(x)) # 1 6 24 24

x = self.maxpool1(x)

x = F.ReLu()(self.conv2(x)) # 1 16 8 8

x = self.maxpool2(x)

x = tensor.flatten(x, 1) # 1 256

x = F.ReLu()(self.fc1(x)) # 1 64

x = self.fc2(x) # 1 1

x = self.hybrid(x)

x = self.fc3(x)

return x

Training and testing¶

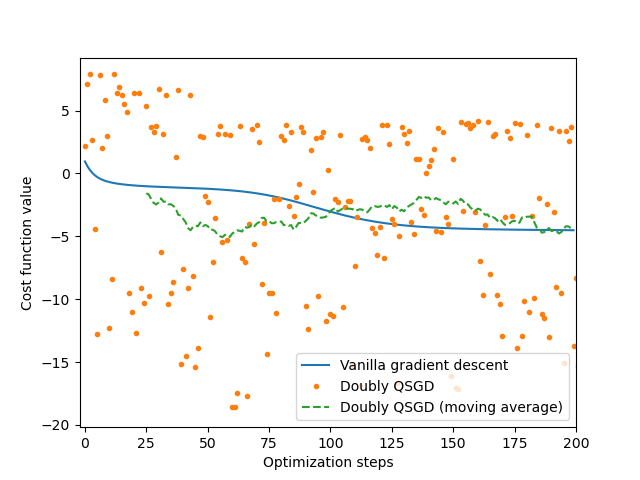

For the hybrid neural network model as shown in the figure below, we calculate the loss function by feeding data into the model iteratively, and VQNet will calculate the gradient of each parameter in the backward calculation automatically, and use the optimizer to optimize the parameters until the number of iterations meets the preset value.

x_train, y_train, x_test, y_test = data_select(1000, 100)

#Create a model

model = Net()

#Use adam optimizer

optimizer = Adam(model.parameters(), lr=0.005)

#Use cross entropy loss

loss_func = CategoricalCrossEntropy()

#train epoches

epochs = 10

train_loss_list = []

val_loss_list = []

train_acc_list =[]

val_acc_list = []

for epoch in range(1, epochs):

total_loss = []

model.train()

batch_size = 1

correct = 0

n_train = 0

for x, y in data_generator(x_train, y_train, batch_size=1, shuffle=True):

x = x.reshape(-1, 1, 28, 28)

optimizer.zero_grad()

output = model(x)

loss = loss_func(y, output)

loss_np = np.array(loss.data)

np_output = np.array(output.data, copy=False)

mask = (np_output.argmax(1) == y.argmax(1))

correct += np.sum(np.array(mask))

n_train += batch_size

loss.backward()

optimizer._step()

total_loss.append(loss_np)

train_loss_list.append(np.sum(total_loss) / len(total_loss))

train_acc_list.append(np.sum(correct) / n_train)

print("{:.0f} loss is : {:.10f}".format(epoch, train_loss_list[-1]))

model.eval()

correct = 0

n_eval = 0

for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):

x = x.reshape(-1, 1, 28, 28)

output = model(x)

loss = loss_func(y, output)

loss_np = np.array(loss.data)

np_output = np.array(output.data, copy=False)

mask = (np_output.argmax(1) == y.argmax(1))

correct += np.sum(np.array(mask))

n_eval += 1

total_loss.append(loss_np)

print(f"Eval Accuracy: {correct / n_eval}")

val_loss_list.append(np.sum(total_loss) / len(total_loss))

val_acc_list.append(np.sum(correct) / n_eval)

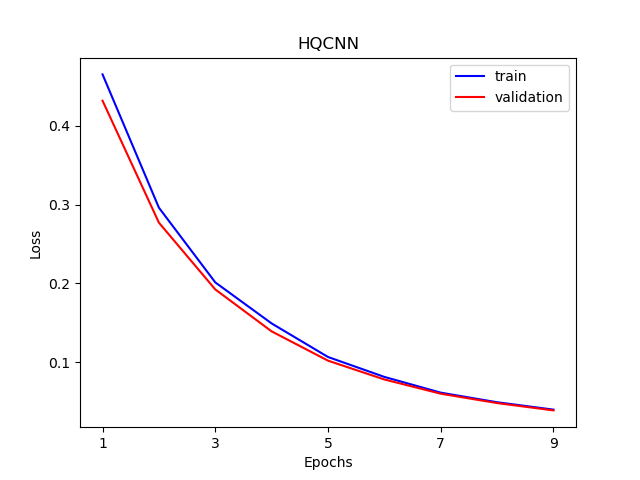

Visualization¶

The visualization curve of data loss function and accuracy on train and test data.

import os

plt.figure()

xrange = range(1,len(train_loss_list)+1)

figure_path = os.path.join(os.getcwd(), 'HQCNN LOSS.png')

plt.plot(xrange,train_loss_list, color="blue", label="train")

plt.plot(xrange,val_loss_list, color="red", label="validation")

plt.title('HQCNN')

plt.xlabel("Epochs")

plt.ylabel("Loss")

plt.xticks(np.arange(1, epochs +1,step = 2))

plt.legend(loc="upper right")

plt.savefig(figure_path)

plt.show()

plt.figure()

figure_path = os.path.join(os.getcwd(), 'HQCNN Accuracy.png')

plt.plot(xrange,train_acc_list, color="blue", label="train")

plt.plot(xrange,val_acc_list, color="red", label="validation")

plt.title('HQCNN')

plt.xlabel("Epochs")

plt.ylabel("Accuracy")

plt.xticks(np.arange(1, epochs +1,step = 2))

plt.legend(loc="lower right")

plt.savefig(figure_path)

plt.show()

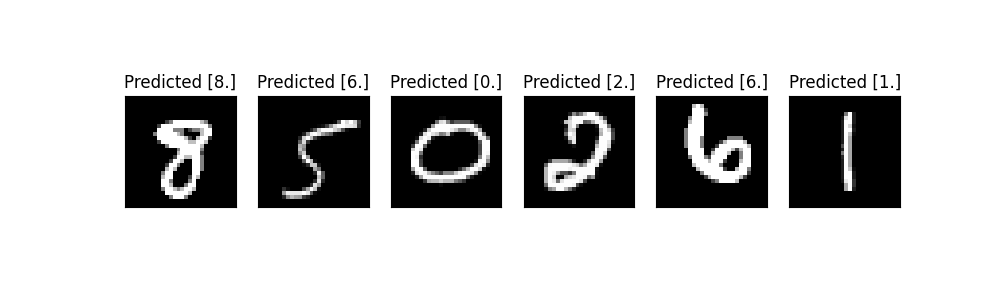

n_samples_show = 6

count = 0

fig, axes = plt.subplots(nrows=1, ncols=n_samples_show, figsize=(10, 3))

model.eval()

for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):

if count == n_samples_show:

break

x = x.reshape(-1, 1, 28, 28)

output = model(x)

pred = QTensor.argmax(output, [1],False)

axes[count].imshow(x[0].squeeze(), cmap='gray')

axes[count].set_xticks([])

axes[count].set_yticks([])

axes[count].set_title('Predicted {}'.format(np.array(pred.data)))

count += 1

plt.show()

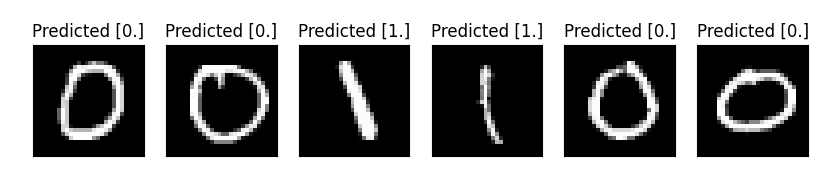

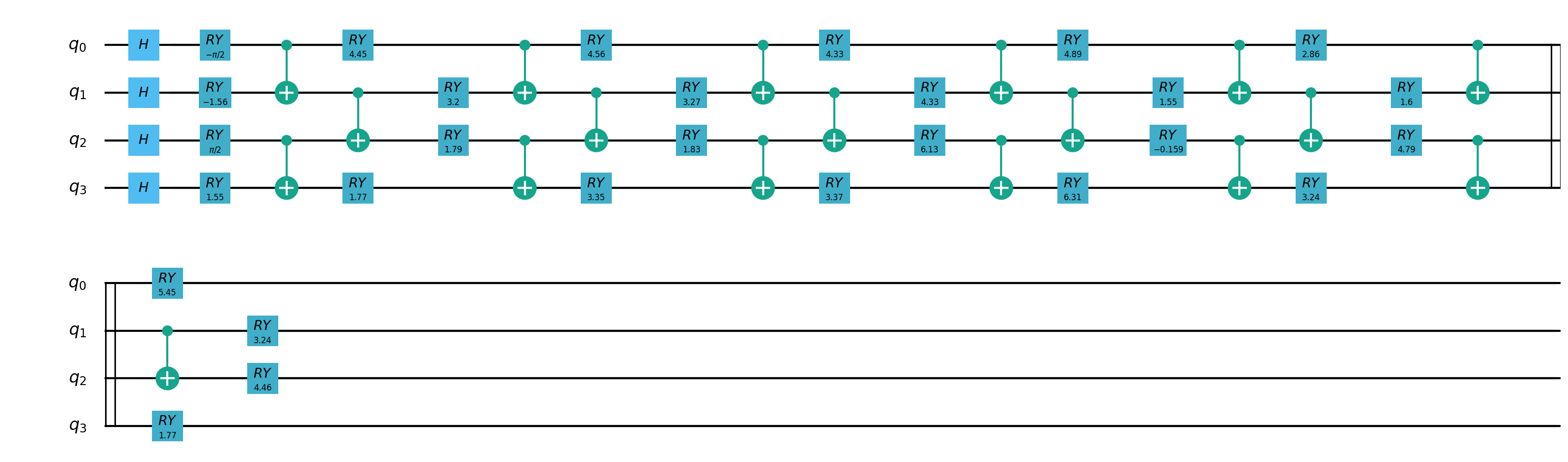

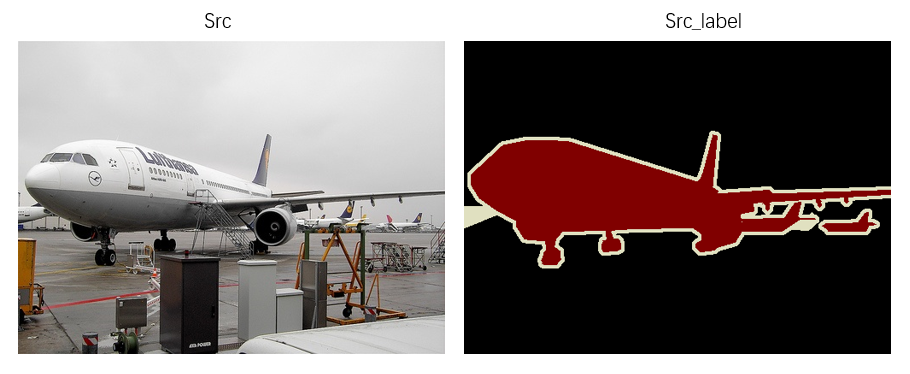

2.Hybrid quantum classical transfer learning model¶

We apply a machine learning method called transfer learning to image classifier based on hybrid classical quantum network. We will write a simple example of integrating pyQPanda2 with VQNet.Transfer learning is based on general intuition, that is, if the pre-trained network is good at solving a given problem, it can also be used to solve a different but related problem with only some additional training.

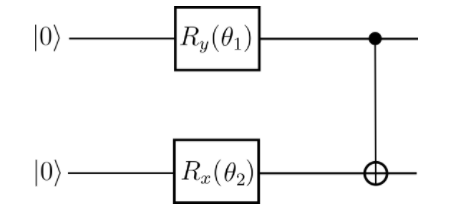

Quantum partial circuit diagram are illustrated below:

"""

Quantum Classic Nerual Network Transfer Learning demo

"""

import os

import sys

sys.path.insert(0,'../')

import numpy as np

import matplotlib.pyplot as plt

from pyvqnet.nn.module import Module

from pyvqnet.nn.linear import Linear

from pyvqnet.nn.conv import Conv2D

from pyvqnet.utils.storage import load_parameters, save_parameters

from pyvqnet.nn import activation as F

from pyvqnet.nn.pooling import MaxPool2D

from pyvqnet.nn.batch_norm import BatchNorm2d

from pyvqnet.nn.loss import SoftmaxCrossEntropy

from pyvqnet.optim.sgd import SGD

from pyvqnet.optim.adam import Adam

from pyvqnet.data.data import data_generator

from pyvqnet.tensor import tensor

from pyvqnet.tensor.tensor import QTensor

import pyqpanda as pq

from pyqpanda import *

import matplotlib

from pyvqnet.nn.module import *

from pyvqnet.utils.initializer import *

from pyvqnet.qnn.quantumlayer import QuantumLayer

try:

matplotlib.use('TkAgg')

except:

pass

try:

import urllib.request

except ImportError:

raise ImportError('You should use Python 3.x')

import os.path

import gzip

url_base = 'https://ossci-datasets.s3.amazonaws.com/mnist/'

key_file = {

'train_img':'train-images-idx3-ubyte.gz',

'train_label':'train-labels-idx1-ubyte.gz',

'test_img':'t10k-images-idx3-ubyte.gz',

'test_label':'t10k-labels-idx1-ubyte.gz'

}

def _download(dataset_dir,file_name):

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as f:

file_path_ungz = file_path[:-3].replace('\\', '/')

if not os.path.exists(file_path_ungz):

open(file_path_ungz,"wb").write(f.read())

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

if os.path.exists(file_path):

with gzip.GzipFile(file_path) as f:

file_path_ungz = file_path[:-3].replace('\\', '/')

file_path_ungz = file_path_ungz.replace('-idx', '.idx')

if not os.path.exists(file_path_ungz):

open(file_path_ungz,"wb").write(f.read())

print("Done")

def download_mnist(dataset_dir):

for v in key_file.values():

_download(dataset_dir,v)

if not os.path.exists("./result"):

os.makedirs("./result")

else:

pass

# classical CNN

class CNN(Module):

def __init__(self):

super(CNN, self).__init__()

self.conv1 = Conv2D(input_channels=1, output_channels=16, kernel_size=(3, 3), stride=(1, 1), padding="valid")

self.BatchNorm2d1 = BatchNorm2d(16)

self.Relu1 = F.ReLu()

self.conv2 = Conv2D(input_channels=16, output_channels=32, kernel_size=(3, 3), stride=(1, 1), padding="valid")

self.BatchNorm2d2 = BatchNorm2d(32)

self.Relu2 = F.ReLu()

self.maxpool2 = MaxPool2D([2, 2], [2, 2], padding="valid")

self.conv3 = Conv2D(input_channels=32, output_channels=64, kernel_size=(3, 3), stride=(1, 1), padding="valid")

self.BatchNorm2d3 = BatchNorm2d(64)

self.Relu3 = F.ReLu()

self.conv4 = Conv2D(input_channels=64, output_channels=128, kernel_size=(3, 3), stride=(1, 1), padding="valid")

self.BatchNorm2d4 = BatchNorm2d(128)

self.Relu4 = F.ReLu()

self.maxpool4 = MaxPool2D([2, 2], [2, 2], padding="valid")

self.fc1 = Linear(input_channels=128 * 4 * 4, output_channels=1024)

self.fc2 = Linear(input_channels=1024, output_channels=128)

self.fc3 = Linear(input_channels=128, output_channels=10)

def forward(self, x):

x = self.Relu1(self.conv1(x))

x = self.maxpool2(self.Relu2(self.conv2(x)))

x = self.Relu3(self.conv3(x))

x = self.maxpool4(self.Relu4(self.conv4(x)))

x = tensor.flatten(x, 1)

x = F.ReLu()(self.fc1(x)) # 1 64

x = F.ReLu()(self.fc2(x)) # 1 64

x = self.fc3(x) # 1 1

return x

def load_mnist(dataset="training_data", digits=np.arange(2), path="./"):

import os, struct

from array import array as pyarray

download_mnist(path)

if dataset == "training_data":

fname_image = os.path.join(path, 'train-images.idx3-ubyte').replace('\\', '/')

fname_label = os.path.join(path, 'train-labels.idx1-ubyte').replace('\\', '/')

elif dataset == "testing_data":

fname_image = os.path.join(path, 't10k-images.idx3-ubyte').replace('\\', '/')

fname_label = os.path.join(path, 't10k-labels.idx1-ubyte').replace('\\', '/')

else:

raise ValueError("dataset must be 'training_data' or 'testing_data'")

flbl = open(fname_label, 'rb')

magic_nr, size = struct.unpack(">II", flbl.read(8))

lbl = pyarray("b", flbl.read())

flbl.close()

fimg = open(fname_image, 'rb')

magic_nr, size, rows, cols = struct.unpack(">IIII", fimg.read(16))

img = pyarray("B", fimg.read())

fimg.close()

ind = [k for k in range(size) if lbl[k] in digits]

N = len(ind)

images = np.zeros((N, rows, cols))

labels = np.zeros((N, 1), dtype=int)

for i in range(len(ind)):

images[i] = np.array(img[ind[i] * rows * cols: (ind[i] + 1) * rows * cols]).reshape((rows, cols))

labels[i] = lbl[ind[i]]

return images, labels

"""

to get cnn model parameters for transfer learning

"""

train_size = 10000

eval_size = 1000

EPOCHES = 100

def classcal_cnn_model_making():

# load train data

x_train, y_train = load_mnist("training_data", digits=np.arange(10))

x_test, y_test = load_mnist("testing_data", digits=np.arange(10))

x_train = x_train[:train_size]

y_train = y_train[:train_size]

x_test = x_test[:eval_size]

y_test = y_test[:eval_size]

x_train = x_train / 255

x_test = x_test / 255

y_train = np.eye(10)[y_train].reshape(-1, 10)

y_test = np.eye(10)[y_test].reshape(-1, 10)

model = CNN()

optimizer = SGD(model.parameters(), lr=0.005)

loss_func = SoftmaxCrossEntropy()

epochs = EPOCHES

loss_list = []

model.train()

SAVE_FLAG = True

temp_loss = 0

for epoch in range(1, epochs):

total_loss = []

for x, y in data_generator(x_train, y_train, batch_size=4, shuffle=True):

x = x.reshape(-1, 1, 28, 28)

optimizer.zero_grad()

# Forward pass

output = model(x)

# Calculating loss

loss = loss_func(y, output) # target output

loss_np = np.array(loss.data)

# Backward pass

loss.backward()

# Optimize the weights

optimizer._step()

total_loss.append(loss_np)

loss_list.append(np.sum(total_loss) / len(total_loss))

print("{:.0f} loss is : {:.10f}".format(epoch, loss_list[-1]))

if SAVE_FLAG:

temp_loss = loss_list[-1]

save_parameters(model.state_dict(), "./result/QCNN_TL_1.model")

SAVE_FLAG = False

else:

if temp_loss > loss_list[-1]:

temp_loss = loss_list[-1]

save_parameters(model.state_dict(), "./result/QCNN_TL_1.model")

model.eval()

correct = 0

n_eval = 0

for x, y in data_generator(x_test, y_test, batch_size=4, shuffle=True):

x = x.reshape(-1, 1, 28, 28)

output = model(x)

loss = loss_func(y, output)

np_output = np.array(output.data, copy=False)

mask = (np_output.argmax(1) == y.argmax(1))

correct += np.sum(np.array(mask))

n_eval += 1

print(f"Eval Accuracy: {correct / n_eval}")

n_samples_show = 6

count = 0

fig, axes = plt.subplots(nrows=1, ncols=n_samples_show, figsize=(10, 3))

model.eval()

for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):

if count == n_samples_show:

break

x = x.reshape(-1, 1, 28, 28)

output = model(x)

pred = QTensor.argmax(output, [1],False)

axes[count].imshow(x[0].squeeze(), cmap='gray')

axes[count].set_xticks([])

axes[count].set_yticks([])

axes[count].set_title('Predicted {}'.format(np.array(pred.data)))

count += 1

plt.show()

def classical_cnn_TransferLearning_predict():

x_test, y_test = load_mnist("testing_data", digits=np.arange(10))

x_test = x_test[:eval_size]

y_test = y_test[:eval_size]

x_test = x_test / 255

y_test = np.eye(10)[y_test].reshape(-1, 10)

model = CNN()

model_parameter = load_parameters("./result/QCNN_TL_1.model")

model.load_state_dict(model_parameter)

model.eval()

correct = 0

n_eval = 0

for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):

x = x.reshape(-1, 1, 28, 28)

output = model(x)

np_output = np.array(output.data, copy=False)

mask = (np_output.argmax(1) == y.argmax(1))

correct += np.sum(np.array(mask))

n_eval += 1

print(f"Eval Accuracy: {correct / n_eval}")

n_samples_show = 6

count = 0

fig, axes = plt.subplots(nrows=1, ncols=n_samples_show, figsize=(10, 3))

model.eval()

for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):

if count == n_samples_show:

break

x = x.reshape(-1, 1, 28, 28)

output = model(x)

pred = QTensor.argmax(output, [1],False)

axes[count].imshow(x[0].squeeze(), cmap='gray')

axes[count].set_xticks([])

axes[count].set_yticks([])

axes[count].set_title('Predicted {}'.format(np.array(pred.data)))

count += 1

plt.show()

def quantum_cnn_TransferLearning():

n_qubits = 4 # Number of qubits

q_depth = 6 # Depth of the quantum circuit (number of variational layers)

def Q_H_layer(qubits, nqubits):

"""Layer of single-qubit Hadamard gates.

"""

circuit = pq.QCircuit()

for idx in range(nqubits):

circuit.insert(pq.H(qubits[idx]))

return circuit

def Q_RY_layer(qubits, w):

"""Layer of parametrized qubit rotations around the y axis.

"""

circuit = pq.QCircuit()

for idx, element in enumerate(w):

circuit.insert(pq.RY(qubits[idx], element))

return circuit

def Q_entangling_layer(qubits, nqubits):

"""Layer of CNOTs followed by another shifted layer of CNOT.

"""

# In other words it should apply something like :

# CNOT CNOT CNOT CNOT... CNOT

# CNOT CNOT CNOT... CNOT

circuit = pq.QCircuit()

for i in range(0, nqubits - 1, 2): # Loop over even indices: i=0,2,...N-2

circuit.insert(pq.CNOT(qubits[i], qubits[i + 1]))

for i in range(1, nqubits - 1, 2): # Loop over odd indices: i=1,3,...N-3

circuit.insert(pq.CNOT(qubits[i], qubits[i + 1]))

return circuit

def Q_quantum_net(q_input_features, q_weights_flat, qubits, cubits, machine):

"""

The variational quantum circuit.

"""

machine = pq.CPUQVM()

machine.init_qvm()

qubits = machine.qAlloc_many(n_qubits)

circuit = pq.QCircuit()

# Reshape weights

q_weights = q_weights_flat.reshape([q_depth, n_qubits])

# Start from state |+> , unbiased w.r.t. |0> and |1>

circuit.insert(Q_H_layer(qubits, n_qubits))

# Embed features in the quantum node

circuit.insert(Q_RY_layer(qubits, q_input_features))

# Sequence of trainable variational layers

for k in range(q_depth):

circuit.insert(Q_entangling_layer(qubits, n_qubits))

circuit.insert(Q_RY_layer(qubits, q_weights[k]))

# Expectation values in the Z basis

prog = pq.QProg()

prog.insert(circuit)

exp_vals = []

for position in range(n_qubits):

pauli_str = "Z" + str(position)

pauli_map = pq.PauliOperator(pauli_str, 1)

hamiltion = pauli_map.toHamiltonian(True)

exp = machine.get_expectation(prog, hamiltion, qubits)

exp_vals.append(exp)

return exp_vals

class Q_DressedQuantumNet(Module):

def __init__(self):

"""

Definition of the *dressed* layout.

"""

super().__init__()

self.pre_net = Linear(128, n_qubits)

self.post_net = Linear(n_qubits, 10)

self.temp_Q = QuantumLayer(Q_quantum_net, q_depth * n_qubits, "cpu", n_qubits, n_qubits)

def forward(self, input_features):

"""

Defining how tensors are supposed to move through the *dressed* quantum

net.

"""

# obtain the input features for the quantum circuit

# by reducing the feature dimension from 512 to 4

pre_out = self.pre_net(input_features)

q_in = tensor.tanh(pre_out) * np.pi / 2.0

q_out_elem = self.temp_Q(q_in)

result = q_out_elem

# return the two-dimensional prediction from the postprocessing layer

return self.post_net(result)

x_train, y_train = load_mnist("training_data", digits=np.arange(10))

x_test, y_test = load_mnist("testing_data", digits=np.arange(10))

x_train = x_train[:train_size]

y_train = y_train[:train_size]

x_test = x_test[:eval_size]

y_test = y_test[:eval_size]

x_train = x_train / 255

x_test = x_test / 255

y_train = np.eye(10)[y_train].reshape(-1, 10)

y_test = np.eye(10)[y_test].reshape(-1, 10)

model = CNN()

model_param = load_parameters("./result/QCNN_TL_1.model")

model.load_state_dict(model_param)

loss_func = SoftmaxCrossEntropy()

epochs = EPOCHES

loss_list = []

eval_losses = []

model_hybrid = model

print(model_hybrid)

for param in model_hybrid.parameters():

param.requires_grad = False

model_hybrid.fc3 = Q_DressedQuantumNet()

optimizer_hybrid = Adam(model_hybrid.fc3.parameters(), lr=0.001)

model_hybrid.train()

SAVE_FLAG = True

temp_loss = 0

for epoch in range(1, epochs):

total_loss = []

for x, y in data_generator(x_train, y_train, batch_size=4, shuffle=True):

x = x.reshape(-1, 1, 28, 28)

optimizer_hybrid.zero_grad()

# Forward pass

output = model_hybrid(x)

loss = loss_func(y, output) # target output

loss_np = np.array(loss.data)

# Backward pass

loss.backward()

# Optimize the weights

optimizer_hybrid._step()

total_loss.append(loss_np)

loss_list.append(np.sum(total_loss) / len(total_loss))

print("{:.0f} loss is : {:.10f}".format(epoch, loss_list[-1]))

if SAVE_FLAG:

temp_loss = loss_list[-1]

save_parameters(model_hybrid.fc3.state_dict(), "./result/QCNN_TL_FC3.model")

save_parameters(model_hybrid.state_dict(), "./result/QCNN_TL_ALL.model")

SAVE_FLAG = False

else:

if temp_loss > loss_list[-1]:

temp_loss = loss_list[-1]

save_parameters(model_hybrid.fc3.state_dict(), "./result/QCNN_TL_FC3.model")

save_parameters(model_hybrid.state_dict(), "./result/QCNN_TL_ALL.model")

correct = 0

n_eval = 0

loss_temp =[]

for x1, y1 in data_generator(x_test, y_test, batch_size=4, shuffle=True):

x1 = x1.reshape(-1, 1, 28, 28)

output = model_hybrid(x1)

loss = loss_func(y1, output)

np_loss = np.array(loss.data)

np_output = np.array(output.data, copy=False)

mask = (np_output.argmax(1) == y1.argmax(1))

correct += np.sum(np.array(mask))

n_eval += 1

loss_temp.append(np_loss)

eval_losses.append(np.sum(loss_temp) / n_eval)

print("{:.0f} eval loss is : {:.10f}".format(epoch, eval_losses[-1]))

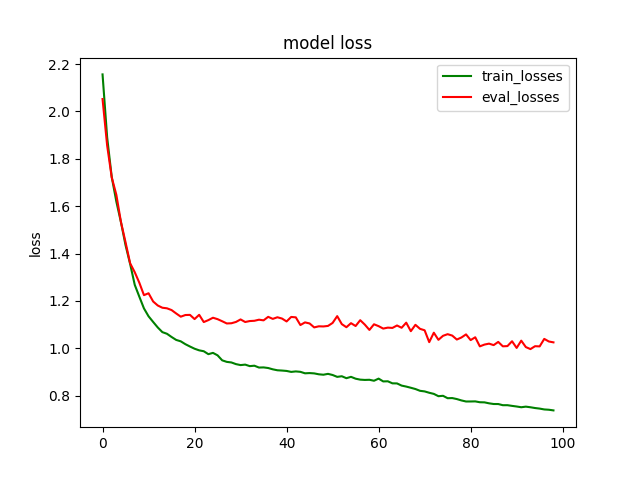

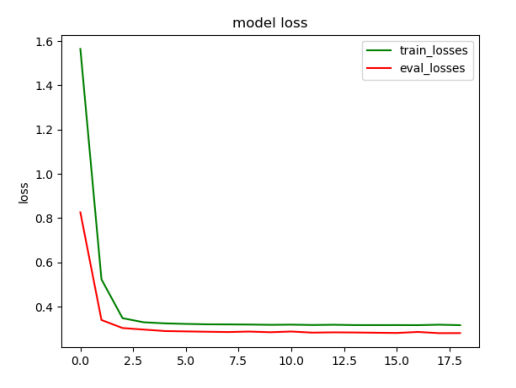

plt.title('model loss')

plt.plot(loss_list, color='green', label='train_losses')

plt.plot(eval_losses, color='red', label='eval_losses')

plt.ylabel('loss')

plt.legend(["train_losses", "eval_losses"])

plt.savefig("qcnn_transfer_learning_classical")

plt.show()

plt.close()

n_samples_show = 6

count = 0

fig, axes = plt.subplots(nrows=1, ncols=n_samples_show, figsize=(10, 3))

model_hybrid.eval()

for x, y in data_generator(x_test, y_test, batch_size=1, shuffle=True):

if count == n_samples_show:

break

x = x.reshape(-1, 1, 28, 28)

output = model_hybrid(x)

pred = QTensor.argmax(output, [1],False)

axes[count].imshow(x[0].squeeze(), cmap='gray')

axes[count].set_xticks([])

axes[count].set_yticks([])

axes[count].set_title('Predicted {}'.format(np.array(pred.data)))

count += 1

plt.show()

def quantum_cnn_TransferLearning_predict():

n_qubits = 4 # Number of qubits

q_depth = 6 # Depth of the quantum circuit (number of variational layers)

def Q_H_layer(qubits, nqubits):

"""Layer of single-qubit Hadamard gates.

"""

circuit = pq.QCircuit()

for idx in range(nqubits):

circuit.insert(pq.H(qubits[idx]))

return circuit

def Q_RY_layer(qubits, w):

"""Layer of parametrized qubit rotations around the y axis.

"""

circuit = pq.QCircuit()

for idx, element in enumerate(w):

circuit.insert(pq.RY(qubits[idx], element))

return circuit

def Q_entangling_layer(qubits, nqubits):

"""Layer of CNOTs followed by another shifted layer of CNOT.

"""

# In other words it should apply something like :

# CNOT CNOT CNOT CNOT... CNOT

# CNOT CNOT CNOT... CNOT

circuit = pq.QCircuit()

for i in range(0, nqubits - 1, 2): # Loop over even indices: i=0,2,...N-2

circuit.insert(pq.CNOT(qubits[i], qubits[i + 1]))

for i in range(1, nqubits - 1, 2): # Loop over odd indices: i=1,3,...N-3

circuit.insert(pq.CNOT(qubits[i], qubits[i + 1]))

return circuit

def Q_quantum_net(q_input_features, q_weights_flat, qubits, cubits, machine):

"""

The variational quantum circuit.

"""

machine = pq.CPUQVM()

machine.init_qvm()

qubits = machine.qAlloc_many(n_qubits)

circuit = pq.QCircuit()

# Reshape weights

q_weights = q_weights_flat.reshape([q_depth, n_qubits])

# Start from state |+> , unbiased w.r.t. |0> and |1>

circuit.insert(Q_H_layer(qubits, n_qubits))

# Embed features in the quantum node

circuit.insert(Q_RY_layer(qubits, q_input_features))

# Sequence of trainable variational layers

for k in range(q_depth):

circuit.insert(Q_entangling_layer(qubits, n_qubits))

circuit.insert(Q_RY_layer(qubits, q_weights[k]))

# Expectation values in the Z basis

prog = pq.QProg()

prog.insert(circuit)

exp_vals = []

for position in range(n_qubits):

pauli_str = "Z" + str(position)

pauli_map = pq.PauliOperator(pauli_str, 1)

hamiltion = pauli_map.toHamiltonian(True)

exp = machine.get_expectation(prog, hamiltion, qubits)

exp_vals.append(exp)

return exp_vals

class Q_DressedQuantumNet(Module):

def __init__(self):

"""

Definition of the *dressed* layout.

"""

super().__init__()

self.pre_net = Linear(128, n_qubits)

self.post_net = Linear(n_qubits, 10)

self.temp_Q = QuantumLayer(Q_quantum_net, q_depth * n_qubits, "cpu", n_qubits, n_qubits)

def forward(self, input_features):

"""

Defining how tensors are supposed to move through the *dressed* quantum

net.

"""

# obtain the input features for the quantum circuit

# by reducing the feature dimension from 512 to 4

pre_out = self.pre_net(input_features)

q_in = tensor.tanh(pre_out) * np.pi / 2.0

q_out_elem = self.temp_Q(q_in)

result = q_out_elem

# return the two-dimensional prediction from the postprocessing layer

return self.post_net(result)

x_train, y_train = load_mnist("training_data", digits=np.arange(10))

x_test, y_test = load_mnist("testing_data", digits=np.arange(10))

x_train = x_train[:2000]

y_train = y_train[:2000]

x_test = x_test[:500]

y_test = y_test[:500]

x_train = x_train / 255

x_test = x_test / 255

y_train = np.eye(10)[y_train].reshape(-1, 10)

y_test = np.eye(10)[y_test].reshape(-1, 10)

model = CNN()

model_hybrid = model

model_hybrid.fc3 = Q_DressedQuantumNet()

for param in model_hybrid.parameters():

param.requires_grad = False

model_param_quantum = load_parameters("./result/QCNN_TL_ALL.model")

model_hybrid.load_state_dict(model_param_quantum)

model_hybrid.eval()

loss_func = SoftmaxCrossEntropy()

eval_losses = []

correct = 0

n_eval = 0